The rapid adoption of AI-powered automation in DevSecOps is like handing power tools to highly capable interns: they have all the knowledge, but not necessarily the wisdom to use it well. Some things move faster, but not always more smoothly. We have reduced the sweat of toil, only to trade it for the sweat of non-deterministic surprises. Threat detection, policy enforcement, compliance reporting: all streamlined, all automated, all shiny.

But let’s not fool ourselves. With every new lever of automation comes a hidden trap door. AI-driven security is great, until it isn’t. Until it starts making decisions in the dark, failing quietly and then spectacularly, and leaving you with a compliance nightmare that no audit committee wants to wake up to.

What happens when AI-generated security policies do not align with actual regulatory requirements? What if automated threat detection starts flagging the wrong behaviors while missing the real threats? Who is accountable when an AI-driven enforcement action locks critical teams out of production at the worst possible time?

How do we ensure AI-driven security does not turn into a black-box bureaucracy running on vibes and probability? In a zero-trust world where no one gets a free pass — not even the machines — how do security leaders keep their organizations from automating themselves into a false sense of safety?

This article will break down how artificial intelligence (AI) is reshaping DevSecOps, the security pitfalls that come with it and how to balance the raw efficiency of automation with the realities of risk mitigation. In highly regulated industries, compliance isn’t optional, and blind trust in automation leaves you a whisker away from having your infrastructure dismantled by an attacker, an auditor or both.

The Promise of AI in DevSecOps

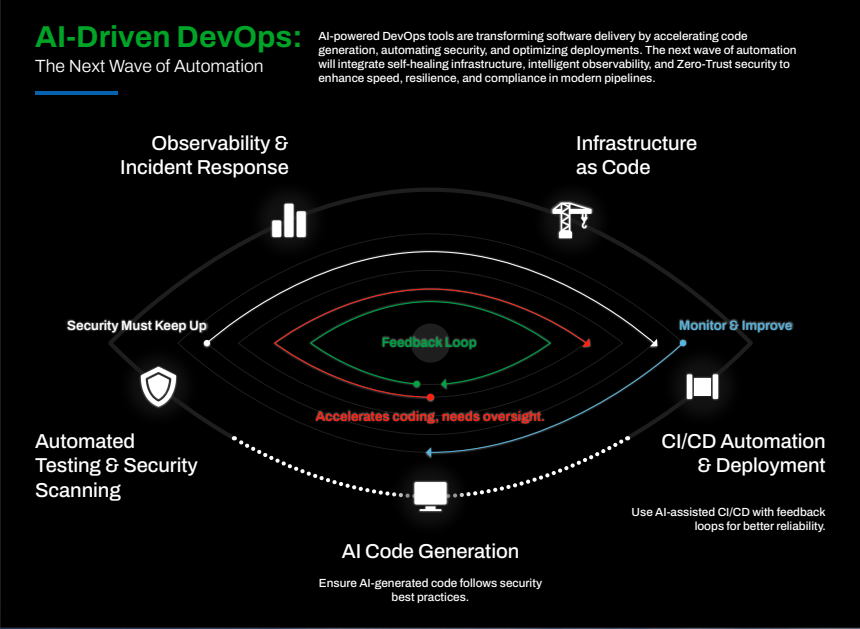

Traditional security approaches often struggle to keep pace with rapid software development cycles and cloud-native complexity. AI-powered automation is revolutionizing DevSecOps by:

- Automating Threat Detection: AI-driven tools analyze vast telemetry data to detect anomalies and predict potential breaches.

- Enhancing Vulnerability Management: AI accelerates the discovery and prioritization of software vulnerabilities, integrating security into CI/CD pipelines.

- Continuous Compliance Monitoring: AI-powered automation enables near-real-time policy checks against frameworks such as FedRAMP, NIST 800-53, ISO 27001 and DoD SRG IL5.

- Reducing False Positives: Machine learning (ML) models refine security alerts, reducing noise and allowing security teams to focus on real threats.
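To make "detect anomalies" concrete, here is a minimal sketch of the simplest statistical version of the idea: flag telemetry points that sit several standard deviations away from a baseline. It is a stand-in for illustration, not how any particular product works; commercial tools use far richer models, and the 3.0 threshold and the failed-login numbers are assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(baseline, observations, threshold=3.0):
    """Return (index, value, z) for observations that sit far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least 2 points
    flagged = []
    for i, x in enumerate(observations):
        if sigma == 0:
            # A perfectly constant baseline: any change at all is anomalous.
            z = 0.0 if x == mu else float("inf")
        else:
            z = (x - mu) / sigma
        if abs(z) >= threshold:
            flagged.append((i, x, z))
    return flagged


# Hypothetical telemetry: failed logins per minute during a quiet period.
baseline = [2, 3, 1, 4, 2, 3, 2, 3, 1, 2]
today = [3, 2, 41, 2]
for index, value, z in zscore_anomalies(baseline, today):
    print(f"minute {index}: {value} failed logins (z = {z:.1f})")
```

A fixed z-score threshold is easy to explain to an auditor, which is exactly why it also misses slow, low-volume attacks that never stray far from the mean.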

Andrew Clay Shafer, a foundational voice in the DevOps movement, shared his perspective on AI in governance, risk and compliance (GRC) automation:

“I am fascinated with GRC automation — AI-driven or not. I think large language models (LLMs) are mostly good for one thing: Generating. They can be quite bad at decision-making. Some of the thinking models are improving, but in many cases, other AI/ML approaches are far more appropriate than trying to throw LLMs at everything.”

AI and the Zero-Trust Model: Challenges & Risks

As organizations embrace zero-trust security, AI-driven automation introduces both opportunities and challenges:

- AI-Driven Security: A Double-Edged Sword

While AI enhances security enforcement, over-reliance on automation can lead to blind spots, especially when dealing with zero-day vulnerabilities or adversarial AI attacks.

- Risk: As with any security system, AI-powered controls are fallible — they may misclassify threats or fail to detect novel attack techniques.

- Mitigation: Implementing explainable AI (XAI) models is a challenge, but the due diligence goes a long way: XAI lets human analysts understand and validate AI-driven security decisions.
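One practical route to explainability is to prefer inherently interpretable models where they are good enough. In a linear risk score, each feature's contribution (weight times value) is an exact breakdown of the decision, so an analyst can see why an event was blocked. The sketch below assumes a hypothetical login-risk model; the feature names, weights and threshold are invented for illustration. For complex models, post-hoc attribution tools such as SHAP play a similar role.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights for a linear login-risk model (illustrative values only).
WEIGHTS = {
    "failed_logins_last_hour": 0.4,
    "new_device": 1.5,
    "impossible_travel": 3.0,
    "off_hours": 0.5,
}
BIAS = -2.0
BLOCK_THRESHOLD = 2.0


@dataclass
class Decision:
    score: float
    blocked: bool
    contributions: list[tuple[str, float]]  # largest magnitude first


def score_and_explain(event: dict) -> Decision:
    """Score an event and return the per-feature contributions behind the score."""
    contributions = [(name, w * event.get(name, 0)) for name, w in WEIGHTS.items()]
    score = BIAS + sum(c for _, c in contributions)
    # Sort so the analyst sees the strongest drivers of the decision first.
    contributions.sort(key=lambda fc: abs(fc[1]), reverse=True)
    return Decision(score, score >= BLOCK_THRESHOLD, contributions)


decision = score_and_explain(
    {"failed_logins_last_hour": 5, "new_device": 1, "impossible_travel": 1, "off_hours": 0}
)
print(f"score={decision.score:.1f} blocked={decision.blocked}")
for feature, contribution in decision.contributions:
    print(f"  {feature}: {contribution:+.1f}")
```

Here the analyst can see that impossible travel, not the handful of failed logins, drove the block, and can challenge that reasoning before the decision is trusted.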

- Compliance vs. Agility: The Balancing Act

AI-driven automation can help maintain compliance at scale, but regulatory frameworks like FISMA, FedRAMP and NIST RMF require a careful balance between automated security enforcement and human intervention.

- Risk: Automated compliance checks may miss context-specific security gaps, leading to non-compliance in highly regulated industries (e.g., finance, healthcare, government).

- Mitigation: Organizations should integrate AI-driven GRC tools with human validation to maintain accountability and regulatory alignment.
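A human-in-the-loop policy can be as simple as a routing rule that decides which automated findings may be acted on without sign-off. This is a minimal sketch under assumed inputs: the `Finding` fields, the severity labels and the 0.9 confidence cutoff are hypothetical, and the control IDs are only examples of NIST 800-53 identifiers.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_REMEDIATE = "auto_remediate"
    HUMAN_REVIEW = "human_review"
    LOG_ONLY = "log_only"


@dataclass(frozen=True)
class Finding:
    control_id: str    # e.g. a NIST 800-53 control such as "AC-6"
    severity: str      # "low" | "medium" | "high" | "critical"
    confidence: float  # the tool's own confidence in the finding, 0.0 to 1.0
    production: bool   # would the fix touch production systems?


def route(finding: Finding, min_auto_confidence: float = 0.9) -> Action:
    """Decide whether an automated finding may be acted on without a human."""
    if finding.severity in ("high", "critical") or finding.production:
        # High-impact changes always get a named human approver for accountability.
        return Action.HUMAN_REVIEW
    if finding.confidence >= min_auto_confidence:
        return Action.AUTO_REMEDIATE
    if finding.severity == "medium":
        return Action.HUMAN_REVIEW
    return Action.LOG_ONLY
```

For example, `route(Finding("AC-6", "critical", 0.99, False))` returns `Action.HUMAN_REVIEW` no matter how confident the tool is, which keeps a person accountable for exactly the decisions auditors care about.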

- AI Security Models: The Risk of Bias and Exploitation

AI models trained on biased or incomplete datasets can introduce vulnerabilities into security automation. Attackers may also use adversarial ML techniques to manipulate AI-driven security systems.

- Risk: Poisoning attacks can corrupt AI training data, causing security models to misclassify malicious activities as benign, while model drift over time can degrade accuracy and introduce blind spots.

- Mitigation: AI-driven security solutions must incorporate continuous model validation, adversarial testing, robust data hygiene and model drift detection to prevent bias, manipulation and performance degradation.
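Drift detection does not have to be exotic. One widely used statistic is the Population Stability Index (PSI), which compares how a model's scores are distributed today against a baseline window. This sketch assumes scores in [0, 1] and ten equal-width bins; the cutoffs in the comment are a commonly cited rule of thumb, not a standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Equal-width bins; clamp so 0.0 and 1.0 land in the first/last bin.
            idx = min(max(int(x * bins), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Rule of thumb (not a standard): < 0.1 stable, 0.1 to 0.25 worth watching,
# > 0.25 investigate and consider retraining.
baseline_scores = [i / 100 for i in range(100)]
current_scores = [min(s + 0.3, 1.0) for s in baseline_scores]
print(f"PSI = {psi(baseline_scores, current_scores):.2f}")
```

Running a check like this on a schedule, and alerting when it crosses the chosen threshold, turns "model drift detection" from a slide bullet into an operational control.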

The Power of DevOps for Rapid Development

DevOps has revolutionized software development, enabling rapid iteration, continuous integration and faster deployment cycles. By automating infrastructure provisioning, security testing and deployment workflows, DevOps teams can ship code faster without compromising security.

AI-powered DevOps takes this further by applying ML to code generation, while AIOps (AI for IT operations) applies it to anomaly detection, predictive maintenance and automated remediation. However, while AI can dramatically improve efficiency, it is fallible, and its limitations in coding can introduce security vulnerabilities, compliance issues and misconfigured infrastructure if left unchecked.

Below are some common mistakes that AI makes in DevOps coding, with real-world examples and code snippets to highlight potential risks. These might seem like obvious ‘gotchas’ — things every DevOps engineer knows instinctively — but in the rush of rapid development and automation, even seasoned pros can overlook them.

- AI Generating Hardcoded Secrets in Code

AI-driven coding assistants, like ChatGPT or Copilot, sometimes hardcode API keys, credentials and secrets directly into source code, creating serious security risks if not caught during code review.

🚨 Example: Hardcoded AWS Credentials in Python

An AI-generated DevOps script might unknowingly include credentials in plaintext:
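The original code sample is not reproduced here. As an illustration of the pattern, the sketch below shows what such a script can look like with boto3, the AWS SDK for Python. The key values are the placeholder examples from AWS's own documentation, not working credentials.

```python
# ANTI-PATTERN: do not copy. Credentials pasted straight into source code.
# Values are AWS documentation placeholders, not real keys.
AWS_ACCESS_KEY_ID = "AKIAIOSFODNN7EXAMPLE"
AWS_SECRET_ACCESS_KEY = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"


def get_s3_client():
    # Imported here only so this snippet can be loaded without boto3 installed.
    import boto3

    # Anyone with read access to this file, or to its git history, now holds the keys.
    return boto3.client(
        "s3",
        aws_access_key_id=AWS_ACCESS_KEY_ID,
        aws_secret_access_key=AWS_SECRET_ACCESS_KEY,
        region_name="us-east-1",
    )
```

Deleting the lines in a later commit does not help: the keys remain in the repository history until they are rotated.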

⚠️ Why is it Dangerous?

- Hardcoded secrets violate security best practices and can leak through repositories such as GitHub.

- Attackers scrape public repos for credentials using automated tools.

- AI-generated scripts may overlook compliance policies requiring secrets management via environment variables or vaults.
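Defenders can run the same kind of automated scan before code ever reaches a repository. Below is a minimal pre-commit style sketch: the AWS key-ID prefixes (`AKIA` for long-term keys, `ASIA` for temporary ones) are real, but the generic rule is a deliberately crude heuristic of my own. Dedicated scanners such as gitleaks or TruffleHog ship far more rules and should be preferred in practice.

```python
import re
from pathlib import Path

PATTERNS = {
    # AWS access key IDs: a 4-letter prefix followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Crude heuristic: a secret-sounding name assigned a quoted value of 8+ chars.
    "generic_secret_assignment": re.compile(
        r"""(?i)(?:secret|password|api_key|token)\w*\s*=\s*['"][^'"]{8,}['"]"""
    ),
}


def scan_text(text: str):
    """Return (line_number, rule_name) for each line that looks like it holds a secret."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits


def scan_files(paths) -> int:
    """Print findings for each file and return a shell-style exit code."""
    status = 0
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        for lineno, rule in scan_text(text):
            print(f"{path}:{lineno}: possible {rule}")
            status = 1
    return status
```

A pre-commit hook could pass the staged file paths to `scan_files` and abort the commit on a non-zero result.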

✅ Better Practice: Use Environment Variables or AWS Secrets Manager

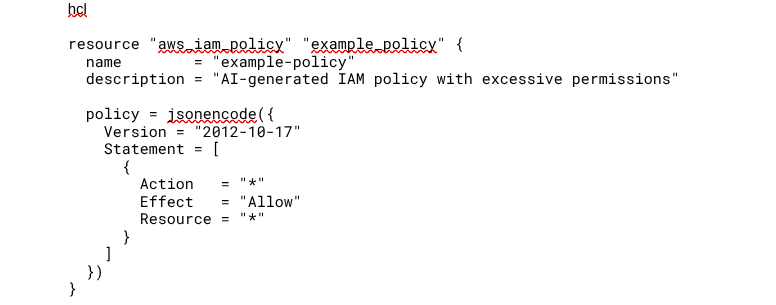

- AI Misconfiguring Infrastructure as Code (IaC) With Open Permissions

When AI generates Terraform or AWS CloudFormation templates, it can grant excessive permissions for simplicity, leading to security misconfigurations.

🚨 Example: Terraform Identity and Access Management (IAM) Role with Wildcard (*) Permissions

AI-generated Terraform might allow full administrative access, creating a zero-trust violation:
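The original Terraform listing is not reproduced here. For consistency with the other examples, the sketch below shows the IAM policy document such a role would effectively carry, written as a Python dict and rendered to the JSON that AWS expects. Allowing every action on every resource is equivalent to the AWS-managed `AdministratorAccess` policy.

```python
import json

# ANTI-PATTERN: the kind of policy an assistant may emit "to make it work".
# "Action": "*" on "Resource": "*" grants full administrator access.
overly_permissive_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "*",
            "Resource": "*",
        }
    ],
}

print(json.dumps(overly_permissive_policy, indent=2))
```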

⚠️ Why is it Dangerous?

- Grants full control over all AWS resources (high privilege escalation risk).

- Violates the principle of least privilege.

- Would fail compliance checks (e.g., FedRAMP, NIST, ISO 27001).
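This is the kind of check a pipeline can enforce before an AI-generated policy is applied. The sketch below flags `Allow` statements whose `Action` or `Resource` is a bare `*` or a service-wide wildcard such as `s3:*`. It is a simplified illustration: it ignores `NotAction`, conditions and many other cases that purpose-built tools such as IAM Access Analyzer policy validation or Checkov handle.

```python
from __future__ import annotations


def find_wildcard_grants(policy: dict) -> list[str]:
    """Flag Allow statements whose Action or Resource contains a broad wildcard."""
    problems = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for key in ("Action", "Resource"):
            values = stmt.get(key, [])
            if isinstance(values, str):
                values = [values]
            for value in values:
                # "*" matches everything; "s3:*" or "arn:aws:s3:::*" match a whole service.
                if value == "*" or value.endswith(":*"):
                    problems.append(f"Statement[{i}] {key} is too broad: {value!r}")
    return problems
```

A scoped statement such as `{"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::example-bucket/*"}` passes, while the administrator-style policy produces one finding for `Action` and one for `Resource`.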

✅ Better Practice: Restrict Permissions to Specific Actions and Resources

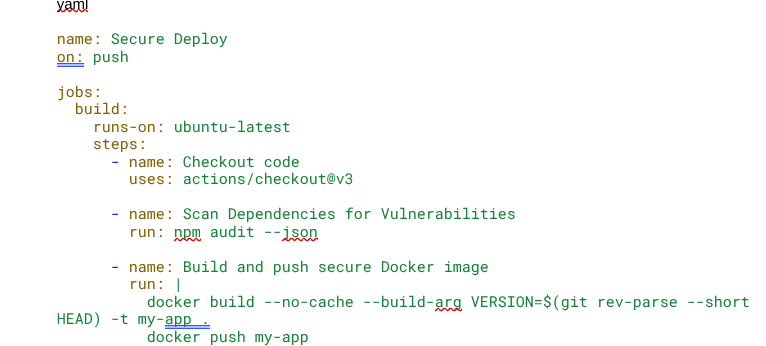

- AI Overlooking Secure CI/CD Pipeline Configurations

AI-powered continuous integration/continuous delivery (CI/CD) automation tools can inadvertently introduce insecure configurations, such as running builds as root or failing to sanitize inputs in deployment scripts.

🚨 Example: AI-Generated GitHub Actions Workflow with Unrestricted Docker Build

⚠️ Why is it Dangerous?

- No dependency pinning or integrity verification, so a tampered or substituted dependency can slip into the build.

- The container runs with default privileges, which means root unless the image sets a non-root user.

- No code scanning or security linting step before deployment.
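The workflow itself is not reproduced here, but two of the problems above can be caught mechanically in the Dockerfile such a workflow builds. This minimal sketch flags base images not pinned by `@sha256:` digest and a final stage with no non-root `USER`. It is a heuristic: it does not follow line continuations, and it cannot see a `USER` set inside the base image. Linters such as Hadolint cover this ground more thoroughly.

```python
from __future__ import annotations


def lint_dockerfile(text: str) -> list[str]:
    """Flag unpinned base images and a final stage that runs as root."""
    findings: list[str] = []
    stage_names: set[str] = set()
    final_user: str | None = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, rest = line.partition(" ")
        instruction = instruction.upper()
        # Drop flags such as --platform=... so the image name is the first token.
        tokens = [t for t in rest.split() if not t.startswith("--")]
        if instruction == "FROM" and tokens:
            image = tokens[0]
            # Remember "AS <name>" so later stages may refer to it without a digest.
            if len(tokens) >= 3 and tokens[1].upper() == "AS":
                stage_names.add(tokens[2])
            if image != "scratch" and image not in stage_names and "@sha256:" not in image:
                findings.append(f"base image not pinned by digest: {image}")
            final_user = None  # each new stage starts again as root by default
        elif instruction == "USER" and tokens:
            final_user = tokens[0]
    if final_user is None or final_user.split(":")[0] in ("root", "0"):
        findings.append("final stage runs as root (no non-root USER instruction)")
    return findings
```

Digest pinning trades convenience for reproducibility: the build only changes when someone deliberately bumps the digest, which is exactly the review point an AI-generated pipeline tends to skip.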

✅ Better Practice: Hardened CI/CD Pipeline with Security Checks
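The hardened workflow is not reproduced here. One building block such a pipeline typically contains is a gate step that fails the build when a scanner reports serious findings. The sketch below assumes the scanner output has already been converted to a simple JSON list of objects with `id`, `severity` and `package` keys; that schema is hypothetical, so real reports from a dependency or image scanner would need a small adapter.

```python
from __future__ import annotations

import json
import sys

SEVERITY_ORDER = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def gate(findings: list[dict], fail_at: str = "HIGH") -> tuple[bool, list[dict]]:
    """Return (passed, blocking_findings) for a list of scanner findings."""
    threshold = SEVERITY_ORDER[fail_at]
    blocking = [
        f
        for f in findings
        # Unrecognized or missing severities fail closed (treated as blocking).
        if SEVERITY_ORDER.get(str(f.get("severity", "")).upper(), threshold) >= threshold
    ]
    return (not blocking, blocking)


def run(report_path: str, fail_at: str = "HIGH") -> int:
    """Read a JSON findings report and return a process exit code for the CI step."""
    with open(report_path, encoding="utf-8") as fh:
        findings = json.load(fh)
    passed, blocking = gate(findings, fail_at)
    for f in blocking:
        print(f"BLOCKING {f.get('severity')}: {f.get('id')} in {f.get('package')}", file=sys.stderr)
    return 0 if passed else 1
```

A CI step could call `run("report.json")` and exit with its return value. Treating unknown severities as blocking means a change in the scanner's output format fails loudly instead of silently passing.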

Key Takeaways: AI in DevOps Needs Human Oversight

AI can accelerate DevOps, but it does not inherently understand security context. Instead, it generalizes from training data and patterns, which may include biased, outdated or incomplete security assumptions. While AI-driven automation can improve efficiency, it also introduces risks when blindly applied to security-critical decisions. The best approach to AI-powered DevSecOps includes:

- AI-Augmented Security Reviews: Use AI-driven static analysis and dependency scanning but ensure human validation for security-critical code changes.

- Context-Aware Access Controls: AI may suggest least privilege IAM policies, but human oversight is required to catch permissions carried over from past patterns that do not fit the current context.

- Dynamic Threat Detection, Not Just Static Patterns: AI excels at detecting known patterns but struggles with novel attack techniques — use continuous monitoring with anomaly detection.

- Automated Security Testing with Real-Time Feedback Loops: Retrain AI models on real-world security incidents to improve detection accuracy over time and counter model drift.

- Explainability & Trust in AI Security Decisions: AI’s black-box nature can introduce blind spots — use explainable AI (XAI) techniques to validate security recommendations before applying them in production.

AI is a powerful force multiplier in DevSecOps, but it requires structured feedback, human oversight and continuous validation to avoid reinforcing flawed security patterns. Organizations that blend AI speed with human expertise will gain efficiency without sacrificing security posture.

Best Practices: Implementing AI-Powered DevSecOps Securely

To harness AI’s full potential while minimizing security risks, organizations should follow these best practices:

- Adopt a Human-in-the-Loop Approach

AI should augment, not replace, security teams. Implement human review processes for high-impact security decisions, such as automated threat containment and policy enforcement.

- Leverage XAI for Transparency

Ensure AI-driven security tools provide explainable outputs that security analysts can validate. Avoid ‘black-box’ AI models with opaque decision-making.

- Integrate AI-Driven GRC Solutions for Compliance Automation

Use AI-powered GRC platforms to automate regulatory audits and real-time compliance enforcement while ensuring human oversight in high-risk scenarios.

- Train AI Models with Secure Data & Regular Adversarial Testing

Continuously test AI models for biases, vulnerabilities and adversarial threats to maintain trust and resilience in automated security systems.

- Implement Continuous AI Security Monitoring

Monitor AI-driven security decisions in real time, ensuring that security teams can intervene when necessary. AI security models should undergo regular retraining to adapt to evolving cyber threats.

Conclusion: The Future of AI in DevSecOps

AI isn’t magic, and it isn’t a security blanket either. It is just another tool, one that can break things faster and at scale, with a CI/CD pipeline to deliver the failure everywhere at once if you are not paying attention. DevSecOps is not about automating trust; it is about eliminating blind spots. ‘Ship it and fix it later’ does not work when your AI just auto-owned production. If you are betting on AI to save you, you are already losing.

The real game is designing security that learns, adapts and does not need a babysitter. Zero-trust is not a product, compliance is not security and ‘just use ML’ is not a strategy. AI won’t fix bad security hygiene, so build as if failure is inevitable, because it is. And if your AI starts committing to main, you might want to revoke its Secure Shell (SSH) keys before it deploys Skynet.

Are you integrating AI-powered automation into your DevSecOps strategy? Share your insights in the comments below!