As we close out 2023, we at DevOps.com wanted to highlight the most popular articles of the year. Following is the latest in our series of the Best of 2023.

Microsoft has been doing a lot to extend the coding ‘copilot’ concept into new areas. And at its Build 2023 conference, Microsoft leadership unveiled new capabilities in Azure AI Studio that will empower individual developers to create copilots of their own. This news is exciting, as it will enable engineers to craft copilots that are more knowledgeable about specific domains.

Below, we’ll cover some of the major points from the Microsoft Build keynote from Tuesday, May 23, 2023, and explore what the announcement means for developers. We’ll examine the copilot stack and consider why you might want to build copilots of your own.

What is Copilot?

A copilot is an artificial intelligence tool that assists you with cognitive tasks. To date, the idea of a copilot has been mostly associated with GitHub Copilot, which debuted as a technical preview in mid-2021 to bring real-time auto-suggestions right into your code editor.

“GitHub Copilot was the first solution that we built using the new transformational large language models developed by OpenAI, and Copilot provides an AI pair programmer that works with all popular programming languages and dramatically accelerates your productivity,” said Scott Guthrie, executive vice president at Microsoft.

More recently, Microsoft-owned GitHub announced Copilot X, which is powered by GPT-4 models. It adds GitHub Copilot Chat, a chat interface that accepts prompts in natural language.

But the Copilot craze hasn’t stopped there. Microsoft is actively integrating Copilot into other areas, like Windows and Microsoft 365. This means end users can write natural language prompts to spin up documents across the Microsoft suite of Word, Teams, PowerPoint and other applications. Microsoft has also built Dynamics 365 Copilot, Power Platform Copilot and Security Copilot, as well as copilot features for Nuance and Bing. With this momentum, it’s easy to imagine copilots for many other development environments.

Having built out these copilots, Microsoft began to see commonalities between them. This led to the creation of a common framework for copilot construction built on Azure AI. At Build, Microsoft unveiled how developers can use this framework to build out their own copilots.

Building Your Own Copilot

Foundational AI models are powerful, but they can’t do everything. One limitation is that they often lack access to real-time context and private data. One way to get around this is by extending models through plugins with REST API endpoints to grab context for the tasks at hand. With Azure, this could be accomplished by building a ChatGPT plugin inside VS Code and GitHub Codespaces to help connect apps and data to AI. But you can also take this further by creating copilots of your own and even leveraging bespoke LLMs.
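To make the plugin idea more concrete, here is a minimal sketch in Python that uses only the standard library. It assumes a hypothetical `/context` endpoint and a made-up in-memory `PRIVATE_DATA` store; neither comes from Microsoft's announcement. A real ChatGPT plugin would also need a manifest and an API description, which are left out here.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical private data that a foundation model cannot see on its own.
PRIVATE_DATA = {
    "build-status": "Pipeline 'main' last succeeded at 09:14 UTC.",
    "on-call": "The on-call engineer this week is Alex.",
}


def lookup_context(url: str) -> tuple[int, dict]:
    """Return (HTTP status, JSON-serializable body) for a request URL."""
    parsed = urlparse(url)
    if parsed.path != "/context":
        return 404, {"error": "unknown endpoint"}
    topic = parse_qs(parsed.query).get("topic", [""])[0]
    if topic not in PRIVATE_DATA:
        return 404, {"error": f"no context for topic '{topic}'"}
    return 200, {"topic": topic, "context": PRIVATE_DATA[topic]}


class ContextHandler(BaseHTTPRequestHandler):
    """Serves GET /context?topic=... as JSON for a plugin to call."""

    def do_GET(self):
        status, body = lookup_context(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def run_server(port: int = 8080) -> None:
    """Start the endpoint locally; the port is an arbitrary choice."""
    HTTPServer(("127.0.0.1", port), ContextHandler).serve_forever()
```

Calling `run_server()` and then requesting `/context?topic=on-call` would return the matching record as JSON, which the model could fold into its answer.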

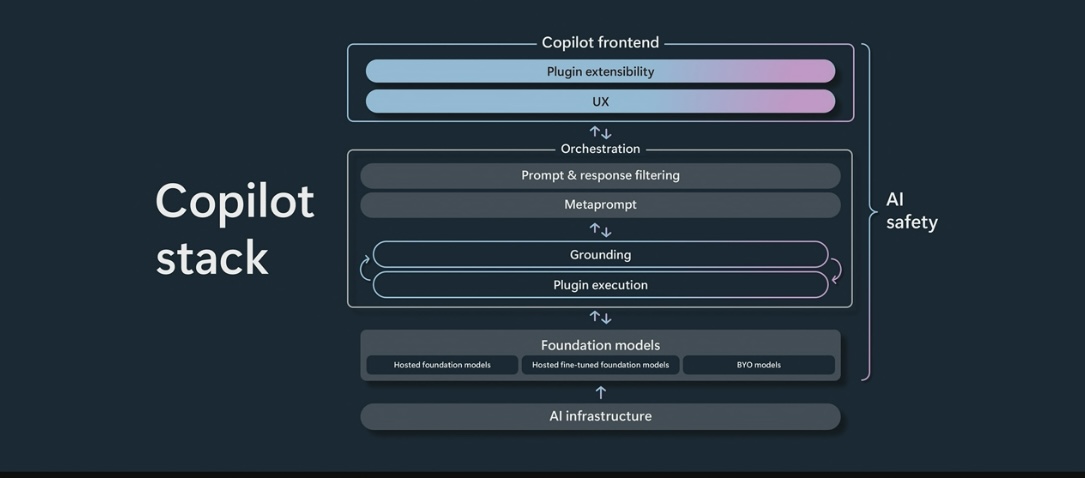

Understanding the Azure Copilot Stack

Azure AI Studio is a new part of the Azure OpenAI service. It enables developers to combine AI models like ChatGPT and GPT-4 with their own data, which could be used to build copilot experiences that are more intelligent and contextually aware. Users can tap into an open source LLM, use Azure OpenAI or bring their own AI model. The next step is creating a “meta-prompt” that provides a role for how the copilot should function.
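As a rough illustration of a meta-prompt, the sketch below places it ahead of the user's request as a system message. The `role`/`content` message shape follows the convention used by OpenAI-style chat APIs; the article does not show the exact format Azure AI Studio uses, and `build_messages` and `META_PROMPT` are hypothetical names chosen for this example.

```python
# Hypothetical meta-prompt that gives the copilot its role and boundaries.
META_PROMPT = """
You are a copilot for an internal deployment tool.
Answer only questions about deployments, and say so when you cannot help.
"""


def build_messages(meta_prompt: str, user_prompt: str) -> list[dict]:
    """Put the meta-prompt first so it frames every user request."""
    return [
        {"role": "system", "content": meta_prompt.strip()},
        {"role": "user", "content": user_prompt.strip()},
    ]


messages = build_messages(META_PROMPT, "How do I roll back the last release?")
```

The resulting list is what an application would hand to whichever chat model it has chosen.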

So, what’s the process like? First, you create a new Azure OpenAI resource. Then you add a data source, which can be as simple as a PDF file or Word document. Next, you choose whether the generative AI should augment its responses with the supplied data or limit itself to that data. Finally, the service can be exposed through an API and embedded in any application. The result could be a chat assistant that draws on private data and works well with your own apps.
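The choice between augmenting responses and limiting them to supplied data can be sketched in plain Python. This is not how Azure AI Studio works internally; it is a simplified stand-in that assumes a naive word-overlap search over a made-up `DOCUMENTS` list, with a hypothetical `limit_to_data` flag controlling the instruction given to the model.

```python
import re

# Made-up passages standing in for an uploaded PDF or Word document.
DOCUMENTS = [
    "Rollbacks are triggered with the release dashboard's Revert button.",
    "Staging deploys run every night at 02:00 UTC.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return up to top_k passages that share the most words with the question."""
    query = tokenize(question)
    scored = [(len(query & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(question: str, documents: list[str],
                          limit_to_data: bool = True) -> str:
    """Assemble a prompt that either restricts or augments the answer."""
    passages = retrieve(question, documents)
    if limit_to_data:
        rule = ("Answer using only the passages below. "
                "If they do not contain the answer, say you do not know.")
    else:
        rule = ("Use the passages below where relevant, "
                "and add general knowledge where helpful.")
    context = "\n".join(f"- {p}" for p in passages) or "- (no matching passages)"
    return f"{rule}\n\nPassages:\n{context}\n\nQuestion: {question}"
```

For instance, `build_grounded_prompt("When do staging deploys run?", DOCUMENTS)` selects the staging passage and tells the model to stay within it. A production system would typically replace the word-overlap search with a proper search index.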

Microsoft has also built tools to keep private data safe and encrypted throughout the AI orchestration process. “AI orchestration involves grounding, prompt design and engineering, evaluation and AI safety. These are kind of the core fundamentals for how you create great copilot experiences,” said Guthrie.

You Get a Copilot, and You Get a Copilot and …

The surge of copilots comes at a time when many companies are integrating LLMs and generative AI into their offerings. These AI additions can accelerate low-code development with advanced auto-complete features. And the Azure OpenAI service looks to be a promising platform for quickly spinning up custom copilots.

“AI is going to profoundly change how we work and how every organization operates, and every existing app is going to be reinvented with AI, and we’re going to see new apps be built with AI that weren’t possible before,” said Guthrie.

By constructing their own copilots, organizations can customize the generative AI experience and give it more context about specific domains to solve unique pain points. And integrating copilots into end-user interfaces will help make AI accessible to a broader audience.

“Software has eaten the world; now it’s AI’s turn,” said Thomas Dohmke, CEO, GitHub.