At its DASH 2023 conference, Datadog today launched Bits AI, a digital assistant that uses generative artificial intelligence (AI) from OpenAI’s ChatGPT platform to provide real-time recommendations for resolving issues surfaced by the company’s observability platform.

At the same time, the company announced it is extending the reach of that platform to enable IT organizations to monitor the large language models (LLMs) many are now embedding within custom applications.

Datadog also released an LLM Observability platform in beta, which brings together data from applications, models and various integrations to help engineers discover and resolve, in real time, AI issues in applications, such as performance degradation, drift and hallucinations.

Those extensions complement an existing ability to monitor the usage patterns of application programming interface (API) calls made to the OpenAI ChatGPT platform.

Yrieix Garnier, vice president of product at Datadog, said the generative AI capabilities in beta are enabled by data the company used to extend the core capabilities of OpenAI’s ChatGPT platform.

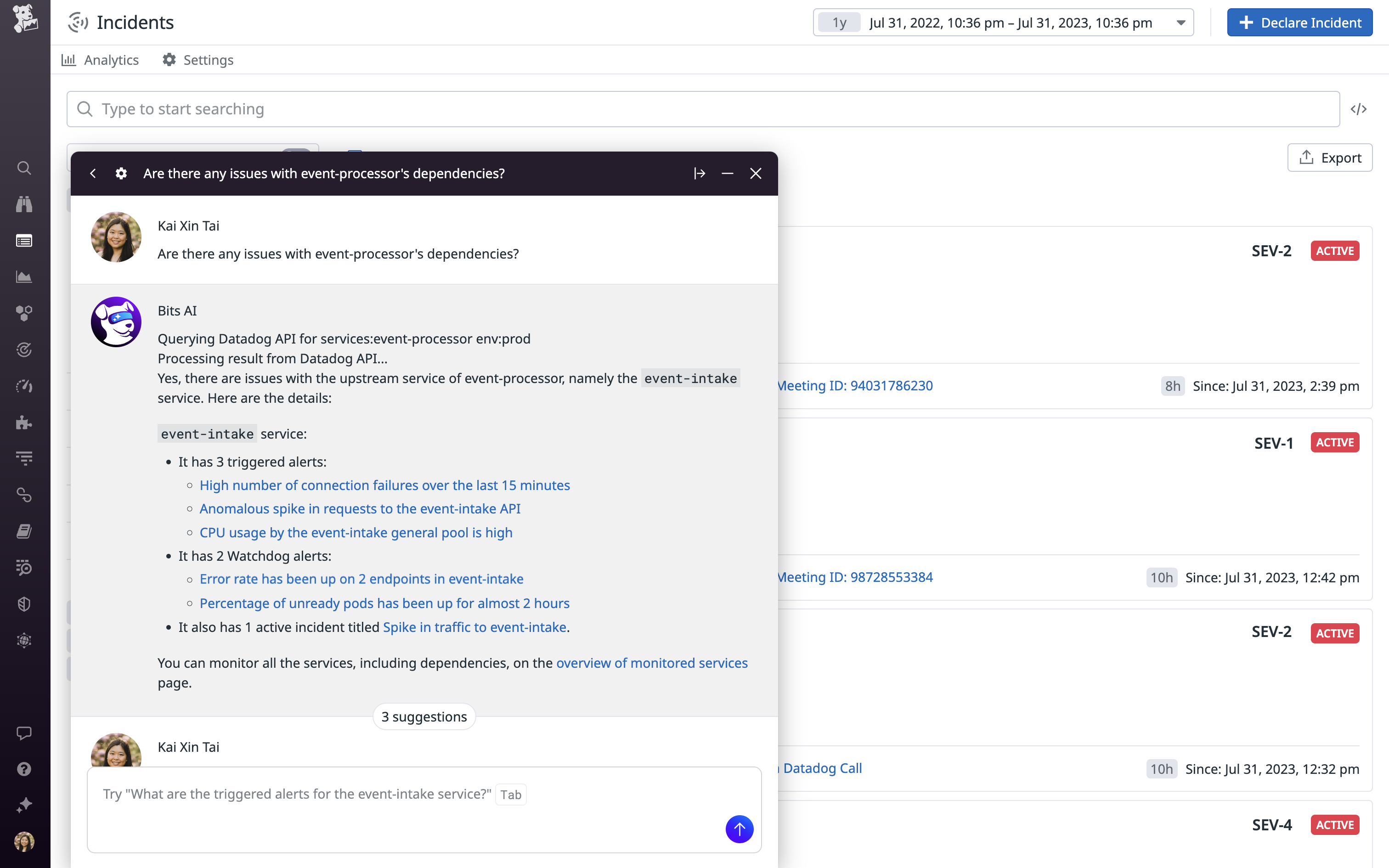

Bits AI learns from both customer data and other sources to answer queries via a natural language interface that makes the Datadog platform more accessible to a much wider range of IT professionals, noted Garnier.

The overall goal is to reduce the mean time to resolution (MTTR) for issues by, for example, explaining errors and suggesting code fixes that can be easily shared with developers via a Slack channel.

Those capabilities extend the functionality of the machine learning algorithms that Datadog has already embedded within its platform, noted Garnier.

While the generative AI capabilities added to the core Datadog platform will make DevOps teams more efficient, it’s not quite clear what role those teams will play in monitoring LLMs created by data science teams. In theory, DevOps teams should be responsible for optimizing the performance of any element of an application, but most DevOps engineers today have little experience with those models.

At the very least, however, DevOps engineers should be able to share with data science teams the root cause of any issue that is adversely affecting those LLMs.

It’s not clear how quickly LLMs will be incorporated into applications, but most DevOps teams should expect them to soon be pervasive. In effect, Datadog is now providing DevOps teams with the AI tools they will need to effectively monitor applications infused with AI capabilities. The issue is not so much whether DevOps teams will be taking advantage of AI to better manage application environments but how quickly. Given the overall level of complexity DevOps teams regularly encounter, few are likely to want to take on that challenge without some level of AI augmentation.

The challenge is determining exactly which DevOps tasks might be best handled by a machine, so that software engineers can focus on more complex issues they previously didn’t have the time to address.