Deepgram has developed a framework that leverages artificial intelligence (AI) technologies to enable developers to automate multiple tasks using a single voice command.

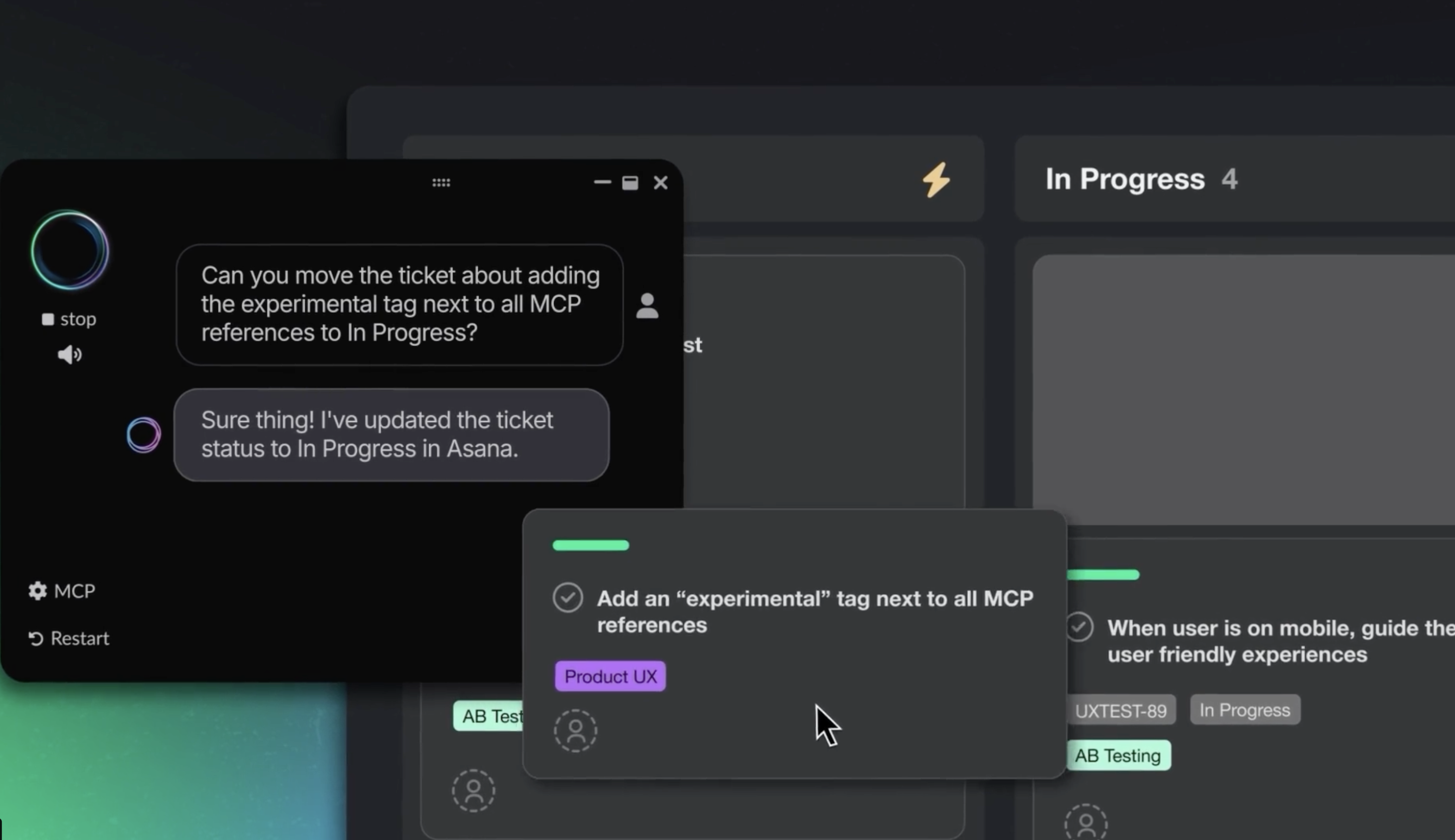

Natalie Rutgers, vice president of product for Deepgram, said Deepgram Saga is essentially a voice operating system that can be layered on top of existing tools and platforms. It converts natural speech into a set of prompts that invoke tools through the Model Context Protocol (MCP) developed by Anthropic.

With a single voice command, for example, an application developer can ask Deepgram Saga to run tests, commit changes, deploy and then update the team, using any tool or platform that exposes an MCP server. That eliminates the need to type commands or switch between AI chat windows.

Alternatively, an application developer can verbally describe an idea, which Deepgram Saga converts into a set of prompts for AI coding tools to execute. Deepgram Saga can also capture that stream of consciousness and turn it into structured documentation, tickets or pull request descriptions.

Deepgram has been providing developers with tools to build speech-enabled applications for the better part of a decade but is now offering an AI framework designed specifically for software engineering tasks. Deepgram Saga understands technical context, domain-specific terminology and the nuanced language developers commonly use, noted Rutgers.

It’s not clear just how readily application developers might give up their keyboards, but there is a wide range of tasks that many of them would probably prefer to assign verbally to an AI agent. An application developer would still need to review the output generated by those AI agents, but a voice interface makes it easier to chain various tasks together into a workflow.

The pace at which application development teams are embracing AI is already accelerating. A recent Futurum Group survey finds that 41% of respondents expect generative AI tools and platforms will be used to generate, review and test code. The survey also finds that, over the next 12 to 18 months, organizations plan to increase spending not only on AI code generation (83%) and agentic AI technologies (76%) but also on familiar existing tools that have been augmented with AI. Deepgram is essentially making the case that a speech interface can accelerate the adoption of these tools by eliminating the need to master prompt engineering.

Each developer will need to decide how willing they are to give up typing altogether, but one thing is certain: many of them will spend more time reviewing the output of AI agents than writing code and creating scripts they then have to maintain. That shift may require a significant amount of cultural adjustment, and there may still be times when it is simply easier to type something than to explain it verbally.

Regardless of any attachment developers might have to typing, the need to become more productive will almost certainly push them to rely far less on keyboards once a faster speech interface for issuing orders to an army of AI agents is readily available.