Quiz #26 is part of our series of quiz questions on troubleshooting AWS CloudWatch alarms. If this interests you, also look at our recent quizzes on AWS ELB Performance, Debugging Message Queue alert, Neptune Write IOPS Spiking, and Slow Redshift.

Quiz #26 is:

You are on-call and receive an alarm notification for your Amazon Kinesis stream. The alarm indicates “Increased PutRequestError Throttling”.

Which of the following is the MOST LIKELY initial troubleshooting step to identify the cause of this error?

1. Check the Kinesis shard health metrics for any degraded shards.

2. Increase the Kinesis stream’s write capacity units (WCUs).

3. Review the application logs for any exceptions related to Kinesis writes.

4. Restart the Kinesis stream to reset any internal throttling counters.

5. Investigate the application code responsible for writing data to Kinesis.

Correct Answer: 5. Investigate the application code responsible for writing data to Kinesis.

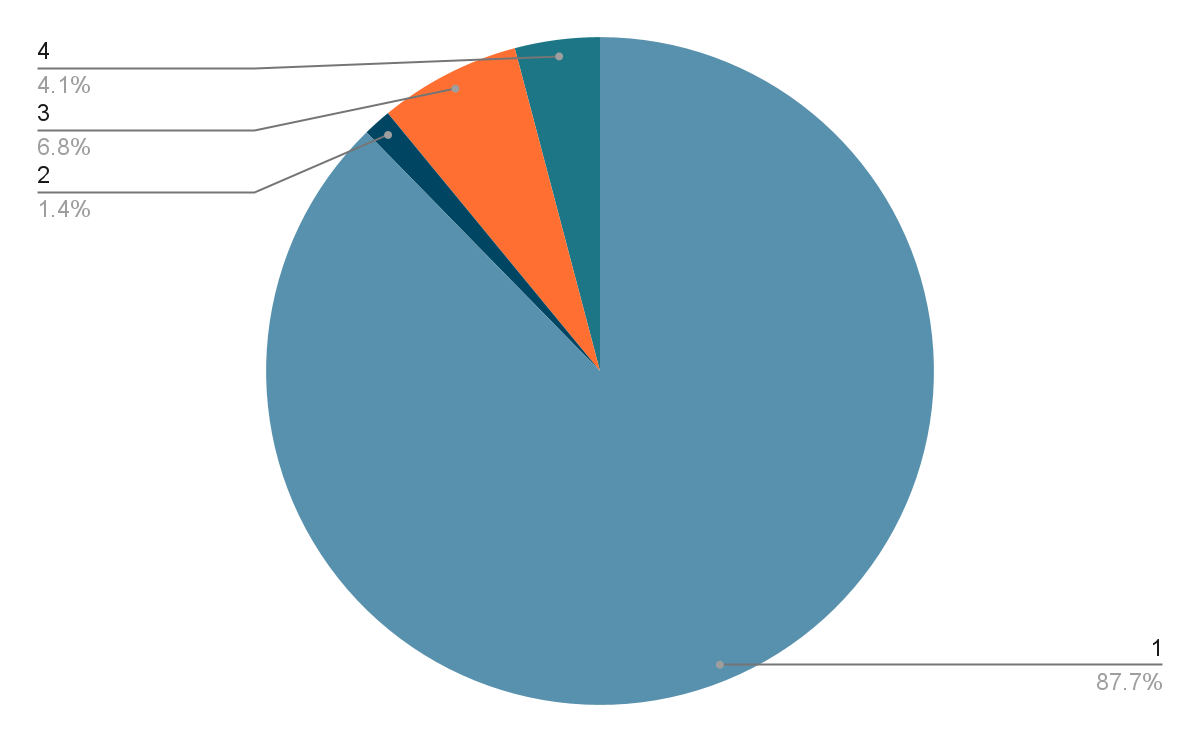

73 people answered this question and 7% got it right.

Hey there, fellow SREs! As veterans in the trenches of keeping our systems humming, we’ve all encountered our fair share of Amazon Kinesis surprises. One such head-scratcher is the dreaded “Increased PutRequestError Throttling” alarm. It can send chills down your spine, but fear not! In this blog, we’ll delve into why this throttling happens and why choice #5 is your best bet for conquering this Kinesis beast.

Understanding PutRequestError Throttling:

Imagine Kinesis as a busy highway. You (your application) are the trucks trying to deliver data (packages). To ensure smooth traffic flow, Kinesis enforces a speed limit: in provisioned mode, each shard accepts up to 1,000 records or 1 MB of writes per second. Exceed this limit, and you get flagged for throttling: Kinesis rejects the excess writes with a ProvisionedThroughputExceededException, essentially telling your trucks to slow down for a bit.
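
To make the speed limit concrete, here is a minimal sketch that estimates how many shards a given write load needs. It uses the documented provisioned-mode limits of 1,000 records and 1 MB per second per shard (1 MB is treated as 1,000,000 bytes here, which is an assumption), and it assumes partition keys spread records evenly across shards. The class and method names are hypothetical, not part of any AWS SDK.

```java
/** Back-of-the-envelope check of a write load against per-shard limits (provisioned mode). */
final class ShardMath {
    // Documented per-shard write limits for Kinesis Data Streams.
    static final long MAX_RECORDS_PER_SEC = 1_000;
    static final long MAX_BYTES_PER_SEC = 1_000_000; // 1 MB, taken as 10^6 bytes (assumption)

    /**
     * Minimum number of shards needed for the given load.
     * Assumes partition keys distribute records evenly; a hot key needs more headroom.
     */
    static long shardsNeeded(long recordsPerSec, long avgRecordBytes) {
        long byRecords = ceilDiv(recordsPerSec, MAX_RECORDS_PER_SEC);
        long byBytes = ceilDiv(recordsPerSec * avgRecordBytes, MAX_BYTES_PER_SEC);
        // A stream always has at least one shard; the tighter of the two limits wins.
        return Math.max(1, Math.max(byRecords, byBytes));
    }

    /** Integer division rounded up, for non-negative a and positive b. */
    static long ceilDiv(long a, long b) {
        return (a + b - 1) / b;
    }
}
```

For instance, `ShardMath.shardsNeeded(2500, 200)` is bounded by the record count, while `ShardMath.shardsNeeded(500, 5000)` is bounded by bytes. If the stream has fewer shards than the estimate, throttling is expected no matter how well the code retries.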

So, why do we care about PutRequestError Throttling? Because it indicates your application is pushing data faster than Kinesis can handle. This can lead to:

- Data loss: Kinesis rejects throttled records, so they are lost unless your producer retries them.

- Increased latency: Your application waits for throttling to clear, slowing things down.

- Unnecessary costs: Scaling up the stream’s write capacity (adding shards) to compensate for inefficient writes is like adding more lanes to a highway with poorly optimized trucks!

Why Code Review is Your Debugging BFF (Not Other Options):

Now, let’s analyze the answer choices and see why choice #5 reigns supreme:

- Shard health metrics: While keeping an eye on shard health is important, it won’t tell you why your application is making excessive PutRequests.

- Increase WCUs: This might seem tempting, but it treats the symptom rather than the cause. (Strictly speaking, Kinesis write capacity is provisioned in shards; WCUs are a DynamoDB term.) Throwing more resources at the problem masks underlying inefficiencies in your code.

- Review application logs: Absolutely! Logs are valuable for identifying errors, but for throttling, you need to dig deeper into the code responsible for Kinesis interactions.

- Restart Kinesis stream: Kinesis Data Streams is a managed service with no restart operation, and there are no throttling counters to reset; the limits are enforced per shard, every second. Plus, the root cause remains unaddressed.

- Investigate application code: Bingo! This is where the magic happens. Here’s where you put on your detective hat and start examining your Kinesis interactions:

  - Are you batching writes efficiently? Grouping records into a single PutRecords call (up to 500 records per request), instead of issuing one PutRecord call per record, cuts request overhead and can significantly improve throughput.

  - How are you handling retries on throttling errors? Exponential backoff, ideally with jitter, is a good strategy; avoid bombarding Kinesis with retries immediately after throttling.

  - Are you using the AWS SDK optimally? The official SDKs have built-in retry and backoff settings for throttled requests. Make sure you’re leveraging them effectively.
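
The retry advice above can be sketched as exponential backoff with "full jitter": each retry waits a random time between zero and a cap that doubles per attempt. This is a minimal illustration using only the Java standard library; the `Backoff` class, its method names, and the parameter values are hypothetical. A real writer would retry only on throttling errors rather than on every exception.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ThreadLocalRandom;

/** Exponential backoff with full jitter (a sketch, not an SDK feature). */
final class Backoff {
    /** Upper bound of the delay before retry number `attempt` (0-based): base * 2^attempt, capped. */
    static long maxDelayMillis(int attempt, long baseMillis, long capMillis) {
        // Clamp the shift so large attempt numbers cannot overflow for typical base delays.
        long exponential = baseMillis << Math.min(attempt, 20);
        return Math.min(capMillis, exponential);
    }

    /** Full jitter: a uniformly random delay in [0, maxDelayMillis]. */
    static long jitteredDelayMillis(int attempt, long baseMillis, long capMillis) {
        long max = maxDelayMillis(attempt, baseMillis, capMillis);
        return ThreadLocalRandom.current().nextLong(max + 1);
    }

    /**
     * Runs `call` up to maxAttempts times, sleeping a jittered, growing delay
     * after each failure. Rethrows the last failure when attempts run out.
     */
    static <T> T retry(Callable<T> call, int maxAttempts, long baseMillis, long capMillis)
            throws Exception {
        for (int attempt = 0; ; attempt++) {
            try {
                return call.call();
            } catch (Exception e) {
                // Simplification: real code should only retry throttling errors.
                if (attempt + 1 >= maxAttempts) {
                    throw e;
                }
                Thread.sleep(jitteredDelayMillis(attempt, baseMillis, capMillis));
            }
        }
    }
}
```

A call such as `Backoff.retry(() -> writer.putBatch(batch), 5, 100, 5_000)` (with `writer` and `batch` standing in for your own objects) would wait at most 100 ms, 200 ms, 400 ms, and 800 ms between attempts. The randomness keeps many producers from retrying in lockstep.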

Code Sample (Java with AWS SDK v2):

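    import java.util.ArrayList;
    import java.util.List;

    import software.amazon.awssdk.core.SdkBytes;
    import software.amazon.awssdk.services.kinesis.KinesisClient;
    import software.amazon.awssdk.services.kinesis.model.ProvisionedThroughputExceededException;
    import software.amazon.awssdk.services.kinesis.model.PutRecordsRequest;
    import software.amazon.awssdk.services.kinesis.model.PutRecordsRequestEntry;
    import software.amazon.awssdk.services.kinesis.model.PutRecordsResponse;

    public class EfficientKinesisWriter {

        /** Minimal shape of the records this sample writes (an assumption; define your own type). */
        public interface Record {
            String getPartitionKey();
            byte[] getData();
        }

        /** Signals that Kinesis rejected some or all of a batch. */
        public static class ThrottlingException extends Exception {
            public ThrottlingException(String message, Throwable cause) {
                super(message, cause);
            }
        }

        private final KinesisClient kinesisClient;
        private final String streamName;

        public EfficientKinesisWriter(KinesisClient kinesisClient, String streamName) {
            this.kinesisClient = kinesisClient;
            this.streamName = streamName;
        }

        /** Sends the records in a single PutRecords call (at most 500 records per call). */
        public void putBatch(List<Record> records) throws ThrottlingException {
            List<PutRecordsRequestEntry> requestEntries = new ArrayList<>();
            for (Record record : records) {
                requestEntries.add(PutRecordsRequestEntry.builder()
                        .data(SdkBytes.fromByteArray(record.getData()))
                        .partitionKey(record.getPartitionKey())
                        .build());
            }

            PutRecordsRequest request = PutRecordsRequest.builder()
                    .streamName(streamName)
                    .records(requestEntries)
                    .build();

            try {
                PutRecordsResponse response = kinesisClient.putRecords(request);
                // PutRecords does not throw when only some records are rejected;
                // those failures are reported in the response instead.
                Integer failed = response.failedRecordCount();
                if (failed != null && failed > 0) {
                    throw new ThrottlingException(failed + " records were rejected (throttled or failed)", null);
                }
            } catch (ProvisionedThroughputExceededException e) {
                // Implement proper backoff logic here
                throw new ThrottlingException("Throttling encountered", e);
            }
        }
    }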
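
Two helpers usually sit around a writer like the one above: splitting a large list into PutRecords-sized batches, and picking out only the rejected records for a retry. The sketch below uses plain Java so it runs without the SDK. The `Batches` class and its method names are hypothetical, and the `errorCodes` list stands in for the per-record results of a PutRecords response, which come back in request order with a non-null error code for each failed record (in SDK v2, `PutRecordsResultEntry.errorCode()`). It only enforces the 500-record limit; the per-request size limit is not checked here.

```java
import java.util.ArrayList;
import java.util.List;

/** Batching helpers for PutRecords-style writes (a sketch, independent of the SDK). */
final class Batches {
    static final int MAX_RECORDS_PER_PUT = 500; // PutRecords per-request record limit

    /** Splits items into consecutive chunks of at most maxSize elements. */
    static <T> List<List<T>> chunk(List<T> items, int maxSize) {
        if (maxSize <= 0) {
            throw new IllegalArgumentException("maxSize must be positive");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int start = 0; start < items.size(); start += maxSize) {
            int end = Math.min(start + maxSize, items.size());
            chunks.add(new ArrayList<>(items.subList(start, end)));
        }
        return chunks;
    }

    /**
     * Returns the records that should be retried.
     * errorCodes must be in the same order as sent; null means the record succeeded.
     */
    static <T> List<T> failedOnly(List<T> sent, List<String> errorCodes) {
        if (sent.size() != errorCodes.size()) {
            throw new IllegalArgumentException("sent and errorCodes must have the same size");
        }
        List<T> toRetry = new ArrayList<>();
        for (int i = 0; i < sent.size(); i++) {
            if (errorCodes.get(i) != null) {
                toRetry.add(sent.get(i));
            }
        }
        return toRetry;
    }
}
```

Retrying only the rejected records, after a backoff delay, avoids re-sending data that Kinesis already accepted and keeps the retry traffic itself from triggering more throttling.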

Remember: By meticulously reviewing your application code, you can identify and rectify the root cause of PutRequestError Throttling. This ensures smooth data flow, prevents data loss, and keeps your costs under control.


Troubleshooting modern cloud environments is hard and expensive. There are too many alerts, too many changes, and too many components. That’s why Webb.ai uses AI to automate troubleshooting. See for yourself how you can become 10x more productive by letting AI conduct troubleshooting to find the root cause of the alert: Early Access Program.