The tally of smartphone usage worldwide is mind-numbing: even as far back as 2012, more people on earth had access to a mobile phone than to a toothbrush. Even more mind-numbing must be the number of those mobile users who, at any given moment, are in some state of rage while waiting for their content to finish downloading. Mary Meeker’s 2014 “State of the Web” report states that 30% of all mobile phones are smartphones, and smartphone usage is growing at a compounding rate.

I’m confident that my own frustration resonates with those millions of users. And it’s only getting worse. I think about the plight of the next N billion mobile users who will depend on constantly overburdened mobile networks in all parts of the world.

I was fortunate to be able to turn that frustration into a mission when I founded PacketZoom. I had sketched the blueprint of a remedy while working at Google, and starting in 2013 I spent two years productizing and trialing the technology during PacketZoom’s heavy R&D phase. But we weren’t content to tweak the existing protocols to save a few milliseconds here and there, and neither were the countless app developers around the world who faced the same conundrum. Why not? Studies have shown that when mobile downloads take more than six seconds, about half of users abandon the app they are using, unaware that the network, not the app, is to blame. It’s well past time to stop fixing the problem incrementally. [citation: https://blog.kissmetrics.com/loading-time/]

The turning point for me came on a trip to India, when I tried to load the first screen of an app while riding a moving subway. Only extreme restraint kept me from bludgeoning my phone, which, of course, was not the culprit. Clearly, the problem could not be solved with the prevailing technology, which had been jury-rigged over 40 years to serve every new use case that came along. Maybe that time-honored but worn-out technology had to be replaced!

Over the last few years we have seen an explosion of apps on mobile phones. The next few years promise an order of magnitude more connected devices as the “Internet of Things” takes hold. If we keep using the same old protocols, the interminable waits for mobile content will become intolerable.

Time to Lose Four Decades of Losses

So what exactly is the problem with the legacy protocols? After all, they’ve been made to work for today’s use cases. Well, allow me to explain.

Let’s start with IP (Internet Protocol). IP addresses were intended to be solidly and reliably attached to a computer forever, in the same way a street address is solidly and reliably attached to a house. (And note that a smartphone is just another computer.) A packet sent to that address could go to only one place: the one house or, in our case, the one computer. But now, in the year 2015, the IP address is but an ephemeral property of a mobile device. It’s as if everyone now lives in a van, and your address depends on where you parked at the end of the day.

Today, the IP address of your mobile device might change while you walk from your office to the parking lot. As you move from your office WiFi to the LTE connection outside your office, and as you move through myriad disconnections and reconnections while travelling in personal or public transportation, you can be “taking” and “releasing” dozens of IP addresses during the course of your commute.
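Why does a new address hurt so much? A TCP connection is identified by four values: source IP, source port, destination IP and destination port. Here’s a minimal sketch, using a hypothetical connection table and documentation-range addresses (illustrative only, not PacketZoom code), of what the server sees once the phone’s source address changes:

```python
from typing import Dict, NamedTuple, Optional


class FourTuple(NamedTuple):
    """The four values a TCP endpoint uses to match a packet to a connection."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical server-side table: connection key -> bytes delivered so far.
connections: Dict[FourTuple, int] = {}

# The phone, on office Wi-Fi, opens a connection and receives 400 KB.
wifi = FourTuple("192.0.2.10", 51000, "198.51.100.7", 443)
connections[wifi] = 400_000

# Walking to the parking lot: same app, same server, but the phone is now on
# LTE with a new source address. Everything else is left unchanged here.
lte = wifi._replace(src_ip="203.0.113.55")


def lookup(key: FourTuple) -> Optional[int]:
    """Return progress for a known connection, or None if nothing matches."""
    return connections.get(key)


print(lookup(wifi))  # 400000
print(lookup(lte))   # None: as far as TCP is concerned, this is a stranger
```

The old connection’s progress is still filed under the old key, but packets from the new address match nothing, so the app has to open a brand-new connection.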

The upshot is that when an app on your mobile device is communicating with the cloud, every change of IP address forces the app to restart the communication from the beginning (a so-called “cold start”) rather than resume from the point where it was interrupted. That’s one very big problem: time is lost retransmitting data that had already arrived.
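To put a rough number on that duplication, here’s a toy model (my own simplification, not a measurement): each connection can carry a fixed, hypothetical number of bytes before the link drops, and we count how many bytes cross the air to deliver one file with and without the ability to resume.

```python
def bytes_on_the_wire(total: int, session_limits: list[int], resumable: bool) -> int:
    """Bytes transmitted to deliver a `total`-byte file when the i-th
    connection drops after carrying session_limits[i] bytes (hypothetical
    values). After the listed drops, one final connection finishes the job.

    resumable=False: every reconnection is a cold start from byte 0.
    resumable=True:  every reconnection picks up where the last one stopped.
    """
    sent = 0     # everything pushed through the radio, including repeats
    offset = 0   # position in the file the current connection starts from
    for limit in session_limits:
        remaining = total - offset
        if remaining <= limit:
            return sent + remaining   # finished before this link dropped
        sent += limit                 # link drops after `limit` bytes
        offset = offset + limit if resumable else 0
    return sent + (total - offset)    # final, uninterrupted connection


drops = [400_000, 300_000, 200_000]
print(bytes_on_the_wire(1_000_000, drops, resumable=False))  # 1900000
print(bytes_on_the_wire(1_000_000, drops, resumable=True))   # 1000000
```

With three drops, the cold-starting app pushes 1.9 MB through the radio to deliver a 1 MB file; resuming from the last received byte keeps it at exactly 1 MB.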

What’s more, each reconnection to the network requires establishing a new session over TCP (Transmission Control Protocol), a 40-year-old technology. But guess what? Each new TCP session starts cold and, under TCP’s slow-start algorithm, has to gently ramp up to full speed every single time. The time lost to that ramp-up adds to the time already lost to the constant disconnections and reconnections that occur in every mobile environment.
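Here’s an idealized sketch of that ramp-up, assuming no packet loss, an initial congestion window of 10 segments (the value RFC 6928 allows, which many current stacks use), and a window that doubles every round trip until it hits a hypothetical path cap. Real TCP also has a slow-start threshold, loss recovery and the handshake round trip, none of which is modeled here.

```python
import math


def rtts_to_deliver(segments: int, initial_window: int = 10,
                    max_window: int = 1000) -> int:
    """Round trips needed to send `segments` full-size segments on one
    connection under idealized slow start (no loss, no handshake).
    `max_window` is a hypothetical stand-in for the path's capacity."""
    cwnd = initial_window   # congestion window, in segments
    sent = 0
    rtts = 0
    while sent < segments:
        sent += cwnd                        # one full window per round trip
        rtts += 1
        cwnd = min(cwnd * 2, max_window)    # exponential growth, capped
    return rtts


# A 1 MB response at 1460 bytes of payload per segment.
n_segments = math.ceil(1_000_000 / 1460)
cold = rtts_to_deliver(n_segments)                      # brand-new connection
warm = rtts_to_deliver(n_segments, initial_window=1000) # already at full speed
print(n_segments, cold, warm)  # 685 7 1
```

At a 100 ms round-trip time that is roughly 700 ms versus 100 ms for the same bytes, and a phone that reconnects several times during a commute pays the cold-start price on every reconnection.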

TCP has been honed over the years to work “tolerably well” for general use over all possible kinds of networks. It was originally designed to connect huge computers, administered by professional sysadmins and housed in meticulously maintained facilities with triple-redundant power. It does the job its least-common-denominator design is expected to do: get a stream of data from one computer to another (i.e., from one IP address to another) reliably.

Reliable delivery is what TCP guarantees. What it doesn’t guarantee, however, and doesn’t even address, is speed. It uses the most conservative method possible to get your data packets from one place to another. As a result, user-level protocols (e.g., HTTP) get cluttered with hacks atop other hacks that try to work around TCP’s lack of concern for speed.

Consign TCP and HTTP to Their Rightful Place

Now imagine this conversation, sometime in the early 2000s, among boffins at companies making web browsers:

Boffin A: “TCP doesn’t allow us to use the full bandwidth of the available channel (DSL, dial-up, or those newfangled cable modems).”

Boffin B: “No problem! How about we open six TCP connections at the same time? Let them fight it out among themselves.”

Boffin A: “Done.”

Boffin B: “We’re geniuses!”

Well, in fact, web browsers have been built around this approach ever since that ill-fated day. Meanwhile, the web has run on the Hypertext Transfer Protocol (HTTP) version 1.1 for the last decade and a half! As an application-level protocol, HTTP knows nothing about speed either. And so browser vendors have, over the years, come up with a mishmash of folklore-based tips, tricks, and hacks to somehow keep this train running.
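Why did the boffins’ hack work at all? Under slow start, each connection begins with a small window, so opening six connections effectively multiplies the starting window by six. Here’s a rough sketch, assuming the page is split evenly across connections, no loss, no bandwidth cap, and an initial window of 3 segments (about what RFC 3390 permitted for a 1460-byte segment size in that era). The function name and parameters are illustrative, not any browser’s actual logic.

```python
import math


def rtts_with_parallel_connections(segments: int, connections: int,
                                   initial_window: int = 3) -> int:
    """Round trips to send `segments` segments split evenly across
    `connections` parallel TCP connections, each in idealized slow start
    (window doubles every RTT, no loss, no bandwidth cap)."""
    per_connection = math.ceil(segments / connections)
    cwnd = initial_window
    sent = 0
    rtts = 0
    # All connections ramp up in lockstep, so tracking one connection
    # tells us when the whole transfer finishes.
    while sent < per_connection:
        sent += cwnd
        rtts += 1
        cwnd *= 2
    return rtts


print(rtts_with_parallel_connections(685, connections=1))  # 8
print(rtts_with_parallel_connections(685, connections=6))  # 6
```

Six connections shave a couple of round trips off this toy transfer, but only by grabbing a bigger initial share of the link, which is exactly the “let them fight it out” dynamic in the dialogue. It works around slow start rather than fixing it.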

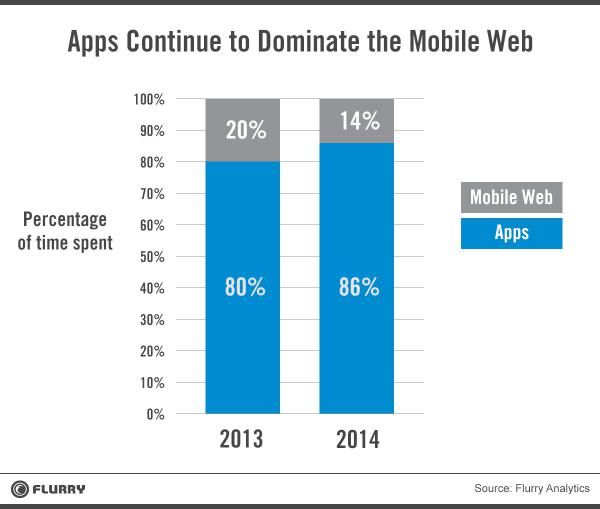

Fast-forward a bit, and many of these tips and hacks have been refined and codified into the latest revision of HTTP, version 2. The old protocol, the hacks to keep it alive, and now the new version of the protocol are all focused on making the web faster. The problem? Accessing the web accounts for only 14% of smartphone usage.

[citation: http://www.flurry.com/bid/109749/Apps-Solidify-Leadership-Six-Years-into-the-Mobile-Revolution]

And therein lies the disconnect. For almost two decades the web was the dominant application platform. Gigantic hardware and software platforms (and companies) have been built to serve the web. (Note that the biggest cloud platform in the world is called “Amazon Web Services”.) TCP/HTTP sufficed for web access, but the constraints of what can be done in a web browser have kept us from evolving protocols designed for the predominant application of the Internet today: native mobile apps.

Quite simply, for any application running in a web browser, there’s nothing that you, the app developer, can do to fix the underlying network transport. But a new dawn is upon us. The dominant platform for applications is no longer the web. It’s native apps on mobile devices. And just like that, we’re free of the shackles of HTTP and even TCP. We app developers, and those who support us, are free to invent protocols that make sense for this spottily connected, packet-lossy, high-latency mobile world. Evolution, by nature, works at a glacial pace. It’s now time for invention, and maybe even a few quantum leaps. PacketZoom has taken the first step toward fixing these problems by designing a more modern protocol purely for the mobile use case. Learn more about it at https://packetzoom.com/learn.html.

About the Author: Chetan Ahuja

Chetan Ahuja is the Founder/CEO/CTO of mobile networking startup PacketZoom Inc. Prior to starting PacketZoom in 2013, Chetan worked on software and networking performance at companies such as Google, AdMob and Riverbed, where he gained a deep appreciation of the key role network speed plays in application performance, and thus in user happiness. He holds a master’s degree in computer science from Michigan State University and a master’s degree in computational chemistry from the Indian Institute of Technology, Mumbai. He likes to spend the entirety of his free time watching old episodes of Monty Python and Star Trek (TNG) with his family or playing ping-pong with co-workers.
