Algorithmia today launched a performance monitoring tool for machine learning (ML) models that tracks algorithm inference and operations metrics generated by the enterprise edition of its namesake platform for building these models.

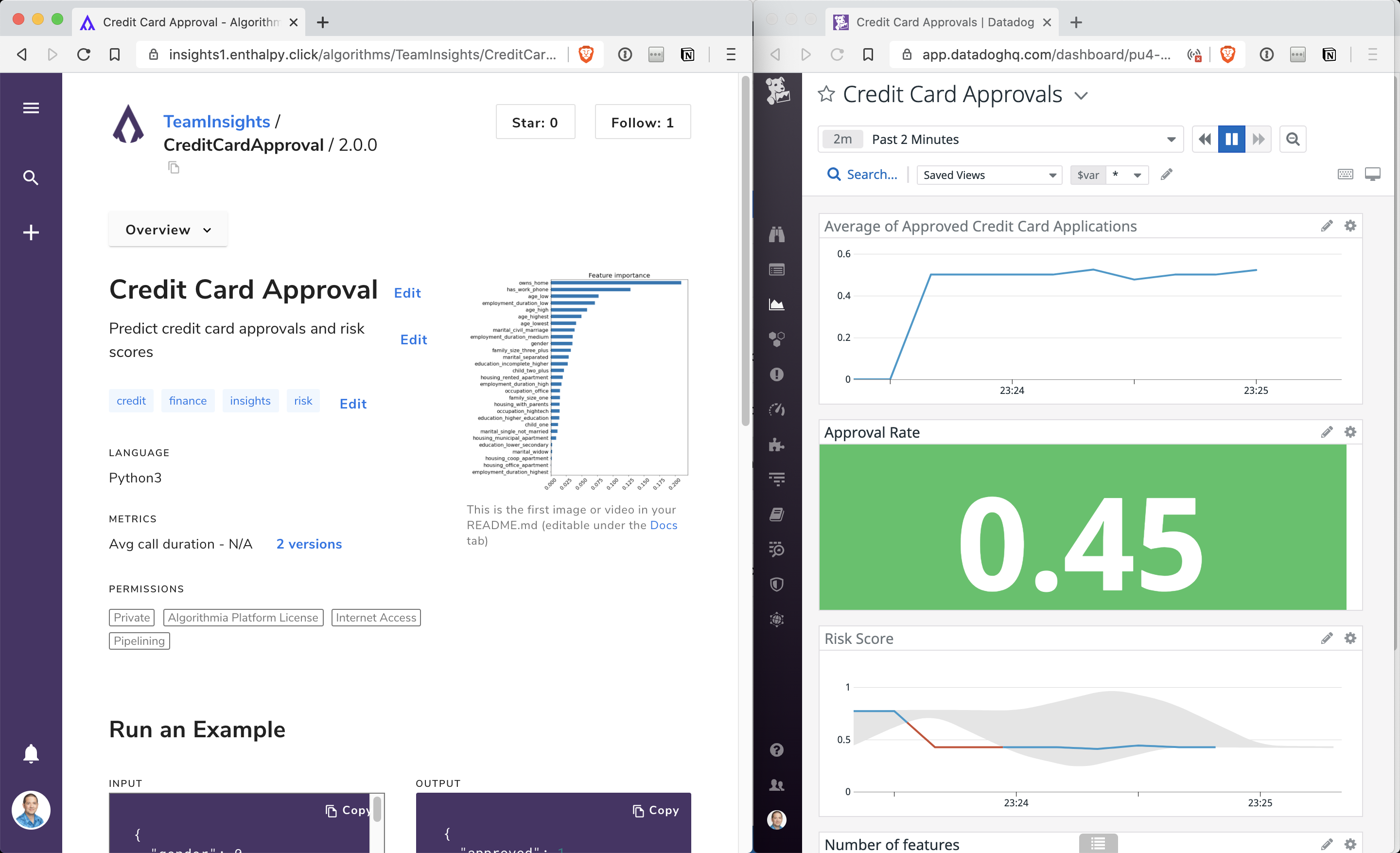

Company CEO Diego Oppenheimer said Algorithmia Insights provides a level of observability into ML models that DevOps teams have come to expect from applications. To make that monitoring capability more accessible, Algorithmia has partnered with Datadog to stream operational and user-defined inference metrics from Algorithmia to the open source Kafka data streaming platform, from which the Datadog Metrics application programming interface (API) can consume that data.
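To make that flow concrete, the following is a minimal sketch of the translation step that sits between Kafka and Datadog. It is not Algorithmia's or Datadog's actual integration. The message schema (`algorithm`, `version`, `timestamp`, `metrics`), the function name `to_datadog_series` and the `algorithmia` metric prefix are all assumptions made for illustration. The output follows the general shape of a Datadog v1 metrics series payload (metric name, timestamped points, type, tags), and the sketch stops short of consuming from Kafka or calling the API.

```python
import json
import time
from typing import Any


def to_datadog_series(message: bytes, prefix: str = "algorithmia") -> dict[str, Any]:
    """Convert one metrics message into a Datadog-style series payload.

    The input schema is hypothetical: a JSON object with "algorithm",
    "version", an optional "timestamp" (epoch seconds) and a "metrics"
    mapping of metric name to numeric value.
    """
    record = json.loads(message)
    timestamp = int(record.get("timestamp", time.time()))
    tags = [f"algorithm:{record['algorithm']}", f"version:{record['version']}"]

    series = []
    for name, value in record["metrics"].items():
        # Skip booleans and non-numeric values; only numbers become gauges.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        series.append(
            {
                "metric": f"{prefix}.{name}",
                "points": [[timestamp, float(value)]],
                "type": "gauge",
                "tags": tags,
            }
        )
    return {"series": series}


if __name__ == "__main__":
    # Hypothetical message, standing in for a record read from a Kafka topic.
    sample = json.dumps(
        {
            "algorithm": "credit_score",
            "version": "1.2.0",
            "timestamp": 1604966400,
            "metrics": {"duration_ms": 42, "risk_score": 0.87, "label": "ok"},
        }
    ).encode()
    print(json.dumps(to_datadog_series(sample), indent=2))
```

In a real deployment a Kafka consumer would feed each message to a function like this and post the result to the metrics API. Keeping the translation as a pure function makes it easy to test without either service running.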

That capability will enable DevOps teams that have adopted the Datadog monitoring platform to employ a single pane of glass to both monitor their IT environments and detect data drift, model drift and model bias within an ML model, Oppenheimer said.

As organizations infuse ML models into applications, many of them are encountering DevOps workflow challenges. It can take six months or more to develop an ML model, which then needs to be embedded within an application that often is updated several times a month. At the same time, organizations are discovering that ML models often need to be replaced, either because assumptions about business conditions have changed or because additional relevant data sources have become available. In many cases, data scientists have adopted DevOps principles to create a set of best practices known as MLOps to update their models.

The alliance with Datadog is significant because it enables IT teams to start melding DevOps and MLOps processes, noted Oppenheimer.

At this juncture, it’s apparent that almost every application will, to varying degrees, eventually be enhanced using machine learning algorithms. Most of the data scientists who create these models, however, have little experience deploying and updating them in a production environment. Even so, it’s now only a matter of time before ML models become just another artifact flowing through a DevOps workflow. Organizations that have invested in ML models will first need to spend some time bringing together a data science culture that is currently distinct from the rest of the IT organization.

To further that goal, Algorithmia earlier this year made it possible to write and run local tests for algorithms using shared local data files. Tools and platforms that have been integrated with that process include PyCharm, Jupyter Notebooks, R Shiny, Android, iOS, Cloudinary, DataRobot and H2O.ai.

A recent survey of more than 100 IT directors conducted by Algorithmia finds the COVID-19 pandemic has required 40% of respondents to make adjustments to at least half of all their AI/ML projects in terms of priority, staffing or funding. Over half the respondents (54%) said AI/ML projects prior to the pandemic were focused on financial analysis and consumer insight. In the wake of the pandemic, respondents said more of their AI focus is now on cost optimization (59%) and customer experience (58%).

The survey also notes respondents were spending at least $1 million annually on AI/ML prior to the pandemic, with 50% saying they are planning to spend more than that going forward. Overall, nearly two-thirds (65%) said that AI/ML projects were at or near the top of their priority list before the pandemic, with 33% saying these applications are now higher on their list.

It may be a while before every DevOps team regularly encounters ML models, but at this point, it’s more a question of when rather than if DevOps processes will expand to include ML model artifacts.