Amazon Web Services (AWS) this week added support for the emerging Model Context Protocol (MCP) to Amazon Q Developer, a suite of artificial intelligence (AI) agents for application developers that can now be more easily integrated with other AI tools and data sources.

Adnan Ijaz, director of product management for developer agents and experiences at AWS, said MCP support in the command line interface (CLI) that AWS makes available allows developers to access any MCP server. That capability will also soon be extended to the integrated development environment (IDE) that AWS provides for Amazon Q Developer.

Originally developed by Anthropic, MCP enables two-way connections between data sources and AI tools. Cybersecurity and IT teams can either expose data through MCP servers or build AI applications, also known as MCP clients, that connect to these servers. That approach makes it possible to query internal systems without blindly scraping data or exposing backend systems. In effect, an MCP server acts as an intelligent gateway, translating natural language prompts into authorized, structured queries.
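To make the gateway idea concrete, here is a minimal sketch in Python of how a server might turn a client request into an authorized, parameterized query. It is a toy built on the standard library, not the MCP SDK or anything AWS ships. The request and response shapes follow the JSON-RPC 2.0 `tools/call` convention that MCP uses, while the `ALLOWED_TOOLS` table, the `count_open_tickets` tool, the `tickets` table and the `handle_request` function are all hypothetical names invented for illustration.

```python
import json
import sqlite3

# Hypothetical allow-list: each tool name maps to one fixed, parameterized query.
# Clients can only trigger these queries; they never send SQL themselves.
ALLOWED_TOOLS = {
    "count_open_tickets": (
        "SELECT COUNT(*) FROM tickets WHERE status = 'open' AND team = ?"
    ),
}


def handle_request(conn, raw_request):
    """Handle one JSON-RPC 2.0 request and return the response as a dict."""
    request = json.loads(raw_request)
    req_id = request.get("id")

    # This sketch only understands the tool invocation method.
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": "Method not found"}}

    params = request.get("params", {})
    sql = ALLOWED_TOOLS.get(params.get("name"))
    if sql is None:
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32602, "message": "Unknown tool"}}

    # The argument is bound as a parameter, never concatenated into the SQL.
    team = params.get("arguments", {}).get("team", "")
    (count,) = conn.execute(sql, (team,)).fetchone()
    return {"jsonrpc": "2.0", "id": req_id,
            "result": {"content": [{"type": "text", "text": str(count)}]}}


def demo():
    """Build an in-memory database and send one example request through it."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE tickets (id INTEGER PRIMARY KEY, team TEXT, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO tickets (team, status) VALUES (?, ?)",
        [("platform", "open"), ("platform", "closed"),
         ("security", "open"), ("platform", "open")],
    )
    request = json.dumps({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "count_open_tickets",
                   "arguments": {"team": "platform"}},
    })
    return handle_request(conn, request)


if __name__ == "__main__":
    print(json.dumps(demo(), indent=2))
```

In this arrangement the AI client decides which tool fits the user's prompt, and the server decides what that tool is permitted to run, so the backend database is never exposed directly.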

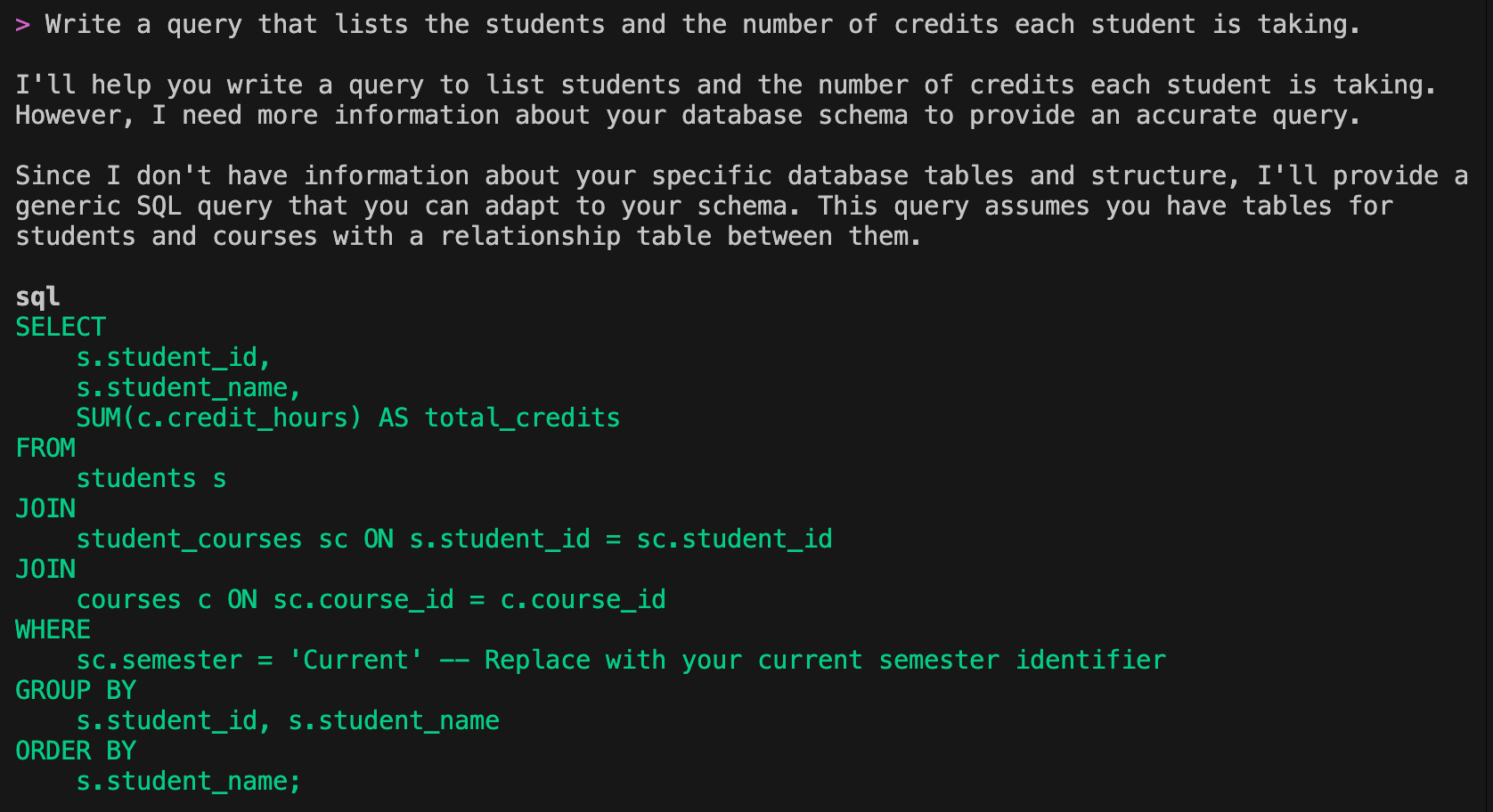

For example, in addition to describing AWS resources, developers can use MCP to describe database schemas and build an application without having to directly invoke a specific variant of SQL or write any Java code that might otherwise be required.
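As a rough illustration of what describing a database schema could involve, the Python sketch below reads table and column definitions from a SQLite database and returns them as plain data that a server could hand to an AI client. It does not show how Amazon Q Developer or any particular MCP server does this; the `describe_schema` function and the `orders` table are hypothetical, and SQLite stands in for whatever database an organization actually runs.

```python
import sqlite3


def describe_schema(conn):
    """Return {table_name: [{"name": ..., "type": ...}, ...]} for user tables."""
    schema = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' "
        "ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        schema[table] = [{"name": col[1], "type": col[2]} for col in columns]
    return schema


def build_example_db():
    """Create a small in-memory database with one hypothetical table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
    )
    return conn


if __name__ == "__main__":
    print(describe_schema(build_example_db()))
```

With a description like this available, an AI agent can work out which tables and columns an application needs without the developer spelling out the SQL dialect by hand.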

The ultimate goal is to reduce the number of bespoke connectors that would otherwise be needed to achieve the same level of integration, said Ijaz.

Exactly how much AI agents are now being used to build software is unclear, but a Futurum Research survey finds 41% of respondents now expect generative AI tools and platforms will be used to generate, review and test code. The one certain thing is that the volume of code being created is going to continue to increase exponentially in the months and years ahead as AI agents are integrated into software engineering workflows.

Each organization will, as a result, need to determine for itself to what degree to rely on AI agents to build and deploy applications. The quality of the code being generated by AI tools can vary widely, and in many instances, organizations are hesitant to deploy code in production environments unless a human developer thoroughly understands how it was constructed.

Nevertheless, as AI coding continues to evolve, the productivity gains are too much to ignore. Application development teams, at the very least, should by now be experimenting with various approaches, especially as it becomes simpler to combine multiple tools to build the next generation of AI-infused applications.

In the meantime, the pace of AI innovation is only going to accelerate. The quality of the code surfaced by AI tools will steadily improve. DevOps teams will soon find themselves building, deploying and updating a wide range of applications at levels of scale that a few short years ago might have seemed unimaginable.

The issue now is determining to what degree the pipelines that currently drive DevOps workflows will need to be revamped to accommodate all that code.