This is part three of a series of three articles on building and delivering amazing apps at the edge. Read part one here, and part two here.

Introduction

There are millions of apps available today, and any user can download and install one with just a few taps on a screen or clicks of a mouse. If you want to build and deliver an app that will amaze users, you need to differentiate it from the rest of the pack.

Over the past 14 years, I’ve been working closely with the top companies delivering the most popular apps, helping them optimize their apps for speed and scale so users get a great experience. In this three-part series, we’ll explore the fundamental steps you must take to build and deliver your own amazing app at scale that will keep users coming back for more.

We’ll break these steps down into three key areas:

- Front end: Covers the parts of the app that end users interact with.

- Back end: Looks at the origin infrastructure required to support the app, including databases, application and web servers, and more.

- Network: The glue that connects the front end and the back end.

In the final article of this series, we’re going to explore four things you can do on the network to optimize your app and make it more amazing:

- Distributed DNS

- Protocol optimization

- Latency optimization

- Edge computing

Distributed DNS

The Domain Name System (DNS) is a critical part of making sure your clients can access your applications. In fact, it’s a very popular attack vector for hackers today, because if your DNS is down, nobody can access your app. Distributing your DNS across many servers helps keep your DNS (and thus your app) fast and highly available, so you should use a DNS vendor with a large network of DNS servers in a wide range of locations.

Validating whether you have a properly distributed DNS infrastructure is rather easy. Just follow these steps:

- Identify how many name servers your domain uses and their IP addresses. Ideally, you want between three and twelve, and within that range more is generally better. You can use command-line tools such as dig and nslookup to identify the name servers of a given domain.

- Use an IP geolocation system to find out the physical location of the name servers. At this point, you can get a rough idea of whether those servers are close enough to your end users. Hint: Some CDNs offer diagnostic tools that can be scripted via APIs to automate IP geolocation.

- If you want to be more thorough, run connectivity tests between your users’ main locations and the name server IP addresses to make sure the network latency (how long it takes for information to travel from the client to the server and back) stays under 100 ms. The lower, the better. Some internet providers and CDNs offer looking glass servers, which let you run connectivity tests that originate from locations around the world.
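The checklist above can be partly automated once you have the measurements. The following is a minimal sketch, assuming you have already collected one round-trip time per name server (for example with dig, mtr or a looking glass). The function name and the input shape are hypothetical. The thresholds come from the text: three to twelve name servers and RTTs under 100 ms.

```python
# Thresholds taken from the checklist: 3 to 12 name servers,
# and round-trip times under 100 ms from the main user locations.
MIN_NAME_SERVERS = 3
MAX_NAME_SERVERS = 12
MAX_RTT_MS = 100.0


def check_dns_distribution(rtts_ms: dict[str, float]) -> list[str]:
    """Return warnings for a hypothetical {name_server: measured_rtt_ms} mapping."""
    warnings = []
    count = len(rtts_ms)
    if not MIN_NAME_SERVERS <= count <= MAX_NAME_SERVERS:
        warnings.append(
            f"{count} name servers; expected {MIN_NAME_SERVERS} to {MAX_NAME_SERVERS}"
        )
    # Flag every name server whose measured RTT is at or above the target.
    for server, rtt in sorted(rtts_ms.items()):
        if rtt >= MAX_RTT_MS:
            warnings.append(f"{server}: {rtt:.0f} ms RTT exceeds {MAX_RTT_MS:.0f} ms")
    return warnings
```

For example, `check_dns_distribution({"ns1.example.net": 24.0, "ns2.example.net": 140.0})` (placeholder names) produces two warnings. One says that two name servers are too few. The other flags `ns2.example.net` for exceeding 100 ms.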

Protocol Optimization

Once your clients know where to connect, you shouldn’t neglect how they connect and which protocol they use. HTTP/2 is the modern, superior network protocol. It uses a more efficient binary format, header compression, a single network connection, stream multiplexing and server push. I was very involved in the early years of HTTP/2 (the standard was approved in February 2015). Since then, I’ve helped some of the largest websites on the internet implement it, and I still spend a considerable amount of my time testing the protocol in all sorts of environments. I’ve shared my findings in conference talks, including at Velocity, and in the O’Reilly book “Learning HTTP/2,” which I co-authored. In a nutshell, most sites can improve page load performance by 5% to 15% just by switching from HTTP/1.1 to HTTP/2. That switch is as simple as flipping a switch if your website already uses HTTPS.
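Browsers only use HTTP/2 over TLS, and they agree on it with the server during the TLS handshake through ALPN (Application-Layer Protocol Negotiation). You can quickly check whether your server has the switch flipped with the following sketch using Python's standard `ssl` module. The helper names are hypothetical, and the host you pass in (for example `example.com`) is a placeholder. The check needs network access.

```python
import socket
import ssl
from typing import Optional


def make_alpn_context() -> ssl.SSLContext:
    """TLS client context that offers HTTP/2 ("h2") first, then HTTP/1.1, via ALPN."""
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Return the application protocol the server picked during the TLS handshake."""
    ctx = make_alpn_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            # "h2" means the server agreed to speak HTTP/2 on this connection;
            # "http/1.1" or None means it did not.
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.com")` returns `"h2"` for a server with HTTP/2 enabled.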

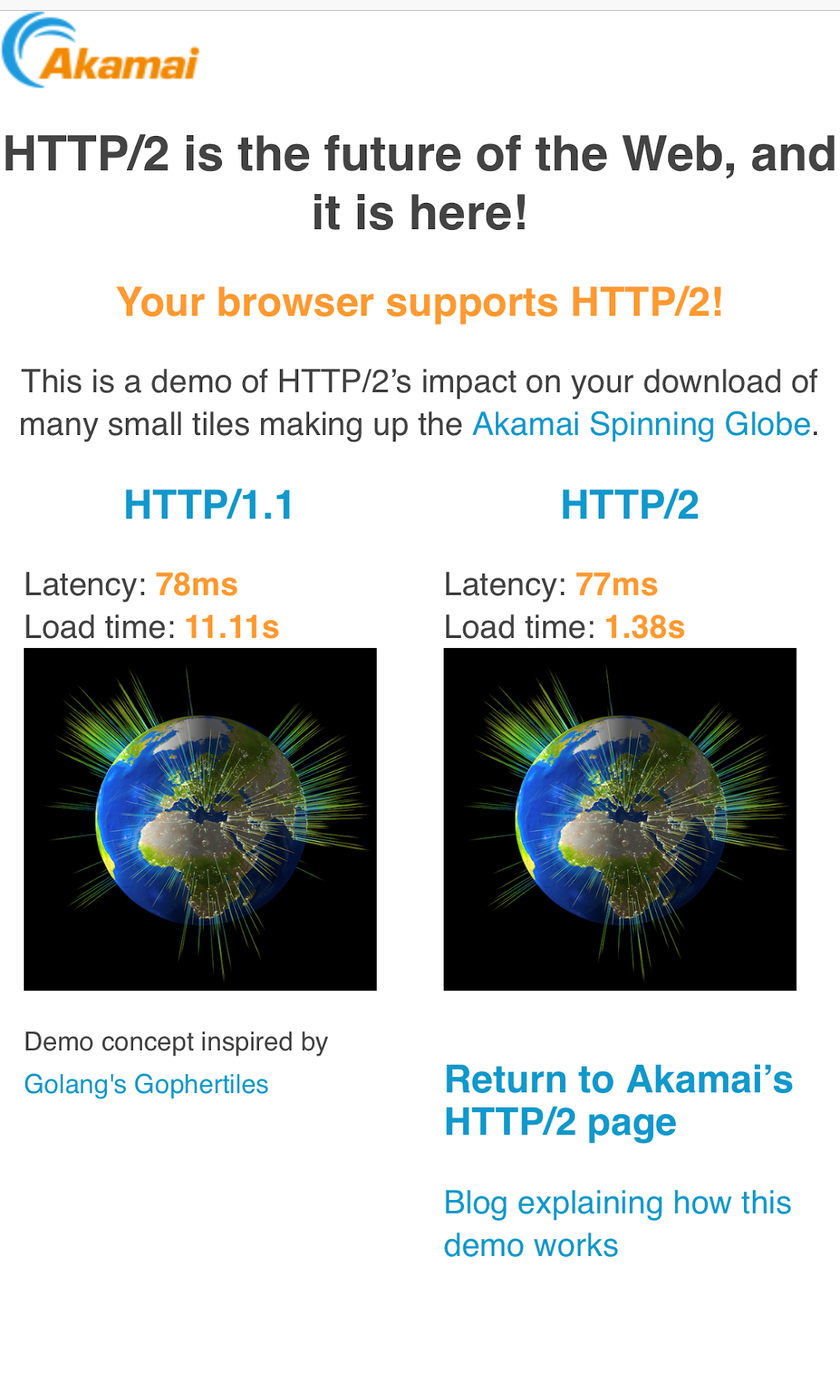

To illustrate that improvement, I created a demo site that tests the download speed of many small images over HTTP/1.1 and over HTTP/2 and displays the differences.

As you can see in Figure A, the content loaded eight times faster over HTTP/2 than over HTTP/1.1 on my mobile phone over an LTE connection. That’s a huge improvement considering how simple it is to implement HTTP/2.
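A back-of-the-envelope model helps explain why a page with many small images benefits so much. It assumes, as major browsers commonly do, about six parallel HTTP/1.1 connections per host, each carrying one request at a time (no pipelining). HTTP/2 instead multiplexes all requests over a single connection. The model is a deliberate simplification, and the function names are hypothetical.

```python
import math


def http1_round_trips(num_requests: int, connections_per_host: int = 6) -> int:
    """Request rounds needed when each connection carries one request at a time."""
    return math.ceil(num_requests / connections_per_host)


def http2_round_trips(num_requests: int) -> int:
    """With multiplexing, all requests can be in flight on one connection at once."""
    return 1 if num_requests > 0 else 0


def request_time_ms(round_trips: int, rtt_ms: float) -> float:
    """Time spent waiting on the network, ignoring transfer time and handshakes."""
    return round_trips * rtt_ms
```

With 100 images and an 80 ms round trip, HTTP/1.1 needs 17 request rounds, or about 1,360 ms of waiting. HTTP/2 needs one round, or about 80 ms. Real gains are smaller because the model ignores bandwidth, handshakes and TCP slow start, but it shows why the demo favors HTTP/2.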

According to the HTTP Archive, as of June 2018, 20% of hosts support HTTP/2, but those hosts are responsible for 40% of all requests—meaning HTTP/2 powers some of the web’s most popular sites.

However, as explained in the HTTP/2 Performance chapter of the O’Reilly book Learning HTTP/2, many factors affect how much HTTP/2 helps. You can control some of them. Others, such as network packet loss, are much harder to tackle and can hurt HTTP/2 performance because HTTP/2 runs over a single TCP connection.

Note: TCP stands for Transmission Control Protocol. Some parts of its implementation (e.g., slow start and its cumbersome congestion control algorithms) can impact HTTP/2 performance because all communication with the server goes through a single TCP connection.
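The following toy model illustrates why packet loss hurts a single connection. TCP delivers bytes strictly in order, so when one packet is lost, every packet sent after it waits for the retransmission, no matter which HTTP/2 stream it belongs to. This problem is known as TCP head-of-line blocking, and it is what QUIC is designed to avoid. With one connection per stream, as with parallel HTTP/1.1 connections, only the affected stream waits. The model ignores retransmission timers and congestion window reductions, and the function names are hypothetical.

```python
def interleave(num_streams: int, packets_per_stream: int) -> list[int]:
    """Round-robin multiplexing: the stream ID of each packet, in send order."""
    return [s for _ in range(packets_per_stream) for s in range(num_streams)]


def stalled_streams_single_connection(packets: list[int], lost_index: int) -> set[int]:
    """Streams blocked when one in-order connection loses packets[lost_index].

    Every packet at or after the lost one is held back until the retransmission
    arrives, so any stream with data in that range is stalled.
    """
    return set(packets[lost_index:])


def stalled_streams_separate_connections(packets: list[int], lost_index: int) -> set[int]:
    """With one connection per stream, only the stream that lost a packet waits."""
    return {packets[lost_index]}
```

With four multiplexed streams (`interleave(4, 3)`) and the second packet lost, all four streams stall on the shared connection. With one connection per stream, only stream 1 stalls.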

The future of the web is HTTP/3, which maps HTTP onto QUIC, a newer and more efficient transport protocol that runs over UDP. However, as of April 2019, HTTP/3 is still in draft state and hasn’t been approved as a standard.

Ideally, your servers should monitor the network connection and use different network protocols depending on the current conditions. Certain content delivery networks (CDNs) offer this functionality. For example, to maximize throughput, a top CDN provider uses custom software on its edge servers to dynamically use different network protocols, TCP settings and congestion control algorithms, depending on the specific conditions of each individual connection the server makes with an end user. In short, using the optimal protocol makes your app faster and more amazing.
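The following sketch shows per-connection tuning on a Linux server. The policy function and its thresholds are hypothetical and for illustration only, because a CDN would tune such choices from its own measurements. Applying the result uses the real `TCP_CONGESTION` socket option, which Python exposes on Linux. Unprivileged processes may only select algorithms the kernel allows, so the sketch falls back gracefully.

```python
import socket

# Hypothetical thresholds, for illustration only.
LOSSY_LINK_LOSS_RATE = 0.01  # 1% packet loss
HIGH_RTT_MS = 150.0


def choose_congestion_control(rtt_ms: float, loss_rate: float) -> str:
    """Pick a Linux congestion control algorithm name for one connection."""
    # Loss-based CUBIC backs off on every loss; BBR estimates bottleneck
    # bandwidth and RTT instead, which tends to help on lossy or long paths.
    if loss_rate >= LOSSY_LINK_LOSS_RATE or rtt_ms >= HIGH_RTT_MS:
        return "bbr"
    return "cubic"


def apply_congestion_control(sock: socket.socket, algorithm: str) -> bool:
    """Set the algorithm on a TCP socket where the OS supports it (Linux only)."""
    option = getattr(socket, "TCP_CONGESTION", None)
    if option is None:
        return False  # Not available on this platform.
    try:
        sock.setsockopt(socket.IPPROTO_TCP, option, algorithm.encode())
    except OSError:
        # The algorithm may not be loaded, or not allowed for this process.
        return False
    return True
```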

Latency Optimization

Latency is a physical limitation of nature that limits throughput on computer networks. You probably know that when you are outdoors during a lightning storm, you can count the seconds until you hear the thunder to estimate how close the storm is (and if you count less than a second, you’d better get inside!). In computer terms, latency is commonly measured as the time it takes to send a network packet to another system and receive a reply. This concept is known as round-trip time (RTT) and is typically measured in milliseconds. You can use network tools such as ping or mtr to easily measure the RTT between two systems.
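ping uses ICMP, which can be blocked by firewalls or require raw-socket privileges. A rough alternative is to time a TCP handshake: the SYN and SYN-ACK exchange takes about one round trip. The following sketch assumes you pass an IP address (a hostname would add DNS lookup time) and a port that accepts connections. The function names are hypothetical.

```python
import socket
import statistics
import time


def tcp_handshake_rtt_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time one TCP connect, which takes roughly one round trip."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0


def median_rtt_ms(host: str, port: int = 443, samples: int = 5) -> float:
    """Median of several handshakes, so a single slow sample doesn't skew the result."""
    return statistics.median([tcp_handshake_rtt_ms(host, port) for _ in range(samples)])
```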

Latency has a huge impact on performance because the further away you are from the server you want to connect to, the slower the communication will be. As a rule of thumb, you want your latency to stay well under 100 ms. The easiest ways to achieve that are to use the local device cache to avoid extra network connections to the server, and to use a CDN to bring your content closer to the end user.
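Distance alone sets a floor on RTT that no amount of tuning can remove. Light in optical fiber travels at roughly two thirds of its speed in a vacuum, about 200 km per millisecond. The following minimal sketch computes that lower bound. Real routes are longer than straight lines and add queuing and processing delays, so measured RTTs are higher.

```python
# Light in optical fiber covers roughly 200 km per millisecond (about 2/3 of c).
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber: there and back, no detours or queuing."""
    return 2 * distance_km / FIBER_KM_PER_MS
```

A user 6,000 km from the origin can never see less than 60 ms of RTT, while an edge server 100 km away has a floor of about 1 ms. That floor is why moving servers closer to users is the main lever for reducing latency.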

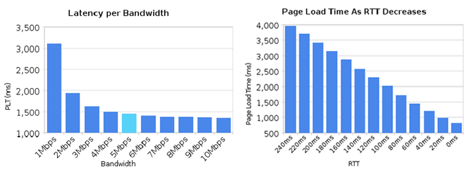

Here is a graph (see Figure B) showing that latency plays a larger role in page load time than bandwidth does. As you can see, doubling the bandwidth from 5 Mbps to 10 Mbps results in just a 5% improvement in page load times, and any bandwidth increase beyond 10 Mbps doesn’t make a big difference. Reducing latency, however, drastically improves page load times. That makes your app more amazing for your end users.
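A crude model reproduces the shape of Figure B, though not its numbers. Page load time is modeled as the time spent waiting on sequential round trips (DNS, TCP, TLS, dependent requests) plus the raw transfer time. The page size (500 KB) and round-trip count (30) below are hypothetical, and the model ignores TCP slow start, which makes real pages even more latency-bound.

```python
def load_time_s(round_trips: int, rtt_ms: float, page_kb: float, bandwidth_mbps: float) -> float:
    """Crude page-load estimate: time waiting on round trips plus raw transfer time."""
    latency_s = round_trips * rtt_ms / 1000.0
    transfer_s = (page_kb * 8 * 1000) / (bandwidth_mbps * 1_000_000)  # kilobytes to bits
    return latency_s + transfer_s


# Hypothetical page: 30 sequential round trips and 500 KB of data.
baseline = load_time_s(30, 100, 500, 5)    # about 3.8 s
double_bw = load_time_s(30, 100, 500, 10)  # about 3.4 s: doubling bandwidth helps a little
half_rtt = load_time_s(30, 50, 500, 5)     # about 2.3 s: halving latency helps much more
```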

Edge Computing

If you are a developer or a DevOps engineer (or just love tech), chances are you are in the top 1% of the world’s population in terms of mobile computing power. But tasks you take for granted, such as resizing images on a mobile phone, take much longer for the many millions of internet users with less powerful mobile devices.

So, what can we do if running logic at the origin causes delays because of network latency, and running logic on the client side may be too slow on less powerful devices? The solution is edge computing, which moves the origin logic to a server close enough to end users that latency does not impact performance. CDNs are in the best position to offer this proximity because of their naturally distributed architecture. A CDN can set cookies, rewrite HTML, and resize images and deliver versions optimized for your users’ screen sizes, so those images don’t take long to display on low-end mobile devices with limited CPUs.
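As an illustration of the image case, here is a minimal sketch of logic an edge server might run. It picks the smallest pre-rendered image variant that still fills the user's screen, so the phone never downloads or downscales a larger file than it needs. The variant widths and function name are hypothetical. The sketch also assumes the viewport width and device pixel ratio are known, for example from Client Hints request headers such as `Viewport-Width` and `DPR` where the browser sends them.

```python
# Hypothetical widths (in pixels) of the pre-rendered variants stored for each image.
VARIANT_WIDTHS = (320, 640, 960, 1280, 1920)


def pick_variant_width(viewport_px: int, device_pixel_ratio: float = 1.0,
                       widths: tuple[int, ...] = VARIANT_WIDTHS) -> int:
    """Smallest stored width that still fills the screen at its pixel density."""
    needed = viewport_px * device_pixel_ratio
    for width in sorted(widths):
        if width >= needed:
            return width
    # No variant is large enough: fall back to the biggest one available.
    return max(widths)
```

A phone with a 360 px viewport at a pixel ratio of 2 needs 720 physical pixels, so it gets the 960 px variant rather than the 1920 px original.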

Edge computing is the next step beyond cloud computing. Choose a CDN that lets you run business logic as close as possible to your users, so your app is fast and can scale without burdening your infrastructure.

Summary

In this series of articles, we reviewed some basic things you should consider if you want to build and deliver amazing apps at scale. This is not a comprehensive list of all the things you can do but provides you with some of the most impactful areas for app improvement.