Nebulon has emerged from stealth to launch a hybrid cloud computing platform that enables workloads to be processed in the cloud while data continues to be stored on a local application server.

Company COO Craig Nunes said the Cloud-Defined Storage platform employs PCIe cards embedded with an ASIC-based Nebulon Services Processing Unit (SPU) to connect local storage systems to a cloud-based control plane dubbed Nebulon ON. That control plane can span Amazon Web Services (AWS) and Google Cloud Platform (GCP) services.

The PCIe card is installed in an application server residing in a local data center, where it connects to solid-state drives (SSDs) installed on the server. The Nebulon SPU offloads compression, encryption, deduplication, erasure coding, snapshots and data mirroring.
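To make two of those data services concrete, the following is a minimal Python sketch of block-level deduplication combined with compression. It is purely illustrative and does not represent how the Nebulon SPU implements these functions; the `DedupStore` class, its methods and the 4 KB block size are hypothetical names and values chosen for the example.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # hypothetical block size, chosen only for illustration


class DedupStore:
    """Toy content-addressed block store (an assumption, not Nebulon's design)."""

    def __init__(self):
        # Maps a block's SHA-256 digest to its zlib-compressed contents.
        self.blocks = {}

    def write(self, data):
        """Split data into fixed-size blocks and return the list of digests."""
        recipe = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            # Deduplication: store a block only the first time it is seen.
            if digest not in self.blocks:
                self.blocks[digest] = zlib.compress(block)
            recipe.append(digest)
        return recipe

    def read(self, recipe):
        """Rebuild the original bytes from a list of block digests."""
        return b"".join(zlib.decompress(self.blocks[d]) for d in recipe)


store = DedupStore()
payload = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE
recipe = store.write(payload)
restored = store.read(recipe)
```

In this sketch, the four logical blocks in `payload` are stored as only two unique compressed blocks, and `read` reconstructs the original bytes. Performing this kind of hashing and compression on a dedicated card rather than on the host is what the article means by offloading.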

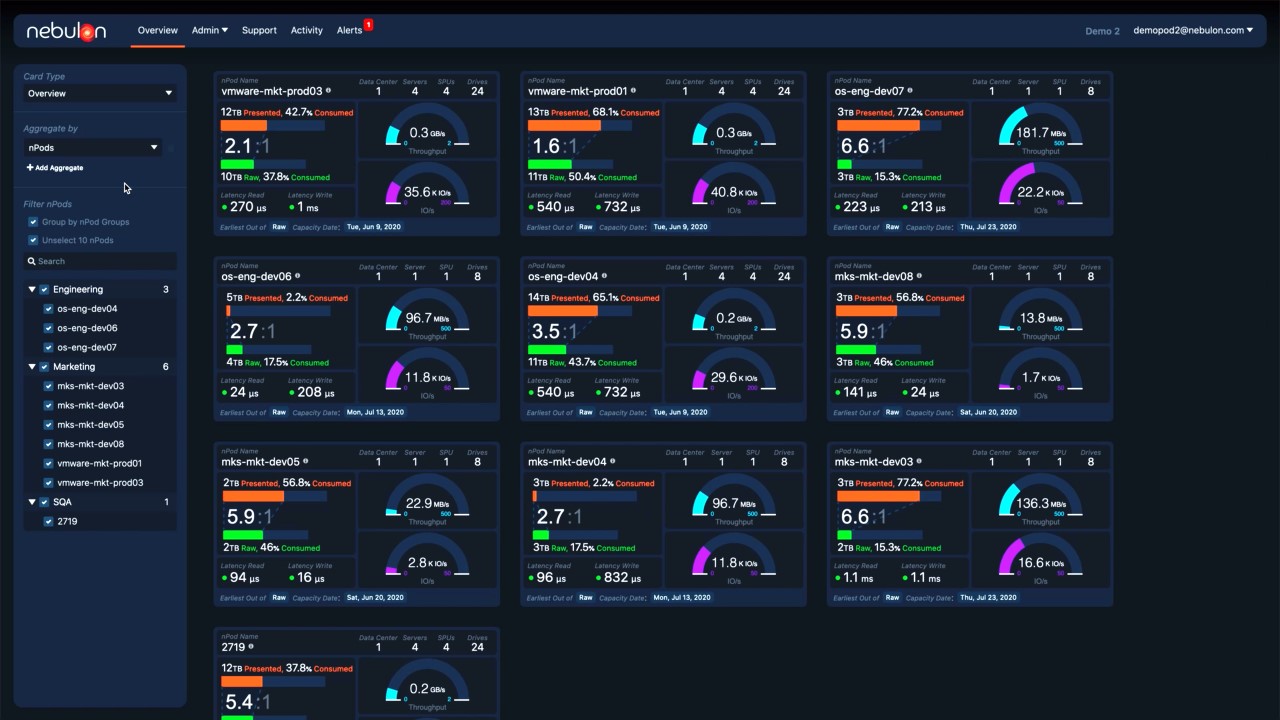

The Nebulon SPU also transmits storage, server and application metrics to Nebulon ON, where machine learning algorithms are applied to surface recommendations to improve performance.

Nunes said both Hewlett Packard Enterprise (HPE) and Supermicro have already agreed to resell the Cloud-Defined Storage platform.

At its core, the Cloud-Defined Storage platform takes hyperconverged infrastructure (HCI) to another level. Instead of combining compute and storage in a local data center, however, the platform shifts compute processing to the cloud, said Nunes. That approach, he noted, makes it possible to take advantage of inexpensive compute resources in the cloud without losing control over data.

In addition, Nunes noted, the Cloud-Defined Storage platform ensures application service levels are maintained while reducing costs by eliminating the need to transfer massive amounts of data into and out of the public cloud.

Organizations that work within highly regulated industries can now take advantage of cloud resources while continuing to meet compliance requirements, he added.

Finally, the Nebulon Cloud-Defined Storage platform can support both monolithic and microservices-based applications regardless of the type of virtual machine or container employed.

At a time when many organizations are under increased pressure to reduce the total cost of IT, there’s growing interest in using cloud computing to cut costs. However, for many organizations, moving data into the cloud is problematic for a host of performance, security and compliance reasons.

A hybrid cloud computing architecture that doesn’t require data to be stored in, or moved into and out of, a cloud could prompt organizations to re-evaluate their overall cloud computing strategies. Organizations beyond those in highly regulated industries could conclude they would prefer to retain control over their data.

Cloud service providers are, of course, banking on massive amounts of data being stored in the cloud. They have long argued that as the amount of data stored in the cloud grows exponentially, it makes more sense to deploy applications in the cloud as close as possible to that data. Each new application deployed in the cloud then increases the data gravity that cloud exerts on other application deployment decisions.

It’s not likely that hybrid cloud platforms such as the Nebulon Cloud-Defined Storage system will completely reverse that trend. However, their arrival does signal that data gravity in the cloud may not be as inexorable a force as many cloud service providers have long assumed.