New Relic this week added more artificial intelligence (AI) capabilities to its observability platform that detect gaps in alert coverage and help resolve them.

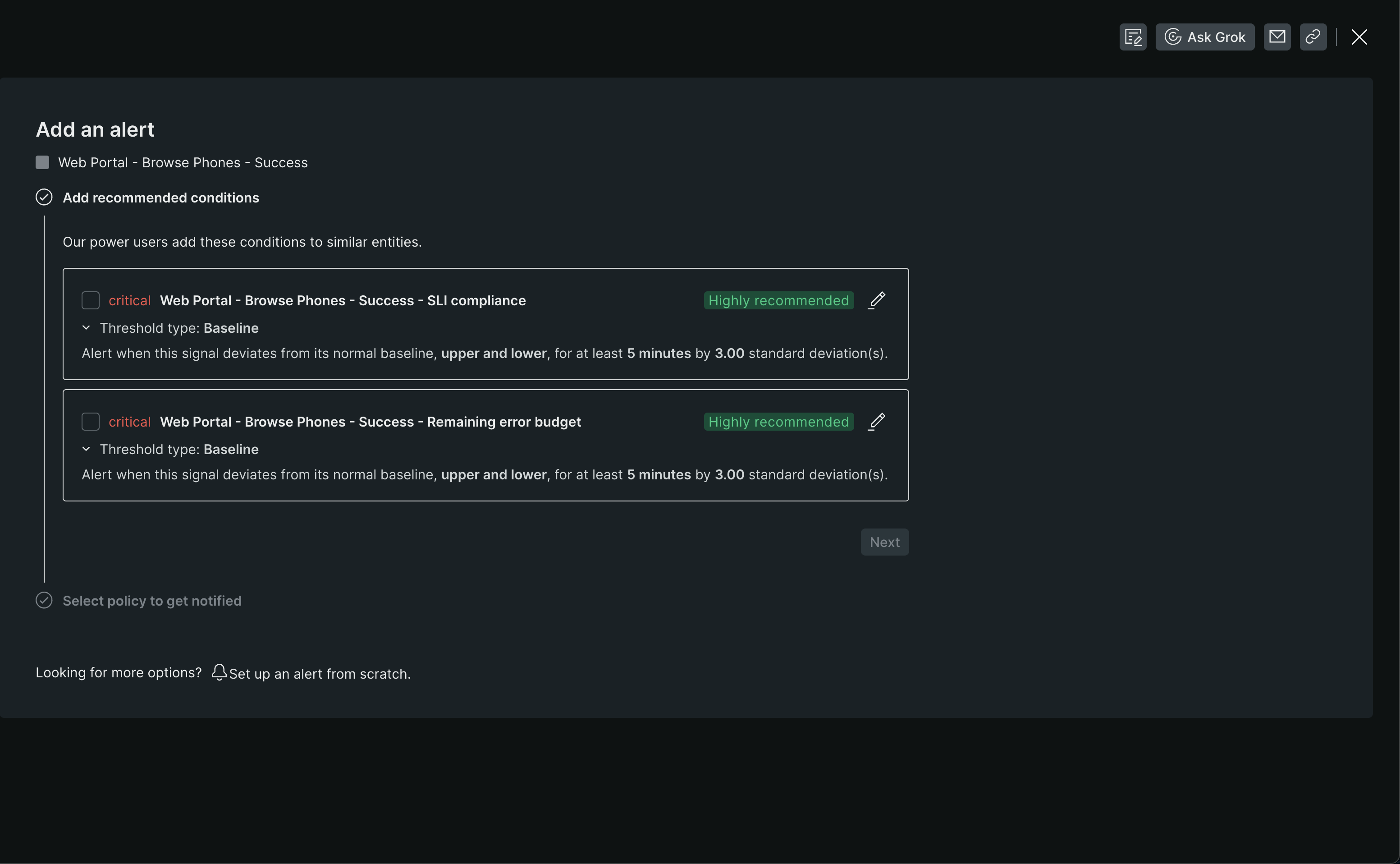

Camden Swita, a senior product manager for New Relic, said it’s not uncommon for DevOps teams to discover issues that their existing alerts were never designed to catch. To address this, New Relic is adding, at no additional cost, machine learning algorithms that identify anomalies indicative of those potential issues. The platform then surfaces recommended alerts that DevOps teams can apply as-is or customize as they see fit, he added.

Those machine learning algorithms help ensure DevOps teams are tracking the right thresholds, so there are no blind spots that could adversely affect application performance, noted Swita.
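New Relic has not published the algorithms behind these recommendations. As a rough illustration of the general idea, the sketch below derives a suggested static alert threshold from historical metric samples using a simple mean plus k standard deviations rule, then flags recent samples that exceed it. The function names, the `k=3.0` default and the sample latency data are all hypothetical.

```python
import statistics


def recommend_threshold(samples, k=3.0):
    """Suggest a static alert threshold as mean + k * population std dev.

    Hypothetical rule for illustration only; not New Relic's algorithm.
    """
    mean = statistics.fmean(samples)
    std = statistics.pstdev(samples)
    return mean + k * std


def find_anomalies(samples, threshold):
    """Return the indices of samples that exceed the threshold."""
    return [i for i, value in enumerate(samples) if value > threshold]


# Hypothetical response-time history, in milliseconds
baseline = [120, 118, 125, 130, 122, 119, 127, 124]
threshold = recommend_threshold(baseline)

# Hypothetical recent samples, one of which spikes well above the baseline
recent = [121, 126, 310, 123]

print(f"suggested threshold: {threshold:.1f} ms")
print("anomalous samples at indices:", find_anomalies(recent, threshold))
```

A production system would more likely use seasonality-aware baselines than a single static cutoff, but the shape is the same: learn what normal looks like, then propose an alert condition a team can accept or tune.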

New Relic is employing both predictive and generative AI to enable DevOps teams to detect and resolve issues faster. The company has used machine learning algorithms for predictive AI for several years and, more recently, began providing early access to a generative AI tool dubbed Grok.

The overall goal is to make the capabilities of an observability platform more accessible, Swita said. That is accomplished by surfacing issues without requiring a software engineer to craft intricate queries against metrics, logs and traces, he noted.

The AI capabilities also reduce alert noise: by making it easier to understand the root cause of an issue, they cut the total number of alerts that might otherwise be sent, he added.
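One common way to cut alert volume is to correlate related alerts into a single incident. The minimal sketch below groups alerts raised by the same entity within a sliding time window; it is a generic technique assumed for illustration, not a description of how New Relic correlates alerts, and the `Alert` type, `correlate` function, 300-second window and sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    entity: str     # hypothetical: the service or host that raised the alert
    timestamp: int  # seconds since the epoch
    message: str


def correlate(alerts, window=300):
    """Group alerts on the same entity that fire within `window` seconds
    of the most recent alert already in that entity's open group."""
    groups = []
    open_group = {}  # entity -> index of its most recent group in `groups`
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        idx = open_group.get(alert.entity)
        if idx is not None and alert.timestamp - groups[idx][-1].timestamp <= window:
            groups[idx].append(alert)  # same incident: extend the group
        else:
            groups.append([alert])     # start a new incident for this entity
            open_group[alert.entity] = len(groups) - 1
    return groups


# Hypothetical alert stream
alerts = [
    Alert("checkout-db", 1000, "high latency"),
    Alert("search-api", 1050, "high latency"),
    Alert("checkout-db", 1060, "error rate up"),
    Alert("checkout-db", 1120, "connection pool exhausted"),
    Alert("checkout-db", 5000, "high latency"),
]
groups = correlate(alerts)
print(f"{len(alerts)} alerts -> {len(groups)} incidents")
```

Grouping by entity and time alone is crude; real correlation engines typically also use topology and causal signals to decide which alerts share a root cause.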

While there’s no doubt AI will help democratize DevOps by making it easier to write scripts and manage workflows, it might be a while before DevOps teams trust the recommendations AI models make. Those models typically need time to learn how an IT environment functions, and not every initial recommendation will be valid. In time, however, DevOps teams will find themselves increasingly supervising processes that AI models execute.

In addition, there are competing approaches to building the large language models (LLMs) that drive generative AI. For example, it’s not yet clear whether chaining LLMs together will prove more efficient than creating a single large ‘parent’ LLM with ‘child’ relationships to smaller LLMs, noted Swita.

In the meantime, DevOps teams would be well-advised to identify the tasks that are likely to be automated by AI models. Many of them will be manual tasks that most software engineers don’t especially relish, so there’s naturally a lot of interest in AI. It remains to be seen, however, whether various forms of AI might one day automate an entire DevOps workflow. In fact, most DevOps engineers are going to set a high bar: at the very least, AI models will be expected to perform a task as well as humans do before they are trusted completely.