DevOps is a natural target for AI-driven efficiencies, as it involves frequently repeated processes that generate mountains of data. It seems reasonable to expect that AI will play an important role in DevOps, just as it does in other domains where decisions must be made based on large volumes of data.

Definitions of AI vary considerably, so you can’t be blamed if you’ve sat through a discussion of AI and DevOps and still don’t understand exactly how the two intersect. The bottom line is that AI will prove most useful where lots of data is generated by, or passes through, a repeatable process. Humans are pretty good at identifying heuristics that help them make reasonable decisions based on patterns in data. But machine learning (ML) techniques promise to tease out the underlying characteristics of the data, which are often impossible for humans to observe.

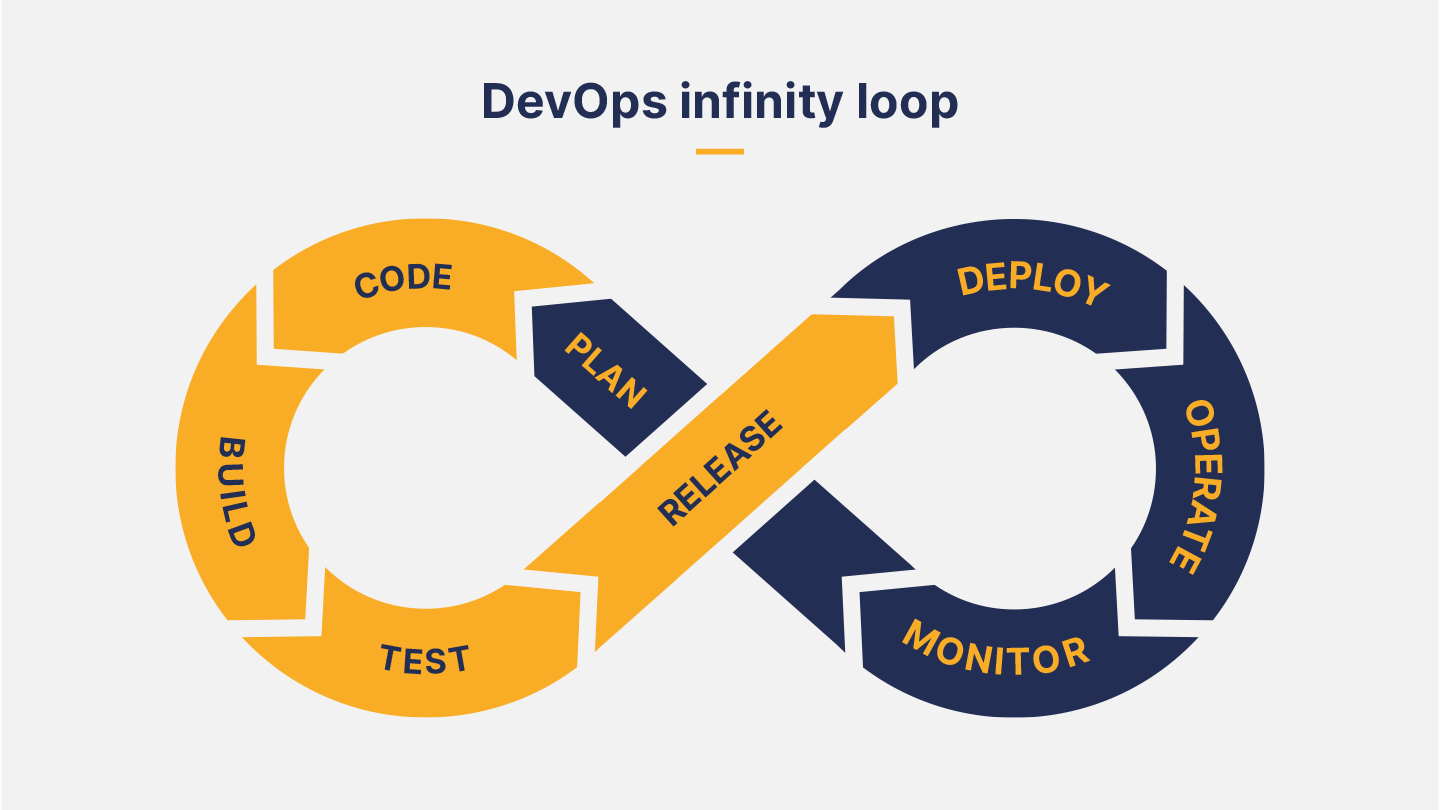

There are two main points in the DevOps process where large amounts of data are generated and where machine learning would be most useful: testing and release, and monitoring.

Testing and Releasing

Deployment packages: AI can analyze trends in what is actually being deployed. My company, Gearset, is a DevOps solution for development on the Salesforce platform. In that context, AI could track the items of Salesforce metadata a team usually deploys and use them to optimize requests for retrieving metadata from the team’s org. That would speed up comparisons and deployments and, ultimately, increase release cadence.
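To make the idea concrete, here is a minimal sketch that uses a plain frequency count rather than machine learning: it ranks the metadata types that appear in at least half of a team’s past deployments, so that retrieval could start with those. The input format (one list of metadata type names per deployment) and the `frequent_metadata_types` helper are hypothetical and not Gearset’s implementation; a trained model would replace the fixed `min_share` threshold with learned predictions.

```python
from collections import Counter


def frequent_metadata_types(deployments, min_share=0.5):
    """Return metadata types present in at least `min_share` of past deployments.

    Hypothetical input format: `deployments` is a list where each entry is an
    iterable of metadata type names (e.g. "ApexClass") from one deployment.
    """
    if not deployments:
        return []
    counts = Counter()
    for items in deployments:
        # Count each type at most once per deployment.
        counts.update(set(items))
    threshold = min_share * len(deployments)
    frequent = [t for t, c in counts.items() if c >= threshold]
    # Most frequently deployed types first; ties broken alphabetically.
    return sorted(frequent, key=lambda t: (-counts[t], t))


# Illustrative (made-up) deployment history.
history = [
    ["ApexClass", "CustomObject"],
    ["ApexClass", "Flow"],
    ["ApexClass", "CustomObject", "Layout"],
    ["Flow"],
]
print(frequent_metadata_types(history))
```

With this history, `Layout` appears in only one of four deployments and falls below the threshold, so it would be retrieved on demand rather than up front.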

Deployment errors: At Gearset, increasing deployment success rates is a constant focus. To that end, we aggregate and analyze data from deployment errors to identify the most common deployment-blocking errors. We then build problem analyzers that suggest automatic fixes to users. With machine learning running on telemetry data, we could more rapidly identify other patterns of use that trigger common deployment errors and have it suggest new problem analyzers for us to build, raising deployment success rates even further.
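The aggregation step can be sketched as follows, assuming raw error messages are available as strings. The sketch normalizes each message into a template, replacing quoted names and numbers with placeholders, so that errors differing only in details are counted together. The regular expressions, the `error_template` and `most_common_errors` helpers, and the sample messages are all hypothetical; a real pipeline would need normalization rules tuned to the platform’s actual error formats.

```python
import re
from collections import Counter

# Hypothetical normalization rules, applied in order: quoted names first,
# then standalone numbers.
_PATTERNS = [
    (re.compile(r"'[^']*'"), "'<name>'"),
    (re.compile(r"\b\d+\b"), "<n>"),
]


def error_template(message):
    """Replace variable details in an error message with placeholders."""
    template = message
    for pattern, placeholder in _PATTERNS:
        template = pattern.sub(placeholder, template)
    return template


def most_common_errors(messages, top=3):
    """Group raw messages by template and return the `top` most frequent."""
    return Counter(error_template(m) for m in messages).most_common(top)


# Illustrative, made-up messages (not real platform error text).
messages = [
    "Field 'Status__c' does not exist on line 12",
    "Field 'Region__c' does not exist on line 40",
    "Test coverage is 61%, at least 75% required",
]
print(most_common_errors(messages))
```

Grouping by template is what lets two errors about different fields count as one recurring problem, which is the kind of signal that could point to a new problem analyzer.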

Static code analysis: Static code analysis generates data about the quality and security of new code, judged against a ruleset. Within Gearset, Salesforce developers can choose and customize the PMD rules they want in their ruleset, so they’re warned about the code quality issues they care about. But AI could provide richer insights, such as identifying high-priority areas for improvement and refactoring based on trends in code quality and security.

Monitoring

Infrastructure monitoring. DevOps monitoring generates huge amounts of data, and AI-driven insights into performance logs make it easier to know what to focus on. Pattern recognition can also help predict usage growth and inform capacity planning.
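As a deliberately simple stand-in for that kind of pattern recognition, the sketch below fits a straight-line trend to daily usage figures with ordinary least squares and estimates how many days remain before a capacity limit is reached. The helper names and the assumption of linear growth are illustrative only; real usage is often seasonal or bursty, which a proper forecasting model would need to account for.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def days_until_capacity(daily_usage, capacity):
    """Estimate days after the last observation until usage reaches `capacity`.

    Assumes growth stays linear (a hypothetical simplification). Returns None
    if the fitted trend is flat or falling, and 0.0 if capacity is already hit.
    """
    if len(daily_usage) < 2:
        raise ValueError("need at least two observations to fit a trend")
    days = list(range(len(daily_usage)))
    a, b = linear_fit(days, daily_usage)
    if b <= 0:
        return None
    crossing_day = (capacity - a) / b
    return max(0.0, crossing_day - days[-1])


# Illustrative usage figures, e.g. GB of storage per day.
print(days_until_capacity([100, 110, 120, 130, 140], capacity=200))
```

Here the trend grows by 10 units a day from 140, so the limit of 200 is projected six days out.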

Security. This has been a key area for innovation in recent years, with the development of products that use machine learning to enhance threat detection, intrusion detection and vulnerability database compilation.

Unit testing. Gearset provides tools for monitoring code in Salesforce, such as automated unit testing, which reveals when code is no longer executing as intended. AI could identify patterns in the logs and suggest areas of the codebase that appear to require attention.

Change monitoring. Change monitoring and backup jobs track changes in the codebase, whether expected or unexpected. Gearset currently has smart alerts on backup jobs that users can set up manually to be warned of unusual changes or deletions, both in the kinds of records being deleted and in how many. But AI could automatically spot anomalous patterns of churn that should be investigated.
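One simple way to flag unusual churn, sketched here under the assumption that each backup run records how many records of each object type were deleted, is a z-score test: a deletion count is flagged when it lies more than three standard deviations from that type’s historical mean, and a type with no deletion history is flagged as an unusual kind of record. The function names, data layout, and threshold are hypothetical; this is a basic statistical baseline rather than the ML approach the paragraph anticipates.

```python
import statistics


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations
    from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return latest != mean  # any change from a constant history is unusual
    return abs(latest - mean) / spread > threshold


def anomalous_object_types(history_by_type, latest_by_type, threshold=3.0):
    """Return object types whose latest deletion count looks unusual.

    Hypothetical layout: both arguments map an object type name to deletion
    counts (a list of past counts, or the latest single count).
    """
    flagged = []
    for obj, latest in latest_by_type.items():
        history = history_by_type.get(obj, [])
        if not history:
            flagged.append(obj)  # deletions of a type never deleted before
        elif is_anomalous(history, latest, threshold):
            flagged.append(obj)
    return sorted(flagged)


# Illustrative, made-up deletion counts per backup run.
past = {"Account": [10, 12, 9, 11, 10, 13, 11], "Contact": [5, 6, 5, 7, 6]}
latest = {"Account": 250, "Contact": 6, "Opportunity": 40}
print(anomalous_object_types(past, latest))
```

Unlike manually configured alerts, the thresholds here adapt to each object type’s own history, though a fixed three-sigma rule would still need tuning against real churn patterns.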

In summary, AI promises to accelerate innovation in the areas where we currently use data-driven insights to improve the performance of DevOps tools and processes. The result will be even higher deployment success rates leading to increased agility, better code quality and performance, and enhanced security.