We are living in an information-infused society that depends heavily on the technological innovations taking place all around us. As consumers become more connected, they are empowered with more information and more choices, and they expect fast, consistent information to be available at all times. Likewise, our business leaders expect their IT teams to build relevant applications and stay ahead of their customers’ needs with an excellent customer experience.

My IBM colleagues and I have been working very closely with major enterprise clients as they begin their DevOps transformations. We’ve found a few key points and messages that resonate very well with decision makers who need to set a new course for their teams in the wake of mobile, big data, analytics, cloud, and Internet of Things technologies.

So what is it about DevOps that is so appealing? First and foremost is the notion that DevOps is all about attaining Speed to Value. Speed is king right now because so many of the business leaders we work with are under the gun to deliver value as quickly as possible. Yet, because of the high cost of technical debt, they have reached a point where speed has to be sustainable. It’s no longer practical to go outside their organizations for high-priority projects, as many of them recently did for mobile applications. They need a holistic approach that builds on their existing capabilities.

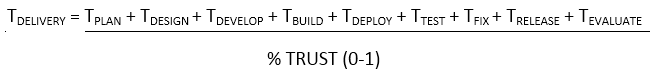

Well, what does that have to do with math? Here’s the thing: everyone is trying to minimize the time it takes to deliver value and obtain feedback. We can express the time to delivery as the following equation:

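$$T_{\text{delivery}} = T_{\text{plan}} + T_{\text{design}} + T_{\text{develop}} + T_{\text{build}} + T_{\text{deploy}} + T_{\text{test}} + T_{\text{fix}} + T_{\text{release}} + T_{\text{evaluate}}$$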

In an optimal world, or a fully automated software factory, $T_{\text{delivery}}$ is optimized by minimizing the time for each of the tasks required to complete the delivery. Most organizations follow a software development life cycle (SDLC) based on this simple math: they estimate the time for each part, and the total project plan is the sum of the parts. Further, they tend to define work in relatively large chunks, a big-up-front style of work definition that inherently increases the time for each task ($T_x$). As a result, the industry has about a 50% (or worse) failure rate when it comes to completing projects within the estimated time. That causes a lot of hardship, because the business can’t count on IT to deliver value to customers in the projected time frames, let alone with speed.

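Because application delivery is a set of very human-oriented tasks, a key factor that determines speed to value is TRUST. This becomes obvious when you draw a value stream map of how work gets done at a given customer site. As members of software delivery teams lose trust in the validity of the work as it flows through the lifecycle, a large amount of rework and waste is introduced. In mathematical terms, with trust expressed as a fraction between 0 and 1, our equation becomes:

$$T_{\text{delivery}} = \frac{T_{\text{plan}} + T_{\text{design}} + T_{\text{develop}} + T_{\text{build}} + T_{\text{deploy}} + T_{\text{test}} + T_{\text{fix}} + T_{\text{release}} + T_{\text{evaluate}}}{\text{Trust}}$$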

That is, the tasks we do in a delivery cycle are affected by the degree of trust we have in the hand-offs from one task to the next. With zero trust, $T_{\text{delivery}}$ becomes infinite (division by zero); with 100% trust, $T_{\text{delivery}}$ is limited only by how fast each task can be performed.
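To make the model concrete, here is a minimal Python sketch, assuming all task times share one unit (days, say) and trust is a fraction in the interval (0, 1]. The function name `delivery_time` and the task times are hypothetical, chosen only to mirror the terms of the equation.

```python
from typing import Mapping


def delivery_time(task_times: Mapping[str, float], trust: float) -> float:
    """Trust-adjusted delivery time: the sum of task times divided by trust.

    trust is a fraction in (0, 1]; 1.0 means every hand-off is fully trusted.
    """
    if not 0.0 < trust <= 1.0:
        # Zero trust would mean dividing by zero: delivery never completes.
        raise ValueError("trust must be in the interval (0, 1]")
    return sum(task_times.values()) / trust


# Hypothetical task times in days, one entry per term of the equation.
tasks = {
    "plan": 2, "design": 3, "develop": 10, "build": 1, "deploy": 1,
    "test": 4, "fix": 2, "release": 1, "evaluate": 1,
}
print(delivery_time(tasks, trust=1.0))  # 25.0
print(delivery_time(tasks, trust=0.5))  # 50.0
```

Halving trust doubles the delivery time even though no individual task got any slower.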

Consider this scenario, which represents what we’re hearing from our clients in workshops:

“If, on a given project team, the plan has about a 50/50 chance of being right, the design is about 85% right, the developers get about 90% of the implementation right, the testers have about 90% of the test cases right, and the release team has about 95% reliability in having the right pieces together to release, then your trust factor is something like

0.5 × 0.85 × 0.9 × 0.9 × 0.95 ≈ 33% Trust” (and that’s much better than what we usually hear)
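The quoted arithmetic can be reproduced in a few lines of Python. This sketch assumes, as the scenario implicitly does, that each stage’s reliability is independent, so overall trust is the product of the stage figures and the time multiplier is its reciprocal. The stage labels are illustrative shorthand.

```python
import math

# Per-stage "rightness" figures from the workshop scenario.
stage_accuracy = {
    "plan": 0.50,
    "design": 0.85,
    "implementation": 0.90,
    "test cases": 0.90,
    "release": 0.95,
}

# Assumption: stages are independent, so overall trust is the product.
trust = math.prod(stage_accuracy.values())
# Dividing task time by trust is the same as multiplying it by 1 / trust.
multiplier = 1 / trust

print(f"trust = {trust:.1%}")             # trust = 32.7%
print(f"multiplier = {multiplier:.2f}x")  # multiplier = 3.06x
```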

Ouch! That’s roughly a 3x multiplier on delivery time, no matter how fast we perform the individual delivery tasks. In other words, we’re always limited by our trust factor. In practice, that multiplier shows up as additional tasks we add to the process to counterbalance the lack of trust, so our equation becomes something like this:

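$$\begin{aligned}
T_{\text{delivery}} = {} & T_{\text{plan}} + T_{\text{rescope}} + T_{\text{design}} + T_{\text{arch review}} + T_{\text{develop}} + T_{\text{tech debt}} \\
& + T_{\text{build}} + T_{\text{rebuild}} + T_{\text{deploy}} + T_{\text{rework}} + T_{\text{test}} + T_{\text{retest}} \\
& + T_{\text{fix}} + T_{\text{refix}} + T_{\text{release}} + T_{\text{rollback}} + T_{\text{re-release}} + T_{\text{evaluate}}
\end{aligned}$$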

And that’s the issue our customers are facing today. There are a lot of wasteful tasks, checks, and balances that have crept into our delivery process due to lack of trust.
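The split between value-adding and trust-compensating work can be made explicit with a small sketch. Assuming you can estimate the time spent per task (for example, from a value stream map), the hypothetical helper `waste_share` below reports what fraction of delivery time goes to the tasks that appear only in the expanded equation. The task labels mirror its terms; the equal task times are purely illustrative, not measured data.

```python
from typing import Mapping

# Terms of the original equation (value-adding work).
VALUE_TASKS = ["plan", "design", "develop", "build", "deploy",
               "test", "fix", "release", "evaluate"]
# Terms added to compensate for lack of trust.
TRUST_COMPENSATING_TASKS = ["rescope", "arch review", "tech debt", "rebuild",
                            "rework", "retest", "refix", "rollback", "re-release"]


def waste_share(task_times: Mapping[str, float]) -> float:
    """Fraction of total delivery time spent on trust-compensating tasks."""
    total = sum(task_times.values())
    if total <= 0:
        raise ValueError("total task time must be positive")
    waste = sum(t for name, t in task_times.items()
                if name in TRUST_COMPENSATING_TASKS)
    return waste / total


# Illustrative only: if all 18 terms took equal time, half of
# the delivery time would go to trust-compensating work.
equal_times = {name: 1.0 for name in VALUE_TASKS + TRUST_COMPENSATING_TASKS}
print(waste_share(equal_times))  # 0.5
```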

So, how does DevOps help with this problem? It’s simple, really: the DevOps approach is to attack the TRUST issue head on while simultaneously reducing the task times ($T_x$) in order to shorten the overall time to delivery. For example, if we apply the DevOps practice of breaking work into small chunks, getting it out to users early, and getting feedback, we can immediately reduce the overall $T_{\text{delivery}}$. That’s because this practice positively impacts both the numerator (shorter task times) and the denominator (higher trust). By limiting scope, we make the work easier to understand; easier to turn into working code, test, and deploy; quicker to put in front of users for feedback; and more likely to show us whether we’re on the right track.

The same holds for other DevOps practices!

The key is finding the right balance of practices to apply to optimize this equation for a given organization, based on its current bottlenecks and waste.
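One hedged way to locate the biggest lever, assuming the same multiplicative trust model and the workshop figures, is to ask how much overall trust would rise if each stage alone became fully reliable. The function name `trust_if_stage_perfect` is hypothetical.

```python
import math
from typing import Dict, Mapping

# Per-stage accuracy figures from the workshop scenario above.
stage_accuracy = {
    "plan": 0.50,
    "design": 0.85,
    "implementation": 0.90,
    "test cases": 0.90,
    "release": 0.95,
}


def trust_if_stage_perfect(accuracy: Mapping[str, float]) -> Dict[str, float]:
    """Overall trust (product of stage accuracies) if one stage alone reached 100%."""
    return {
        stage: math.prod(a for other, a in accuracy.items() if other != stage)
        for stage in accuracy
    }


improved = trust_if_stage_perfect(stage_accuracy)
bottleneck = max(improved, key=improved.get)
print(bottleneck, f"{improved[bottleneck]:.1%}")  # plan 65.4%
```

Under this model the weakest stage is always the biggest lever, so the sketch mainly quantifies the gain: in this scenario, making planning fully reliable would by itself double overall trust.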

DevOps practices address this through three main impact points:

Speed to Value depends upon TRUST

TRUST is enabled by:

- Clarity: A clear definition of desired outcome(s)

- Collaboration: Team-wide communication and visibility

- Consistency: Systematic and repeatable steps

When a team masters these three elements, the time to perform each task shrinks and the degree of TRUST increases. DevOps practices affect these trust-building elements as follows:

Clarity:

- Break work up into smaller chunks and iterate

- Seek a minimum viable product (MVP)

- Define clear outcomes, ‘sponsor’ users, and playback (Design Thinking)

- Reduce dependencies with loosely coupled architectures (containers, microservices, SOA)

Collaboration:

- Form cross-functional (end-to-end) teams

- Use big visual radiators

- Have a ‘single source of truth’ for all development assets: requirements, code, deployable assets, Infrastructure as Code, etc.

- Be transparent with metrics

- Plan and reprioritize frequently

- Actively seek user feedback

Consistency:

- Automate, automate, automate

- Continually improve by replacing manual tasks with automation

Speed to Value will be enabled by eliminating the bottlenecks and waste that have developed due to lack of trust. The key for organizations is to recognize that their current practices are maxed out and not sustainable when trying to move at higher speed. A perfect storm is amassing with big data, analytics, cloud, mobile, and IoT that will force customers either to make a DevOps transformation quickly or to become victims of the storm. Try using this simple math to help organizations understand how to move ahead and how our IBM solutions can be applied to attain speed to value.

This year I have had the great opportunity to work with our DevOps CTO, Sanjeev Sharma, customer thought leaders such as Carmen DeArdo of Nationwide, and our field teams around the world on DevOps transformations. Sanjeev, Carmen, and I decided to summarize our experiences in a three-part blog series, and this post is the first in that series.

To learn more about IBM’s DevOps approach, check out www.ibm.com/devops. Also, watch the replay of a recent webcast on Variable Speed DevOps that I presented with Sanjeev Sharma and Carmen DeArdo.

About the Author: Lee Reid

Lee Reid, Senior IT Specialist, IBM, is a senior solution architect with a focus on practical adoption of software engineering tools, software delivery solutions, application life cycle management, and DevOps. He has over 25 years of experience in software engineering, design, programming, testing, development, tools & methodology, team leadership, and strategic planning.
