If you are developing software in 2017, chances are you are looking at microservices-based architectures. Microservices is an approach to application development in which a large application is built as a suite of modular services. Containers are a natural fit because of the isolation and packaging they provide. Containers further enable speed, resource density, flexibility and portability.

To get the benefits of containers and microservices, look to the 12-factor app, a popular methodology for building “software-as-a-service apps that:

- Use declarative formats for setup automation;

- Have a clean contract with the underlying operating system, offering maximum portability between execution environments;

- Are suitable for deployment on modern cloud platforms;

- Minimize divergence between development and production, enabling continuous deployment for maximum agility;

- And can scale up without significant changes to tooling, architecture, or development practices.”

In this article, we highlight how to implement these principles when deploying applications in containers using AWS primitives.

Developing Microservices

An effective way to achieve a clean contract in the system is to define service boundaries that are specific to your application’s design. The microservices define a clean contract in the application layer. Each microservice can be tracked in a version control system such as Git, hosted for example on AWS CodeCommit, a fully managed source control service that makes it easy for companies to host secure and highly scalable private Git repositories, as an alternative to GitHub.

Isolation of crashes, isolation for security and independent scaling all need to be considered. Containers wrap application code in a unit of deployment that captures a snapshot of the code as well as its dependencies, thus extending portability. A microservices architecture inherently ensures that if one small piece of your service crashes, only that part of your service goes down.

Docker packages the operating system, application and dependencies together, thus enabling portability. Running many Docker containers at scale is a hard problem, of the order of O(n²). Amazon EC2 Container Service (ECS) is a highly scalable, high-performance container management service that supports Docker containers and runs Docker-based applications on a managed cluster of Amazon EC2 instances. Amazon ECS eliminates the need to install, operate and scale cluster management infrastructure, enabling a highly concurrent environment.

There are multiple container orchestration platforms that do a good job of running containers at scale, but operating the platform itself is a challenge. Users end up spending considerable time on running the platform instead of focusing on the application.

While Docker enables clean packaging of software, the application should be written to be consistent across environments; environment dependencies should be removed from the code to enhance disposability.
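One way to keep environment dependencies out of the code is to resolve all configuration from environment variables at process start. The following is a minimal sketch of that idea, not a prescribed implementation; the variable names (`DATABASE_URL`, `LOG_LEVEL`, `REQUEST_TIMEOUT_S`) and the `Settings` class are hypothetical and chosen only for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Runtime configuration, resolved once at process start."""
    database_url: str
    log_level: str
    request_timeout_s: float


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from environment variables (names are hypothetical)."""
    try:
        database_url = env["DATABASE_URL"]  # required: fail fast if absent
    except KeyError:
        raise RuntimeError("DATABASE_URL must be set in the environment") from None
    return Settings(
        database_url=database_url,
        log_level=env.get("LOG_LEVEL", "INFO"),  # optional, with a default
        request_timeout_s=float(env.get("REQUEST_TIMEOUT_S", "5")),
    )
```

Because the same image reads different values in each environment, a container can be stopped and replaced anywhere without a rebuild.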

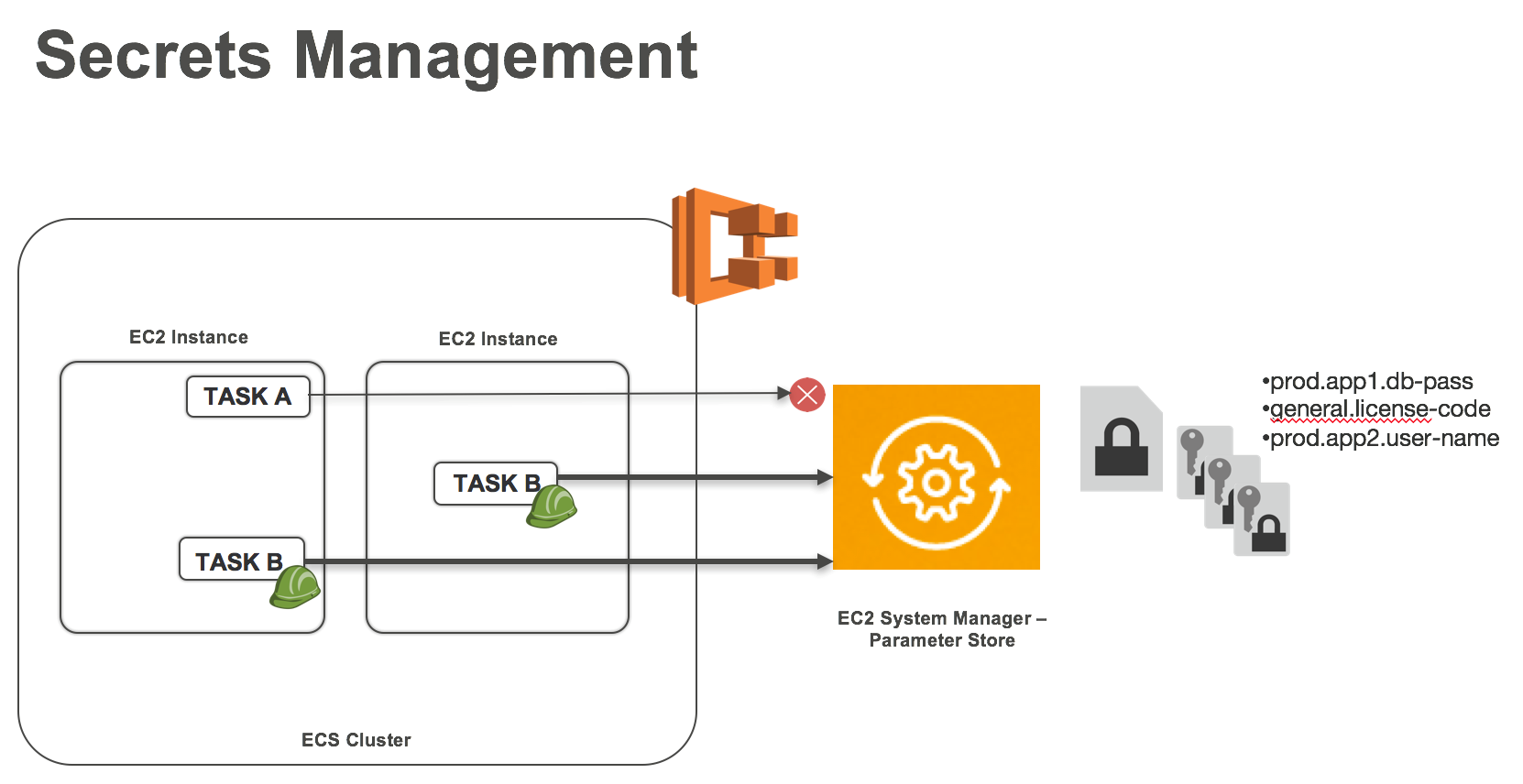

Parameter Store, a feature of Amazon EC2 Systems Manager, provides a centralized, encrypted store for sensitive information such as secrets and configuration. The service is fully managed, highly available and highly secure. Parameter Store is accessible using the Systems Manager API, AWS CLI and AWS SDKs. Secrets can be easily rotated and revoked. Parameter Store is integrated with AWS KMS so specific parameters can be encrypted at rest with the default or custom KMS key. Importing KMS keys enables you to use your own keys to encrypt sensitive data.

Figure 1: Storing container secrets in Parameter Store

Access to Parameter Store is controlled by IAM policies and supports resource-level permissions. An IAM policy that grants permissions to specific parameters or to a namespace can be used to limit access to those parameters. CloudTrail logs, if enabled for the service, record any attempt to access a parameter. Containers deployed on Amazon EC2 Container Service (ECS), which provides orchestration for your containers, can access these secrets from Parameter Store.

Amazon EC2 Container Service allows you to lock down access to AWS resources by giving each service its own IAM role.
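As an illustration of namespace-scoped access combined with a per-service role, the sketch below builds two IAM policy documents as plain Python dictionaries: a permissions policy limited to one parameter namespace, and a trust policy that lets ECS tasks assume the role. The function names, the example namespace and the account ID used in the usage note are assumptions made for this example.

```python
import json


def parameter_read_policy(region: str, account_id: str, namespace: str) -> dict:
    """Allow reading only the parameters under one namespace, e.g. 'prod/orders'."""
    prefix = namespace.strip("/")  # avoid a doubled slash in the ARN
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ssm:GetParameter", "ssm:GetParameters"],
                "Resource": f"arn:aws:ssm:{region}:{account_id}:parameter/{prefix}/*",
            }
        ],
    }


def ecs_task_trust_policy() -> dict:
    """Let ECS tasks assume the role that carries the policy above."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def to_json(policy: dict) -> str:
    """Serialize a policy document for use with the CLI, SDK or a template."""
    return json.dumps(policy, indent=2)
```

Calling `to_json(parameter_read_policy("us-east-1", "123456789012", "/prod/orders"))` yields a document that could be attached to the service's task role. Parameters encrypted with a custom KMS key would likely also need `kms:Decrypt` on that key.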

Scalability

An ECS service can be configured to use service autoscaling to adjust its desired count up or down in response to CloudWatch alarms. Autoscaling makes effective use of compute resources. Amazon ECS publishes CloudWatch metrics with the service’s average CPU and memory usage. These service utilization metrics can be used to scale the service up to deal with high demand at peak times and to scale the service down to reduce costs during periods of low utilization.
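The scaling behavior described above can be reasoned about with a small model. The sketch below is a simplified illustration, not the algorithm the service itself uses: it takes the service's average CPU utilization and moves the desired count one task at a time, bounded by a minimum and a maximum. The thresholds and limits are hypothetical defaults.

```python
def next_desired_count(
    current: int,
    avg_cpu_percent: float,
    scale_out_above: float = 75.0,  # hypothetical high-CPU alarm threshold
    scale_in_below: float = 25.0,   # hypothetical low-CPU alarm threshold
    min_tasks: int = 2,
    max_tasks: int = 10,
) -> int:
    """Return the desired task count after one scaling evaluation."""
    if avg_cpu_percent > scale_out_above:
        proposed = current + 1  # high demand: add a task
    elif avg_cpu_percent < scale_in_below:
        proposed = current - 1  # low utilization: remove a task
    else:
        proposed = current      # within the target band: no change
    # Never leave the configured bounds.
    return max(min_tasks, min(max_tasks, proposed))
```

In a real deployment, the two thresholds would correspond to CloudWatch alarms on the service's CPU metric, each attached to a scaling policy.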

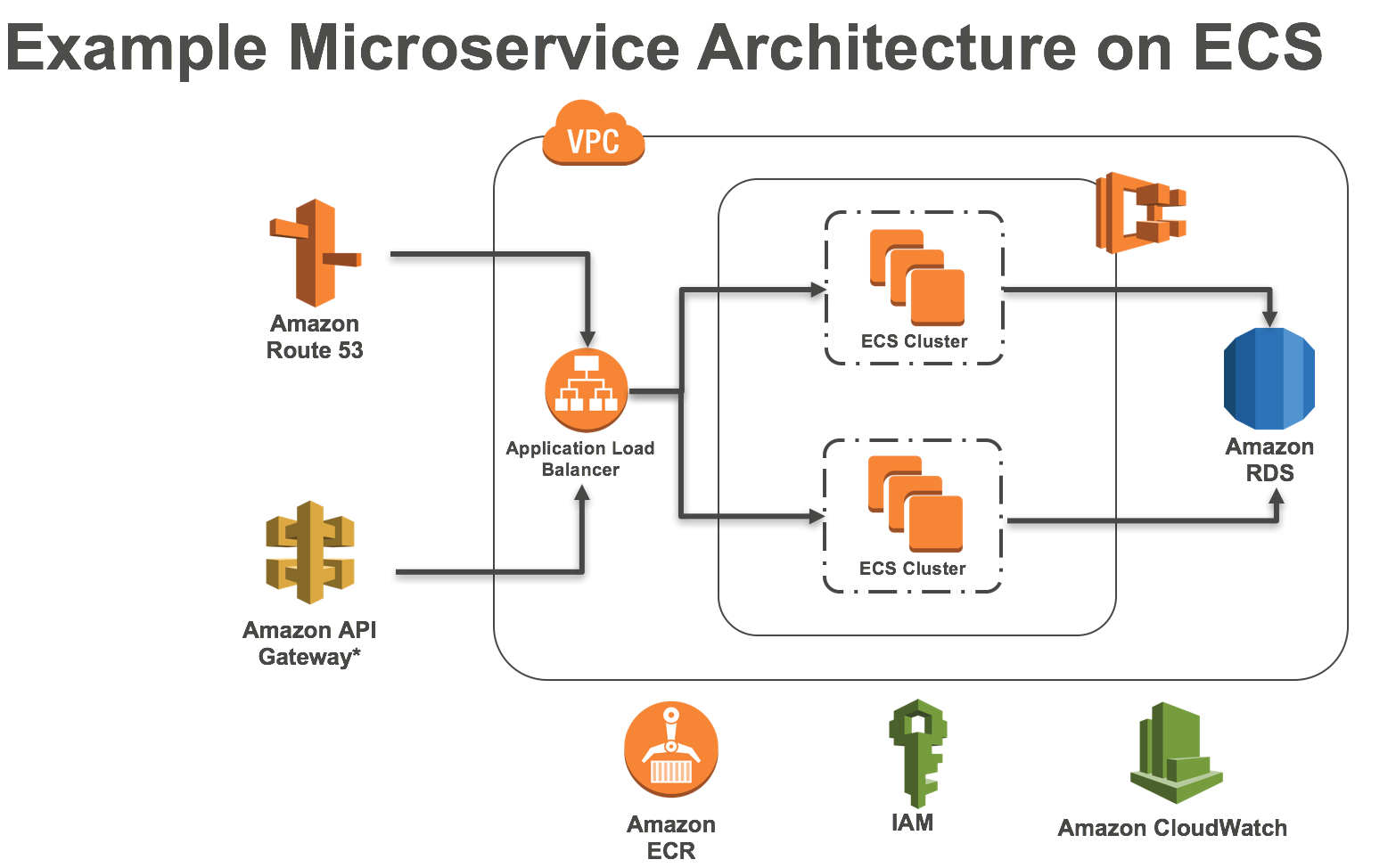

As services scale and multiple applications run on an ECS cluster, dynamic port mapping becomes essential. Application Load Balancer offers a high-performance load balancing option that operates at the application layer and allows you to define routing rules based on content. With Application Load Balancer, you can specify a dynamic host port to give your container an unused port when it is scheduled on an EC2 instance. The ECS scheduler will automatically add the task to the Application Load Balancer using this port.
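In a task definition, a dynamic host port is requested by setting `hostPort` to `0` in the container's port mapping. The sketch below builds such a container definition as a Python dictionary; the helper name and the example values in the usage note are assumptions for illustration.

```python
from typing import Any, Dict


def container_definition(
    name: str, image: str, container_port: int, memory_mib: int = 256
) -> Dict[str, Any]:
    """Container definition fragment that asks for a dynamic host port."""
    return {
        "name": name,
        "image": image,
        "memory": memory_mib,
        "essential": True,
        "portMappings": [
            {
                "containerPort": container_port,
                "hostPort": 0,  # 0 = let the instance pick an unused port
                "protocol": "tcp",
            }
        ],
    }
```

For example, `container_definition("orders", "example/orders:1.0", 8080)` describes a container that always listens on 8080 internally, so several copies can share one EC2 instance without port conflicts.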

Using AWS CloudFormation gives developers and systems administrators an easy way to create and manage a collection of related AWS resources such as Application Load Balancer, ECS Clusters, VPC, service definitions and task definitions.

As described above, keeping configuration and secrets in a store such as Parameter Store allows the application to run in the execution environment as one or more processes. The services are stateless, share nothing and rely on a stateful backing service to persist data.

The services can rely on backing services such as data stores (Amazon RDS, DynamoDB, S3, EFS and Redshift), messaging and queueing systems (SNS/SQS, Kinesis), SMTP services (SES) and caching systems (ElastiCache). The code for a 12-factor app makes no distinction between local and third-party services.
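One common way to make no distinction between local and third-party services is to address every backing service by a URL taken from configuration. The sketch below parses such a URL into its parts; the `BackingService` type and the example URLs are hypothetical, and credentials are left out on the assumption that they come from a secret store.

```python
from typing import NamedTuple, Optional
from urllib.parse import urlsplit


class BackingService(NamedTuple):
    scheme: str
    host: str
    port: Optional[int]
    name: str


def parse_service_url(url: str) -> BackingService:
    """Split a service URL into the parts a client library needs."""
    parts = urlsplit(url)
    if not parts.scheme or not parts.hostname:
        raise ValueError(f"not a service URL: {url!r}")
    return BackingService(
        scheme=parts.scheme,
        host=parts.hostname,
        port=parts.port,
        name=parts.path.lstrip("/"),  # e.g. the database name
    )
```

Swapping a local database for a managed one then only changes the URL, for example from `postgres://localhost:5432/orders` to the endpoint of an RDS instance, while the calling code stays the same.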

Figure 2: An example microservices deployment on AWS

Continuous Deployment

Delivering new iterations of software at a high velocity is a competitive advantage in today’s business environment. The speed at which organizations can deliver innovations to customers and adapt to changing markets is increasingly a pivotal attribute that can make the difference between success and failure.

Continuous deployment enables developers to ship features and fixes through an entirely automated software release process. Instead of batching up large releases over a period of weeks or months and conducting deployments manually, developers can use automation to deliver versions of their applications many times a day as new software revisions are ready for users. In the same way cloud computing shortens the delivery time of resources, continuous deployment reduces the release cycle of new software to your users from weeks or months to minutes. Various techniques such as rolling updates, blue/green and canary deployments can be used to enable a continuous delivery pipeline.

Updating a service in Amazon ECS is enabled at the scheduler level. A running service can be updated to change the number of tasks that are maintained by the service or the task definition that is used by the tasks. When the service scheduler replaces a task during an update, if a load balancer is used by the service, the service first removes the task from the load balancer and waits for the connections to drain. AWS provides AWS CodeCommit, CodePipeline and CodeBuild to enable an efficient continuous delivery pipeline. The reference architecture below illustrates such a pipeline.

Figure 3: Continuous delivery and deployment

In the above reference architecture, the developer commits code to a CodeCommit repository, which triggers a CodePipeline pipeline. The pipeline branches: one stage provisions the infrastructure using AWS CloudFormation and another builds a Docker image using CodeBuild. CodeBuild builds the Docker image and pushes it to ECR, while CloudFormation deploys the ECS cluster. The ECS service, which is in autoscaling mode, picks up the image and deploys it. The same workflow works for updates, with the added step of a stack update. With a stack update, CloudFormation works out the delta and the service update replaces the containers with the new image.

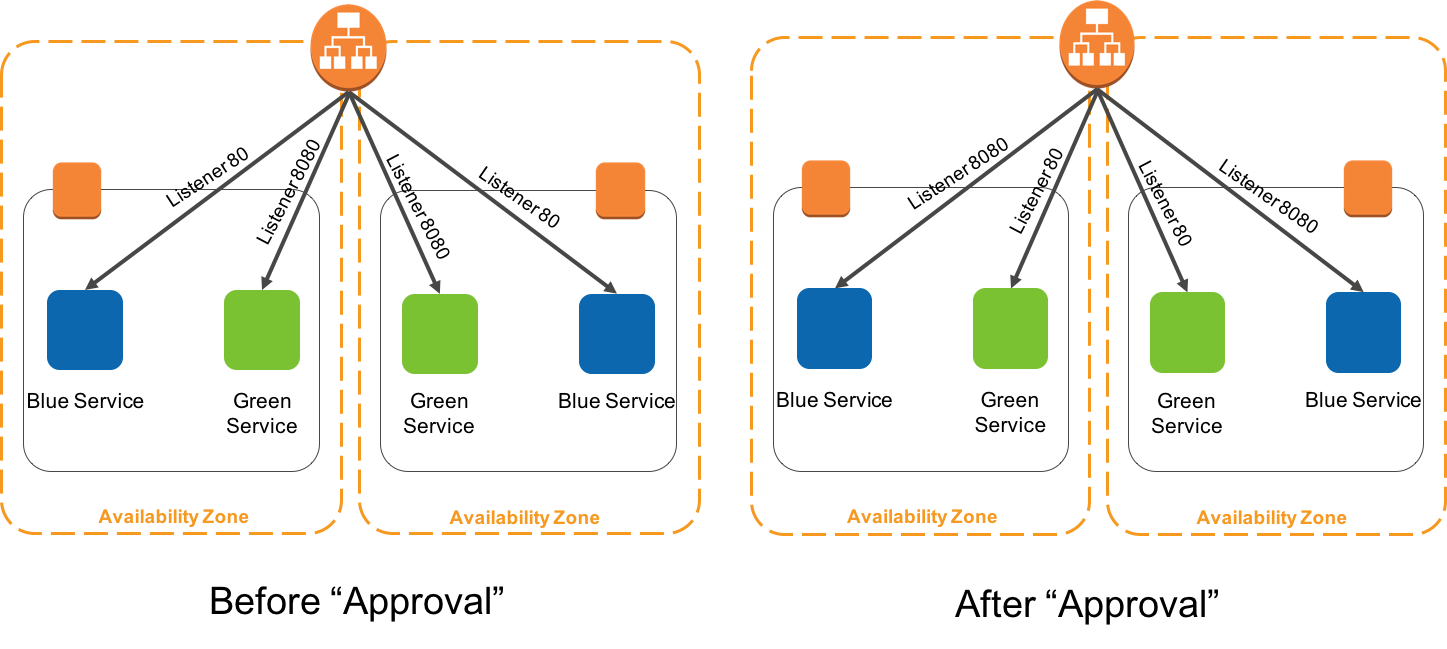

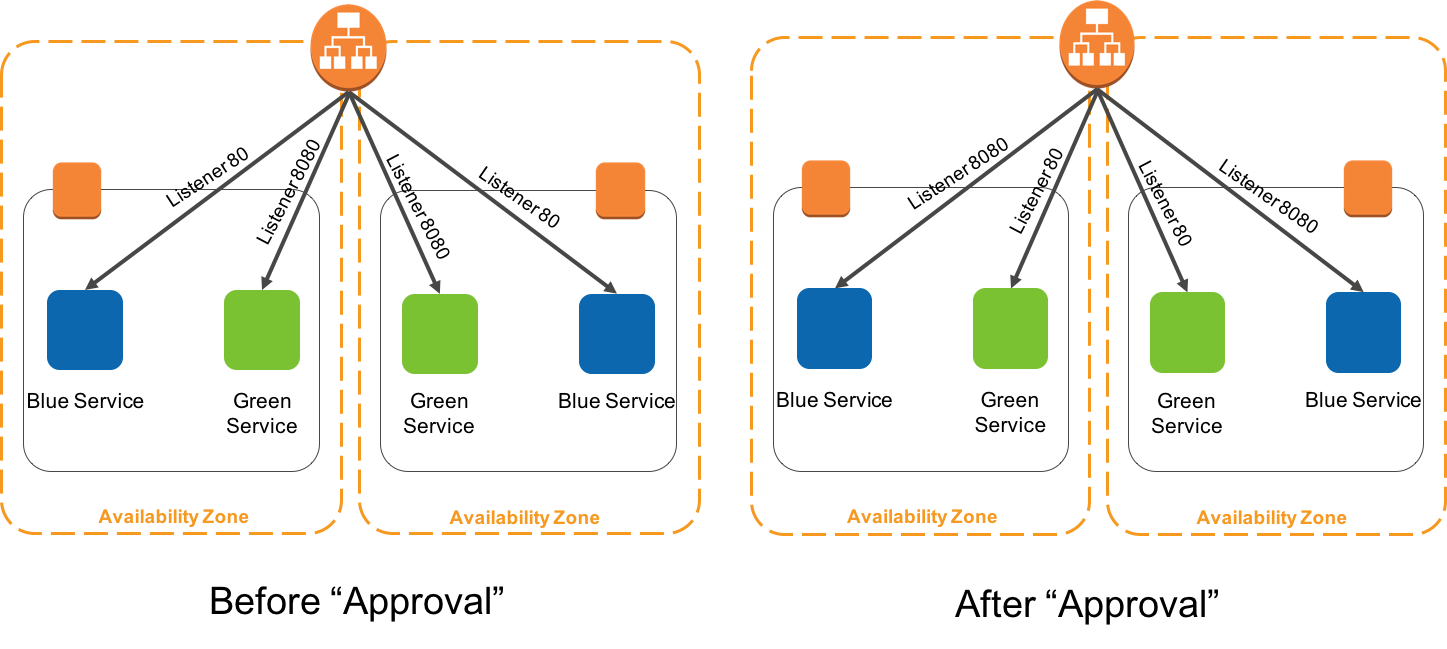

Blue/green deployments are a type of immutable deployment that help you deploy software updates with less risk. The risk is reduced by creating separate environments for the current running or “blue” version of your application, and the new or “green” version of your application. This type of deployment gives you an opportunity to test features in the green environment without impacting the current running version of your application. When you’re satisfied that the green version is working properly, you can gradually reroute the traffic from the old blue environment to the new green environment by modifying DNS. By following this method, you can update and roll back features with near zero downtime.

Figure 4: Implementing blue/green with ECS

As demonstrated in the above reference architecture, target groups and path-based routing at the Application Load Balancer can be used to direct traffic from the ALB to a blue (live) service and a green (test) service. Once the testing is completed, the target groups can be switched to redirect all live traffic to the green (live) service and all internal traffic to the blue (deprecated) service.
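The switch described above amounts to exchanging the target groups behind two routing rules. The sketch below models the listener rules as a mapping from path pattern to target group name and performs the swap; the path patterns and target group names are hypothetical, and the function only models the change rather than calling any AWS API.

```python
from typing import Dict


def swap_target_groups(
    rules: Dict[str, str],
    live_path: str = "/*",        # hypothetical rule for live traffic
    test_path: str = "/test/*",   # hypothetical rule for internal testing
) -> Dict[str, str]:
    """Return new rules with the live and test target groups exchanged."""
    swapped = dict(rules)  # copy so the current rules stay available for rollback
    swapped[live_path] = rules[test_path]
    swapped[test_path] = rules[live_path]
    return swapped
```

Applying the same swap a second time restores the original routing, which is what makes rollback straightforward with this approach.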

Canary release is a technique to reduce the risk of introducing a new software version in production by slowly rolling out the change to a small subset of users before rolling it out to the entire infrastructure and making it available to everybody.

Figure 5: Implementing canary deployment for containers on AWS

In the above reference architecture, a container can emit CloudWatch events that can be used to determine whether to increase the flow of traffic. When a container signals that it can take more traffic, Amazon Route 53 weighted routing can increase the traffic to the Application Load Balancer and thus to the container. As canaries proliferate, maintaining the state of each canary is important for rollback and deployment. DynamoDB and a Lambda filter allow the state to be stored in the pipeline based on CloudWatch events. AWS Step Functions helps coordinate the components of the pipeline using visual workflows.
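With weighted routing, each record receives a share of traffic equal to its weight divided by the sum of all weights. The sketch below computes those shares and generates a stepwise canary schedule; the record names `stable` and `canary` and the example percentages are assumptions for illustration, and the decision to advance a step is left to whatever signal the pipeline uses.

```python
from typing import Dict, List


def traffic_share(weights: Dict[str, int]) -> Dict[str, float]:
    """Fraction of traffic each weighted record receives."""
    total = sum(weights.values())
    if total == 0:
        # This sketch treats an all-zero configuration as an error.
        raise ValueError("at least one record needs a non-zero weight")
    return {name: weight / total for name, weight in weights.items()}


def canary_schedule(canary_percentages: List[int]) -> List[Dict[str, int]]:
    """Weights for each rollout step, e.g. [5, 25, 50, 100]."""
    schedule = []
    for pct in canary_percentages:
        if not 0 <= pct <= 100:
            raise ValueError(f"percentage out of range: {pct}")
        schedule.append({"stable": 100 - pct, "canary": pct})
    return schedule
```

A rollback is the same operation in reverse: setting the canary weight back to zero returns all traffic to the stable version.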

Another consideration is that the development, staging and production systems should be as similar as possible. An efficient deployment pipeline makes this achievable. The environments should be closely aligned, from both an operational and a development perspective, in terms of the operating system, Docker version, shared libraries and microservices deployed.

Developers can also run one-off admin processes as a task, in an environment identical to that of the regular long-running processes of the app. They run against a release, using the same codebase and configuration as any process run against that release. Admin code can be shipped with application code to avoid synchronization issues.
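On ECS, a one-off admin process can reuse the service's task definition and override only the command. The sketch below assembles the request parameters for such a run as a dictionary, following the shape of the ECS RunTask API; the helper name and the example values are hypothetical, and no call to AWS is made here.

```python
from typing import Any, Dict, List


def admin_task_request(
    cluster: str, task_definition: str, container: str, command: List[str]
) -> Dict[str, Any]:
    """Parameters for running one admin command against an existing release."""
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,  # same image and config as the service
        "count": 1,                         # one-off: a single task
        "overrides": {
            "containerOverrides": [
                {"name": container, "command": command}
            ]
        },
    }
```

For example, `admin_task_request("prod", "orders:42", "orders", ["python", "manage.py", "migrate"])` describes a migration run in the same environment as revision 42 of the service. With boto3, the resulting dictionary could be passed as keyword arguments to the ECS client's `run_task` method.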

Monitoring and Governance

Application logs are useful for many reasons. They are the primary source of troubleshooting information. In the field of security, they are essential to forensics. Web server logs are often leveraged for analysis (at scale) to gain insight into usage, audience and trends. A 12-factor app never concerns itself with routing or storage of its output stream. Instead, the logs collected from the output streams of all running processes and backing services are streamed to a centralized logging service such as Amazon CloudWatch. Amazon ECS provides a log driver setting on the task definition that ships these log streams to CloudWatch.
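On the application side, this means writing log events to standard output and leaving transport to the platform. The following sketch configures Python's standard `logging` module to emit one JSON object per line; the logger name `orders` and the choice of fields are assumptions made for this example.

```python
import json
import logging
import sys
from typing import IO, Optional


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps(
            {
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),
            }
        )


def configure_logging(
    stream: Optional[IO[str]] = None, level: int = logging.INFO
) -> logging.Logger:
    """Send log events to stdout (or a given stream); no files, no rotation."""
    handler = logging.StreamHandler(stream or sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("orders")  # hypothetical service name
    logger.handlers = [handler]           # replace any earlier handlers
    logger.setLevel(level)
    logger.propagate = False              # avoid duplicate output via the root logger
    return logger
```

With the `awslogs` log driver configured on the task definition, lines written this way end up in CloudWatch Logs without the application knowing where they go.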

Centralized logging has several benefits: disk space on the Amazon EC2 instances isn’t consumed by logs, and log services often include additional capabilities that are useful for operations. For example, CloudWatch Logs includes the ability to create metric filters that can alarm when there are too many errors, and it integrates with Amazon Elasticsearch Service and Kibana to enable you to perform powerful queries and analysis.

Tracing is an important aid when debugging and troubleshooting issues. The AWS X-Ray daemon is a software application that gathers raw segment data and relays it to the AWS X-Ray API. The daemon works in conjunction with the AWS X-Ray SDKs and must be running so that data sent by the SDKs can reach the X-Ray service. You can easily run AWS X-Ray as a daemon on an ECS cluster to enable tracing of the containers.
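To make the data flow between the SDKs and the daemon concrete, the sketch below builds the kind of datagram that is sent to the daemon: a small JSON header, a newline, and a segment document. In practice the X-Ray SDKs do this for you; the helper names here are hypothetical and only a minimal set of segment fields is included.

```python
import json
import os

# Header line that precedes every segment document sent to the daemon.
DAEMON_HEADER = '{"format": "json", "version": 1}'


def new_trace_id(start_time: float) -> str:
    """Trace ID: version, epoch seconds as 8 hex digits, 24 random hex digits."""
    return f"1-{int(start_time):08x}-{os.urandom(12).hex()}"


def build_segment_datagram(name: str, start_time: float, end_time: float) -> bytes:
    """Encode one completed segment for delivery to the daemon."""
    segment = {
        "name": name,
        "id": os.urandom(8).hex(),           # 16 hex digits
        "trace_id": new_trace_id(start_time),
        "start_time": start_time,            # epoch seconds
        "end_time": end_time,
    }
    return (DAEMON_HEADER + "\n" + json.dumps(segment)).encode("utf-8")
```

The daemon listens for these datagrams over UDP, on port 2000 by default, so a container needs network access to wherever the daemon runs on the cluster.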

Governance is a key aspect of software design and development. Maintaining a consistent style and standard, along with operational SLAs, is paramount to enterprise deployments. SLAs around security, recovery time objective and recovery point objective must be considered. AWS Service Catalog can be used to list microservices stacks in a catalog, with access control and a notification mechanism.

Conclusion

With AWS primitives, you can utilize the 12-factor app pattern to achieve scalable, fault-tolerant and highly available microservices with continuous deployment. You can find further resources at: https://aws.amazon.com/ecs/getting-started/ and https://aws.amazon.com/ecs/resources/

Arun Gupta is a Principal Open Source Technologist at Amazon Web Services. He has built and led developer communities for 12+ years at Sun, Oracle, Red Hat and Couchbase. He has deep expertise in leading cross-functional teams to develop and execute strategy, planning and execution of content, marketing campaigns, and programs. Prior to that he led engineering teams at Sun and is a founding member of the Java EE team. He has extensive speaking experience in more than 40 countries on myriad topics and is a JavaOne Rock Star for four years in a row. Gupta also founded the Devoxx4Kids chapter in the US and continues to promote technology education among children. He is a prolific blogger, author of several books, an avid runner, a globe trotter, a Docker Captain, a Java Champion, a JUG leader and a NetBeans Dream Team member.

Asif Khan is a Tech leader with Amazon Web Services. He provides technical guidance, design advice and thought leadership to some of the largest and most successful AWS customers and partners on the planet. His deepest expertise spans application architecture, containers, devops, security, machine learning and SaaS business applications. Over the last 12 years, he’s brought an intense customer focus to challenging and deeply technical roles in multiple industries. He has a number of patents and has successfully led product development, architecture and customer engagements.