Eggplant this week announced it has extended its application testing platform, which is infused with machine learning and deep learning algorithms, to include recommendations for improving tests.

In addition, the latest update to the Digital Automation Intelligence (DAI) Platform makes it easier to onboard new members to an application testing team.

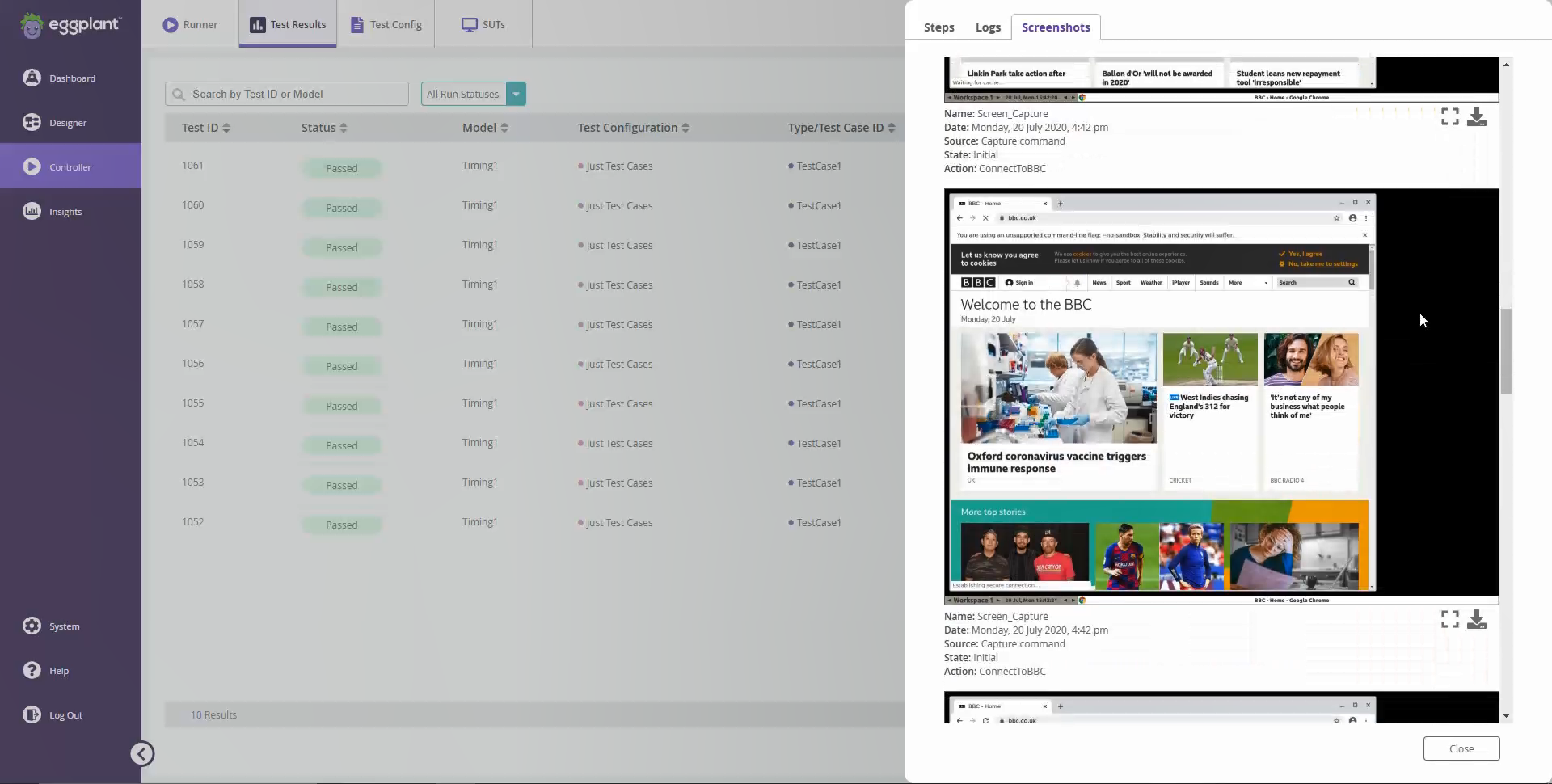

Finally, Eggplant has made it simpler to capture snapshots of the testing environment, which helps identify areas that require additional test development.

Eggplant COO Antony Edwards said the goal is to extend a low-code platform that employs modeling tools to make testing more accessible to both developers and end users. There is simply not enough testing expertise available to keep pace with the rate at which applications are now being developed, thanks mainly to the rise of DevOps, he noted.

A low-code testing platform that enables tests to be created using modeling tools makes it feasible to include more end users in the testing process, he said. Generally, end users have the best understanding of how an application should function, so including them in the testing process also yields higher-quality applications that are accepted sooner, Edwards added.

Eggplant, which was just acquired by Keysight Technologies, has been making a case for applying artificial intelligence (AI) to application testing to increase the overall capacity of testing teams. Using AI makes it possible to conduct more than 100 tests in parallel because most of the mundane tasks associated with building and updating tests have been automated, said Edwards.

There will always be a need to include humans in the testing process, but Edwards noted that AI can augment the capabilities of testing teams in a way that not only allows more tests to be conducted faster but also frees more resources for writing code rather than continually debugging applications.

Many IT organizations are now caught up in a vicious DevOps cycle. They can develop more applications faster, but the more applications they develop, the more time they need to spend debugging them. Ultimately, organizations find themselves spending more time debugging existing applications than developing new ones. AI-infused testing tools break that cycle by identifying issues before applications are deployed, which is when they are easiest and least costly to fix.

Breaking that cycle has become more of an imperative because in the age of microservices, the overall application environment is becoming more complex, Edwards said. Most testing teams are not going to be able to create the tests needed to assure the quality of those applications unless it becomes possible to generate more sophisticated tests using modeling tools that automate much of the process.

Naturally, it may take some organizations time to trust the tests and recommendations generated by an AI platform. However, in the wake of the economic downturn brought on by the COVID-19 pandemic, it's apparent that DevOps processes need to be streamlined. Arguably, one of the best places to start is by automating as much of application testing as possible.