Modern DevOps pipelines span an intricate web of continuous integration/continuous delivery (CI/CD), dynamic cloud infrastructure and rigorous security demands. As pipeline complexity grows, traditional automation struggles to maintain the necessary velocity. AI-driven DevOps represents a paradigm shift: it embeds machine learning (ML) and intelligent automation into pipelines to create systems that detect problems, heal themselves and gradually optimize performance.

Amazon SageMaker and Amazon Bedrock are two AWS services that turn this vision into reality. This article shows how they can improve CI/CD operations, infrastructure management and security through practical examples, including anomaly detection for self-healing pipelines and generative AI (Gen AI) remediation. It also examines the security and governance challenges of AI-enhanced DevOps systems and where the industry is heading.

The Promise of AI-Driven DevOps

The core objective of DevOps has always been to optimize software delivery through automation and teamwork. AI extends this approach with predictive and adaptive capabilities. AI-powered pipelines improve operations across CI/CD, infrastructure management and security in the following ways:

Intelligent CI/CD Automation

ML models trained on build and test data can predict failures before they disrupt the workflow. Detecting slow test suites and flaky results early lets teams take corrective action before builds break. For example, a predictive model could flag a 20% increase in build duration as an early warning of a bottleneck.
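To make the 20% example concrete, here is a minimal sketch that compares the latest build against the average of recent builds. It is a fixed-threshold stand-in for a trained predictive model, and the function name, sample durations and threshold are assumptions chosen for illustration.

```python
from statistics import mean


def build_duration_alert(history_s, latest_s, threshold=0.20):
    """Return (alert, relative_increase) for the latest build vs. the recent average."""
    baseline = mean(history_s)
    increase = (latest_s - baseline) / baseline
    return increase >= threshold, increase


# Hypothetical durations (in seconds) of the five most recent builds.
history = [300, 310, 290, 305, 295]
alert, increase = build_duration_alert(history, latest_s=372)
print(f"alert={alert} increase={increase:.0%}")  # alert=True increase=24%
```

A real model would account for trend and seasonality, but the alerting contract (a baseline, a measured deviation and a threshold) stays the same.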

Self-Optimizing Infrastructure

AI enables cloud environments to predict traffic patterns and adjust resources before demand changes. Predictive auto-scaling, which adds capacity ahead of traffic increases and consolidates it during quiet periods, improves efficiency and reduces cost.

Proactive Security (DevSecOps)

Combining AI with DevSecOps practices makes security proactive. AI systems identify vulnerabilities by analyzing code changes, dependencies and threat intelligence data. For example, a system can detect abnormal API call patterns in microservices and alert teams to risky dependencies, which shifts security left and reduces production threats.

These capabilities lay the foundation for secure pipelines that can heal themselves. Amazon SageMaker and Amazon Bedrock give DevOps teams the tools to implement them.

AWS SageMaker and Bedrock: A Complementary Pair

SageMaker and Bedrock operate in different ways to support AI-driven DevOps functions, yet they work together to enhance overall performance.

Amazon SageMaker

Amazon SageMaker is a managed ML platform for building, training and deploying custom models. It includes built-in algorithms, such as Random Cut Forest for anomaly detection, and handles infrastructure management so that teams can concentrate on DevOps-specific logic such as deployment success prediction and build metric analysis.

Amazon Bedrock

Amazon Bedrock launched in 2023 as a service that provides access to pre-trained foundation models (such as Amazon Titan and Anthropic Claude) through a single API. It is suited to generative tasks such as creating remediation playbooks and summarizing logs, and it does not require users to train models. Bedrock works with AWS services such as Lambda and CloudWatch, which makes it a good fit for pipelines.

SageMaker delivers tailored ML solutions, while Bedrock provides immediate, complex decision-making capabilities. Let’s see how they work in practice.

Building a Self-Healing CI/CD Pipeline with SageMaker

A self-healing pipeline detects and resolves issues autonomously, minimizing downtime and manual effort. Here’s how to build one using SageMaker for anomaly detection:

1. Gather Pipeline Data: Collect metrics like build duration, test failure rates and infrastructure usage (e.g., CPU/memory). Store the data in Amazon S3 or CloudWatch Logs for analysis.

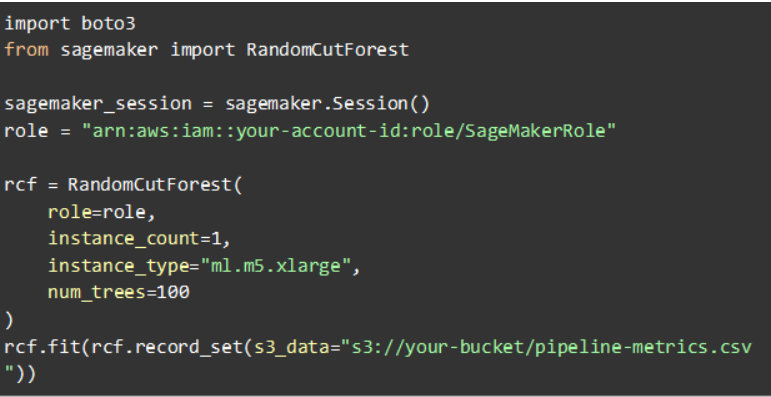
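As a rough sketch of step 1, the snippet below shapes one build's metrics as CloudWatch custom metrics and publishes them with `put_metric_data`. The namespace, metric names and the `Pipeline` dimension are assumptions for illustration. The client is passed in so the payload logic can be exercised without AWS credentials.

```python
def build_metric_data(pipeline, build_duration_s, test_failure_rate, cpu_percent):
    """Shape one build's metrics as CloudWatch MetricData entries."""
    dimensions = [{"Name": "Pipeline", "Value": pipeline}]
    return [
        {"MetricName": "BuildDuration", "Dimensions": dimensions,
         "Value": build_duration_s, "Unit": "Seconds"},
        {"MetricName": "TestFailureRate", "Dimensions": dimensions,
         "Value": test_failure_rate * 100, "Unit": "Percent"},
        {"MetricName": "AgentCpu", "Dimensions": dimensions,
         "Value": cpu_percent, "Unit": "Percent"},
    ]


def publish_metrics(cloudwatch_client, metric_data, namespace="CI/Pipeline"):
    """Send the entries to CloudWatch under a custom (hypothetical) namespace."""
    cloudwatch_client.put_metric_data(Namespace=namespace, MetricData=metric_data)


# In a pipeline step, with boto3 installed and credentials configured:
#   import boto3
#   data = build_metric_data("checkout-service", 312.5, 0.02, 71.0)
#   publish_metrics(boto3.client("cloudwatch"), data)
```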

2. Train an Anomaly Model: Use SageMaker’s Random Cut Forest algorithm to establish a baseline of ‘normal’ pipeline behavior.

The model learns from historical data (e.g., three months of build times) and flags outliers, like a sudden jump in test failures.

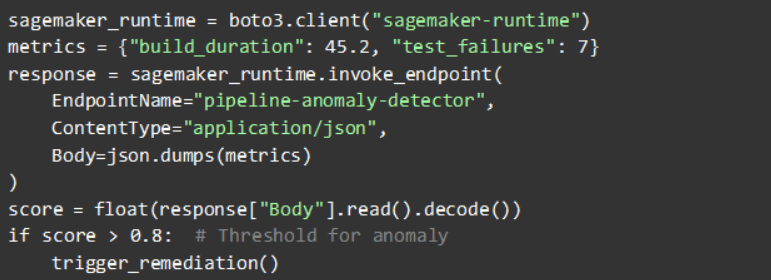
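Random Cut Forest returns an anomaly score per data point, so flagging outliers still requires a cutoff. The sketch below assumes the scores have already been produced by a trained model and applies a common starting rule: treat scores more than three standard deviations above the baseline mean as anomalous. The sample scores and function names are hypothetical.

```python
from statistics import mean, stdev


def score_cutoff(baseline_scores, k=3.0):
    """Cutoff = mean + k standard deviations of scores from normal builds."""
    return mean(baseline_scores) + k * stdev(baseline_scores)


def flag_anomalies(scores, cutoff):
    """Return the indexes of the scores that exceed the cutoff."""
    return [i for i, score in enumerate(scores) if score > cutoff]


# Hypothetical anomaly scores from builds known to be healthy.
baseline = [0.9, 1.0, 1.1, 1.0, 0.95, 1.05, 1.0, 0.98, 1.02, 1.0]
cutoff = score_cutoff(baseline)

# Scores for four new builds; the third one stands out.
new_scores = [1.01, 0.97, 2.4, 1.03]
print(flag_anomalies(new_scores, cutoff))  # [2]
```

The multiplier `k` trades sensitivity for noise. A lower value catches more regressions but raises more false alarms.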

3. Integrate with CI/CD: Deploy the model as an endpoint and invoke it from your pipeline. An AWS Lambda function can check metrics post-build:

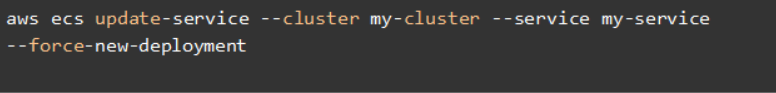
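A post-build Lambda check along these lines might look like the following sketch. It assumes a Random Cut Forest endpoint that accepts one CSV row and returns JSON of the form `{"scores": [{"score": ...}]}`. The endpoint name and the shape of the `event` are hypothetical.

```python
import json

ENDPOINT_NAME = "pipeline-rcf"  # hypothetical endpoint name


def score_build(runtime_client, features, endpoint_name=ENDPOINT_NAME):
    """Send one CSV row of build metrics to the endpoint and return its anomaly score."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Accept="application/json",
        Body=",".join(str(value) for value in features),
    )
    payload = json.loads(response["Body"].read())
    return payload["scores"][0]["score"]


def lambda_handler(event, context):
    # Imported here so score_build can be tested without AWS access.
    import boto3

    runtime = boto3.client("sagemaker-runtime")
    score = score_build(runtime, event["features"])
    # The caller supplies the cutoff (for example, mean + 3 standard deviations).
    return {"score": score, "anomalous": score > event["cutoff"]}
```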

4. Automate Remediation: Define actions such as rerunning tests on a fresh agent if a flaky environment is detected, or rolling back a deployment if error rates spike.
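One way to express such actions is a small policy function that maps a detected signal to a named action, plus a dispatcher that calls the matching handler. The signal keys, the 5% error-rate limit and the action names below are all assumptions for illustration. Real handlers would call your CI system or deployment tooling.

```python
ERROR_RATE_LIMIT = 0.05  # hypothetical: roll back when more than 5% of requests fail


def choose_remediation(signal):
    """Map a detected anomaly to the name of a remediation action."""
    if signal.get("error_rate", 0.0) > ERROR_RATE_LIMIT:
        return "rollback_deployment"
    if signal.get("flaky_environment", False):
        return "rerun_tests_on_fresh_agent"
    return "notify_team"


def remediate(signal, handlers):
    """Run the handler registered for the chosen action and return the action name."""
    action = choose_remediation(signal)
    handlers[action](signal)
    return action


# Example wiring with placeholder handlers that only print.
handlers = {
    "rollback_deployment": lambda s: print("rolling back:", s),
    "rerun_tests_on_fresh_agent": lambda s: print("rerunning tests:", s),
    "notify_team": lambda s: print("notifying team:", s),
}
remediate({"error_rate": 0.30}, handlers)
```

Checking the error rate first means a rollback takes priority when several signals fire at once.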

5. Continuous Learning: Periodically retrain the model with SageMaker Pipelines to adapt to changing norms (e.g., longer builds due to added tests).

This setup turns a static pipeline into a proactive system that catches issues such as creeping test durations before they derail releases.

Enhancing with Bedrock: Automated Remediation and Decisions

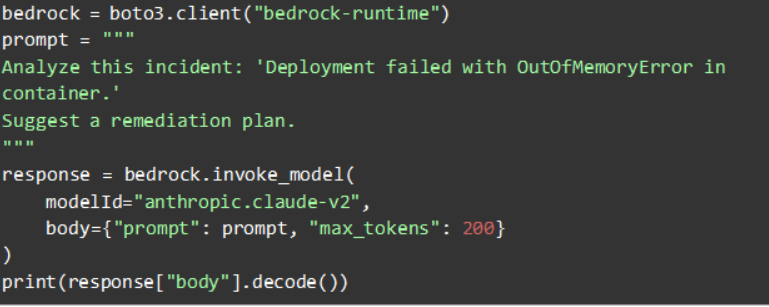

Detection is only half of the solution; Bedrock’s strength is resolution. Its generative AI capabilities let it examine an incident and propose fixes. Consider a deployment that fails with an ‘OutOfMemoryError’: the error and its surrounding context can be sent to a Bedrock model with a request for a remediation playbook.
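A minimal sketch of such a request, using the Bedrock Runtime Converse API, could look like this. The prompt wording, the inference settings and the helper names are assumptions, and `model_id` must be a model enabled in your account.

```python
def build_remediation_prompt(error, context):
    """Compose a prompt asking for a short remediation playbook."""
    return (
        "You are assisting a DevOps team.\n"
        f"A deployment failed with this error: {error}\n"
        f"Context: {context}\n"
        "Reply with a short, numbered remediation playbook."
    )


def ask_bedrock(bedrock_client, model_id, prompt):
    """Send one user message through the Converse API and return the reply text."""
    response = bedrock_client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.2},
    )
    return response["output"]["message"]["content"][0]["text"]


# With boto3 installed and credentials configured:
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   prompt = build_remediation_prompt("OutOfMemoryError", "ECS service, 1 GB container limit")
#   print(ask_bedrock(client, "<model ID enabled in your account>", prompt))
```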

The output might be:

- Increase container memory limit to 2 GB.

- Rerun deployment.

- Monitor memory usage with CloudWatch.

This playbook can be posted to Slack or executed automatically by a Lambda function that adjusts the ECS task definition.

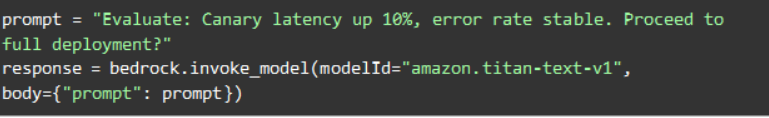
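For the first playbook step, a Lambda function would typically read the current task definition, raise the container's memory limit and register a new revision. The sketch below covers only the transformation step, as a pure function over the dictionary that `describe_task_definition` returns. The list of read-only keys reflects fields that `register_task_definition` is commonly reported not to accept and should be checked against the current ECS API. The container name is hypothetical.

```python
import copy

# Fields returned by describe_task_definition that are assumed not to be
# valid inputs to register_task_definition (verify against the ECS API).
READ_ONLY_KEYS = {
    "taskDefinitionArn", "revision", "status", "requiresAttributes",
    "compatibilities", "registeredAt", "registeredBy", "deregisteredAt",
}


def with_container_memory(task_definition, container_name, memory_mib):
    """Return a copy of the task definition with one container's memory limit changed."""
    new_definition = {
        key: copy.deepcopy(value)
        for key, value in task_definition.items()
        if key not in READ_ONLY_KEYS
    }
    for container in new_definition["containerDefinitions"]:
        if container["name"] == container_name:
            container["memory"] = memory_mib  # 2 GB = 2048 MiB
            return new_definition
    raise ValueError(f"container {container_name!r} not found")


# With boto3, the surrounding calls would be roughly:
#   current = ecs.describe_task_definition(taskDefinition="web")["taskDefinition"]
#   ecs.register_task_definition(**with_container_memory(current, "app", 2048))
```

Task definitions that also set a task-level memory value (as Fargate requires) would need that value raised too.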

Bedrock can also assist in deployment decisions. Feed it canary metrics (e.g., latency up 10%) and ask whether the rollout should proceed.
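The question can be framed so that the answer is easy to act on. In this sketch the prompt asks the model to start its reply with PROCEED or ROLLBACK, and the parser falls back to HOLD when the reply does not follow that format. The prompt wording, verdict keywords and HOLD fallback are assumptions. The prompt would be sent through the Bedrock API like any other.

```python
def build_canary_prompt(metrics):
    """Describe canary metric changes (as fractions vs. baseline) and ask for a verdict."""
    lines = [f"- {name}: {change:+.0%} vs. baseline" for name, change in metrics.items()]
    return (
        "Canary metrics for the new release:\n"
        + "\n".join(lines)
        + "\nShould the rollout proceed? Start your answer with PROCEED or ROLLBACK, "
        "then give one sentence of reasoning."
    )


def parse_verdict(reply):
    """Extract the verdict; anything unexpected becomes HOLD so a human can decide."""
    words = reply.strip().split()
    first = words[0].strip(".,:;!").upper() if words else ""
    return first if first in {"PROCEED", "ROLLBACK"} else "HOLD"


print(build_canary_prompt({"latency": 0.10, "error_rate": 0.0}))
print(parse_verdict("Proceed: a 10% latency increase is within acceptable limits."))
```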

The model might respond: ‘Proceed — a 10% latency increase is within acceptable limits’. This extends traditional rules with contextual reasoning, perfect for ChatOps integrations where teams ask a Bedrock-powered bot for guidance.

AWS’s own blueprint for code remediation, which uses Bedrock to generate patches from CloudWatch errors, shows the potential. It creates pull requests automatically, combining detection and correction in one flow.

Securing AI-Augmented Pipelines

The integration of AI systems requires organizations to adopt strict DevSecOps security practices.

Security Integration: AI speeds up vulnerability detection, such as identifying OWASP Top 10 issues in code, but AI outputs such as Bedrock’s fixes must themselves undergo review and scanning. ML systems can also monitor runtime logs to detect intrusions.

Access Control: Grant SageMaker and Bedrock identity and access management (IAM) roles with least privilege. Encrypt data in transit and remove all sensitive information from prompts.
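The sketch below illustrates both points under stated assumptions. The policy grants only the two invoke actions the pipeline needs, with `REGION`, `ACCOUNT_ID`, `MODEL_ID` and the endpoint name left as placeholders. The redaction patterns cover just two obvious cases (AWS access key IDs and `password=`, `secret=` or `token=` pairs) and are not a complete secret scanner.

```python
import json
import re

# Placeholder ARNs: replace REGION, ACCOUNT_ID, MODEL_ID and the endpoint name.
LEAST_PRIVILEGE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "sagemaker:InvokeEndpoint",
         "Resource": "arn:aws:sagemaker:REGION:ACCOUNT_ID:endpoint/pipeline-rcf"},
        {"Effect": "Allow", "Action": "bedrock:InvokeModel",
         "Resource": "arn:aws:bedrock:REGION::foundation-model/MODEL_ID"},
    ],
}

REDACTIONS = [
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[AWS_ACCESS_KEY_ID]"),
    (re.compile(r"\b(password|secret|token)\s*[=:]\s*\S+", re.IGNORECASE), r"\1=[REDACTED]"),
]


def redact(text):
    """Mask known secret patterns before the text is placed in a prompt."""
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


print(json.dumps(LEAST_PRIVILEGE_POLICY, indent=2))
print(redact("login failed password=hunter2 key AKIAIOSFODNN7EXAMPLE"))
```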

Model Governance: Use SageMaker’s Model Registry to track versions and maintain prompt versioning for Bedrock as well.

Transparency: Log AI decisions transparently, with entries such as ‘Rollback recommended due to 30% error spike’, to support auditing and build trust. Start with human verification and introduce automation as trust grows.

Robustness: Test the system with edge cases to reduce both false positives and LLM ‘hallucinations’. Move AI functions from advisory to autonomous mode gradually.
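The transparency and advisory-first points can be combined in a small audit record. In this sketch every AI recommendation is logged as structured JSON, and an action in advisory mode may run only after a named human approves it. The field names and the two modes are assumptions for illustration.

```python
import json
from datetime import datetime, timezone


def decision_record(action, reason, source, autonomous=False, approved_by=None):
    """Build an auditable record of one AI recommendation."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "reason": reason,
        "source": source,
        "mode": "autonomous" if autonomous else "advisory",
        "approved_by": approved_by,
    }


def may_execute(record):
    """Advisory recommendations need a human approver; autonomous ones do not."""
    return record["mode"] == "autonomous" or record["approved_by"] is not None


record = decision_record(
    action="rollback",
    reason="Rollback recommended due to 30% error spike",
    source="bedrock",
)
print(json.dumps(record))   # one JSON log line per decision
print(may_execute(record))  # False until someone approves
```

Switching a given action to `autonomous=True` then becomes an explicit, reviewable change rather than an implicit default.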

These steps ensure AI enhances security without introducing new risks, maintaining compliance and reliability.

Key Takeaways

Transformation: AI-driven DevOps delivers proactive issue detection, self-healing and continuous optimization across CI/CD, infrastructure and security.

Tool Synergy: The combination of SageMaker and Bedrock enables organizations to use custom ML for anomaly detection and Gen AI for remediation playbooks.

Practicality: Self-healing pipelines can be built with today’s technology, and they reduce both toil and downtime.

Security: AI technology enhances DevSecOps security, but organizations must implement governance structures to preserve trustworthiness.

Future Trends

AI adoption in DevOps continues to gain momentum. Expect smarter CI/CD tools with built-in ML capabilities, more advanced AIOps for infrastructure management and Gen AI that drafts everything from infrastructure as code (IaC) to tests. ‘AI shepherds’, engineers who specialize in refining these complex tools, are likely to emerge. Organizations that adopt these technologies early stand to lead through faster and more dependable pipelines.

Conclusion

Together, SageMaker and Bedrock enable DevOps teams to build secure, self-healing pipelines that transform software delivery. Anomaly detection and automated fixes help teams innovate faster while maintaining reliability. As the technology advances, AI will play a growing role as a DevOps co-pilot. Organizations that start building AI-powered pipelines now will be well placed to lead.