There’s a piece of conventional wisdom that runs rampant in the tech-o-sphere: You can’t teach an old dog new tricks. Supposedly, once people become enamored with a technology and use it for a long period, they are loath to adopt new ones. We’ve all heard it, and some believe it. Me? I’m not buying it any more than I believe that you can’t teach a new dog old tricks. From where I stand, a good trick is a good trick, period!

So let’s talk about a really good trick: mainframe computing. It’s a technology used across many business sectors, including airlines, insurance companies and banks. And it was designed from inception to support interaction from hundreds, if not thousands, of users. Yet many perceive it as an old dog’s trick, one that isn’t applicable to modern computing.

Actually, the opposite is true. It’s an important trick that should be used by any dog, new or old. It’s a question of adaptability: how to make the trick useful in any circumstance.

Let’s put the trick-and-dog analogy aside and talk directly. Companies are sitting on a boatload of mainframe assets that can and should be leveraged for use in this world of mobile devices, APIs, microservices and speed-of-light DevOps deployment practices. The question is, how?

To make mainframe technology viable in modern cloud-based paradigms, it must satisfy four conditions. The technology must:

- Support information exchange using modern API standards;

- Support coding in modern programming environments;

- Support automated testing throughout the deployment pipeline; and

- Be deployable according to DevOps best practices.

IBM Z, a mainframe-based technology, has done a great job of meeting these conditions. The various products and toolsets that IBM provides for IBM Z technology make working with IBM Z in today’s cloud computing environment surprisingly easy. Let’s take a look at the details.

Supporting REST APIs for Information Exchange

REST is fast becoming the standard by which information is exchanged between systems over the internet. As mobile devices and the internet of things grow as the means by which users interact with applications, the data these applications require is increasingly accessed in real time using REST. Today, REST is a first-class citizen in IBM Z technology. A key feature of IBM Z Application Discovery and Delivery Intelligence (ADDI) is the capability to discover applications running on IBM Z hardware and identify them as potential REST API candidates. Support for REST makes IBM Z applications consumable by a wide variety of computing devices. Also, supporting REST means that IBM Z applications integrate easily with standard DevOps testing and deployment practices.

ADDI provides the support for REST that allows IBM Z to be an agnostic resource on the internet

In the world of modern, cloud-based computing, REST is the lingua franca of information exchange. Supporting REST makes IBM Z data and transactions available to the modern digital ecosystem.
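To make the idea concrete, here is a minimal Python sketch of what consuming a mainframe transaction over REST looks like from the client side. Everything in it is an assumption for illustration: the `/accounts/<id>` resource, its JSON fields and the local stand-in server (built on Python's standard `http.server`) are hypothetical and are not generated by ADDI or any IBM Z product. The point is that the client needs nothing but HTTP and JSON.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# HYPOTHETICAL record standing in for data owned by a mainframe transaction.
ACCOUNTS = {"1001": {"id": "1001", "owner": "J. Smith", "balance": 2500.00}}


class AccountHandler(BaseHTTPRequestHandler):
    """Serves GET /accounts/<id> as JSON (a hypothetical REST facade)."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "accounts" and parts[1] in ACCOUNTS:
            body = json.dumps(ACCOUNTS[parts[1]]).encode("utf-8")
            self.send_response(200)
        else:
            body = json.dumps({"error": "not found"}).encode("utf-8")
            self.send_response(404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_server() -> HTTPServer:
    """Start the stand-in facade on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), AccountHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_account(base_url: str, account_id: str) -> dict:
    """The client side: any device that speaks HTTP and JSON can make this call."""
    with urlopen(f"{base_url}/accounts/{account_id}") as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    server = start_server()
    host, port = server.server_address
    print(get_account(f"http://{host}:{port}", "1001"))
    server.shutdown()
    server.server_close()
```

Because the client depends only on the HTTP contract, the same call works unchanged from a phone app, a smartwatch or another microservice.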

Support for COBOL and PL/1 Programming Using the Eclipse IDE

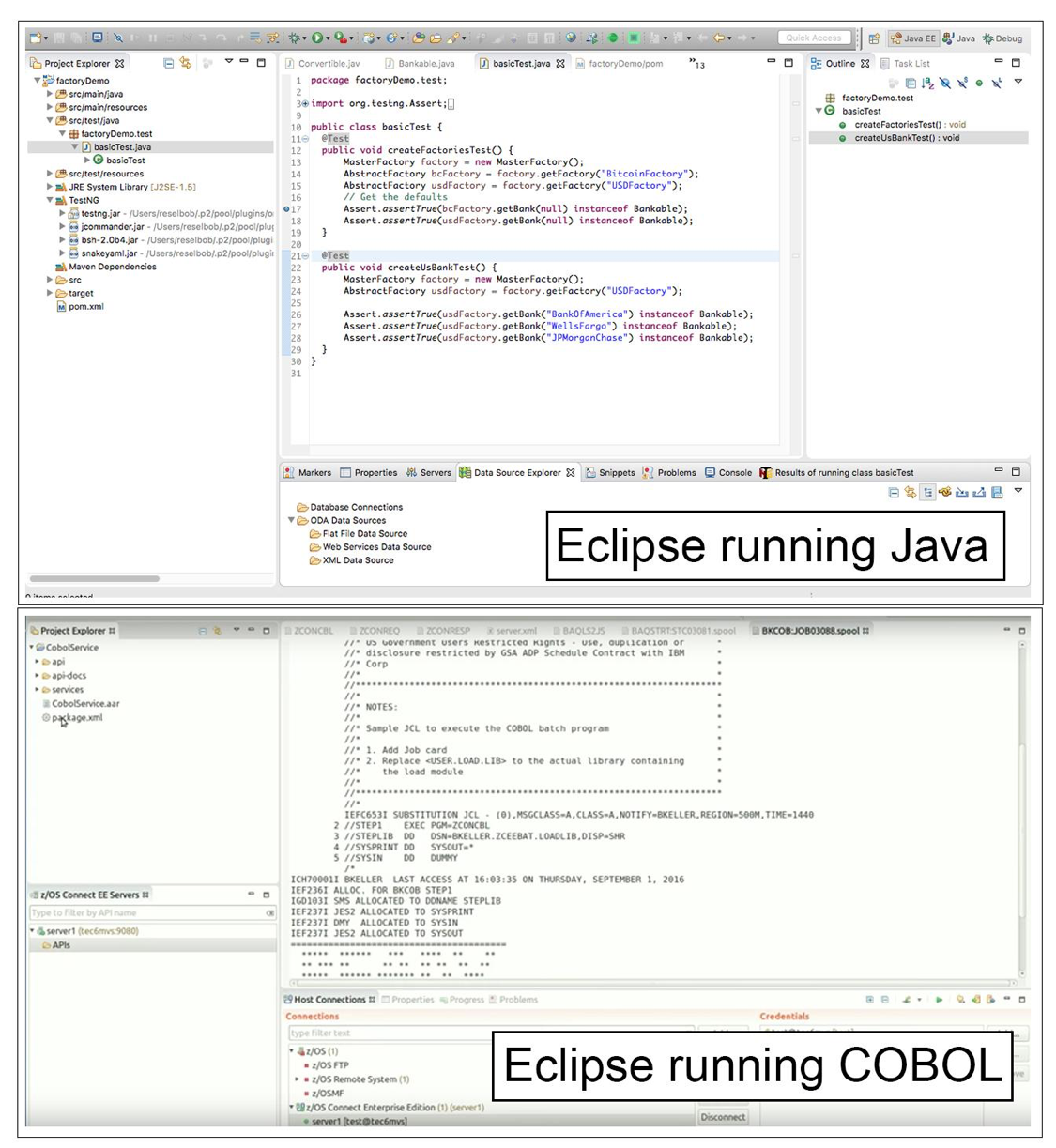

The IBM Explorer for z/OS, which is part of the Application Delivery Foundation for z Systems (ADFz) tool suite, allows COBOL and PL/1 developers to code in the Eclipse Integrated Development Environment (IDE). Eclipse is well known to a broad range of developers working in languages such as Java, PHP, JavaScript (Node.js) and Python. Using Eclipse for IBM Z programming in COBOL and PL/1 gives developers a shared programming experience and increases their productivity by an average of 15 percent.

Although the actual lines of code the developer creates will be particular to the developer’s programming language of choice, the unit testing and debugging experience Eclipse provides is similar regardless of language. A developer uses Eclipse to unit test code as it’s being developed. If the test fails, the developer sets breakpoints and starts a debugging session to inspect the code at runtime. This code-test-code-debug cycle is conducted the same way for every language. Thus, the skills acquired using the Eclipse IDE are easily transferable between languages.

Having all developers work in a common development environment creates a shared experience, an important step toward breaking down organizational silos. When knowledge workers are no longer isolated from each other, they can collaborate better and faster across teams and geographies. Combined with deploying code in an automated CI/CD pipeline, this significantly speeds a company’s ability to get code to market.

Support for a Wide Range of Testing Techniques

For a software development framework to be viable in a CI/CD infrastructure, it must support a wide variety of testing capabilities. In addition, those tests need to run automatically. As mentioned above, IBM Explorer for z/OS allows developers to create unit tests for COBOL and PL/1 code. Once unit tests are created, they can be automated for use throughout the development pipeline. Reusing tests through automation speeds up the deployment process significantly.
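IBM Explorer for z/OS unit tests target COBOL and PL/1 code; the Python sketch below only illustrates the general pattern of wrapping a unit-test suite in a pass/fail gate that a pipeline stage can call on every change. The `compute_interest` rule and its test cases are hypothetical, chosen to mimic the fixed-point arithmetic typical of COBOL programs.

```python
import io
import unittest
from decimal import ROUND_HALF_UP, Decimal


def compute_interest(balance: Decimal, annual_rate: Decimal) -> Decimal:
    """HYPOTHETICAL business rule: one month of interest, rounded half-up
    to cents (fixed-point, like a COBOL PIC 9(7)V99 field)."""
    monthly = balance * annual_rate / Decimal(12)
    return monthly.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


class InterestTests(unittest.TestCase):
    def test_typical_balance(self):
        # 1200.00 * 0.05 / 12 = 5.00
        self.assertEqual(compute_interest(Decimal("1200.00"), Decimal("0.05")), Decimal("5.00"))

    def test_rounds_half_up(self):
        # 1.00 * 0.06 / 12 = 0.005, which rounds half-up to 0.01
        self.assertEqual(compute_interest(Decimal("1.00"), Decimal("0.06")), Decimal("0.01"))

    def test_zero_balance(self):
        self.assertEqual(compute_interest(Decimal("0"), Decimal("0.05")), Decimal("0.00"))


def run_unit_tests() -> bool:
    """Run the suite the way a CI stage would and report pass or fail."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(InterestTests)
    result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    import sys

    # A non-zero exit code is what tells the pipeline to stop.
    sys.exit(0 if run_unit_tests() else 1)
```

Because the gate reduces the whole suite to an exit code, the same tests can be rerun unchanged at every stage of the pipeline.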

However, testing at the code level is not enough. As code moves through the modern deployment pipeline, it must be subjected to load and performance testing. Test engineers accustomed to using mainstream tools such as Apache JMeter or Rational Performance Tester will have little problem using IBM Z Workload Simulator for z/OS to do load and performance testing. The concepts behind load and performance testing are similar, regardless of the language and environment driving the development process. For example, Workload Simulator for z/OS allows test engineers to emulate hundreds, if not thousands, of users to exercise the software under test. Being able to create stress on a system in a controlled, reportable manner is critical for getting good performance testing metrics.
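The core mechanic of load testing, many emulated users issuing requests concurrently while each response time is recorded, can be sketched in a few lines. This is not Workload Simulator’s interface; it is a hypothetical Python illustration in which `simulated_transaction` stands in for a real request and a thread pool provides the concurrent virtual users.

```python
from __future__ import annotations

import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_transaction() -> None:
    """HYPOTHETICAL stand-in for one request to the system under test."""
    time.sleep(0.002)


def virtual_user(iterations: int) -> list[float]:
    """One emulated user: issue requests back to back and time each one."""
    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        simulated_transaction()
        latencies.append(time.perf_counter() - start)
    return latencies


def run_load_test(users: int, iterations: int) -> dict:
    """Drive `users` concurrent virtual users and summarize latency in ms."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(virtual_user, [iterations] * users))
    samples = [s for user in per_user for s in user]
    p95 = statistics.quantiles(samples, n=20)[-1]  # 95th-percentile cut point
    return {
        "requests": len(samples),
        "mean_ms": statistics.mean(samples) * 1000,
        "p95_ms": p95 * 1000,
        "max_ms": max(samples) * 1000,
    }


if __name__ == "__main__":
    print(run_load_test(users=50, iterations=20))
```

A real tool adds pacing, ramp-up and scripted transaction mixes, but the reported figures (request count, mean, 95th percentile, maximum) are the kind of controlled, reportable metrics the paragraph describes.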

Supporting DevOps Deployment Practices

Basic to DevOps best practices is the notion of releasing quality software quickly and incrementally. Instead of waiting months to do a release that has many features, the DevOps practice is to release one feature at a time, as quickly as possible. In the DevOps world, release cycles are no longer a matter of weeks and months, but rather days, if not hours. To achieve the fast release cycles emblematic of DevOps, management of the deployment pipeline must be automated.
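The fail-fast behavior of an automated pipeline can be sketched as a list of stages run in order, where the first failure stops the release. The stage names and lambdas below are hypothetical placeholders; a real pipeline would invoke build tools, test suites and deployment APIs in their place.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run every stage in order, stopping at the first failure (fail fast)."""
    log: List[str] = []
    for name, step in stages:
        if not step():
            log.append(f"{name}: FAILED")
            return False, log
        log.append(f"{name}: ok")
    return True, log


if __name__ == "__main__":
    # HYPOTHETICAL stages for releasing a single feature.
    stages = [
        ("build", lambda: True),
        ("unit tests", lambda: True),
        ("load test", lambda: True),
        ("deploy", lambda: True),
    ]
    ok, log = run_pipeline(stages)
    print(ok, log)
```

Because each run carries one small change, a failure points directly at the feature that caused it, which is what makes release cycles of days or hours practical.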

However, when it comes to mainframe assets, there is a hitch in terms of deployment units. In the microcomputing software environment, deployment units typically take the form of an executable file, a supporting dependency file or a script file, such as .exe, .jar, .js or .py. Also, given the rise of container technology, the deployment unit can be the container itself. In terms of IBM Z, the deployment units are the file and the job.

IBM engineers have taken a clever approach to managing IBM Z asset deployment. Files and jobs have become abstracted deployment units accessible via the IBM Explorer for z/OS Atlas APIs. Thus, IBM Z components can be treated like any other artifact in the deployment pipeline. For example, DevOps engineers can use the APIs exposed by Atlas to cancel a job or delete an associated file. Standardizing direct access to the IBM Z deployment pipeline through REST APIs gives DevOps engineers the flexibility required to get working code released quickly.
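The following Python sketch shows what treating IBM Z files and jobs as ordinary pipeline artifacts could look like. The base URL and resource paths are hypothetical placeholders, not the documented Atlas endpoints; the real paths and authentication scheme must come from the API’s own documentation. The requests are only built, never sent, so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple
from urllib.parse import quote
from urllib.request import Request

# HYPOTHETICAL base URL; real Atlas endpoint paths must come from its docs.
BASE_URL = "https://zos.example.com/api"


class Artifact(Protocol):
    """Anything the pipeline can back out, whatever platform it lives on."""

    def removal_request(self) -> Request: ...


@dataclass(frozen=True)
class ZosJob:
    name: str
    job_id: str

    def removal_request(self) -> Request:
        """Build (but do not send) a DELETE that cancels this job."""
        url = f"{BASE_URL}/jobs/{quote(self.name)}/{quote(self.job_id)}"
        return Request(url, method="DELETE")


@dataclass(frozen=True)
class ZosFile:
    path: str

    def removal_request(self) -> Request:
        """Build (but do not send) a DELETE that removes this file."""
        url = f"{BASE_URL}/files/{quote(self.path.lstrip('/'))}"
        return Request(url, method="DELETE")


def rollback_plan(artifacts: List[Artifact]) -> List[Tuple[str, str]]:
    """List the HTTP calls a pipeline would make to back out a release."""
    plan = []
    for artifact in artifacts:
        req = artifact.removal_request()
        plan.append((req.get_method(), req.full_url))
    return plan


if __name__ == "__main__":
    release = [ZosJob("PAYROLL", "JOB01234"), ZosFile("/u/app/config.json")]
    for method, url in rollback_plan(release):
        print(method, url)
```

Any other artifact type, such as a container image, fits the same plan as long as it exposes a `removal_request` method; that uniformity is the design choice the abstraction buys.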

DevOps engineers can not only automate the pipeline but also, should ad hoc intervention be required, use the Atlas APIs to make the necessary changes at a moment’s notice. Combining the Atlas APIs with ADDI’s ability to collect, correlate, calculate and produce mission-critical application and testing health metrics, as well as trend analytics, makes IBM Z a first-order participant in the world of cognitive DevOps.

Putting It All Together

One of the great things the internet has done is create a standardized way for any computing system to interact with another. As long as a system supports REST, whether it’s a smartwatch, a cellphone or a large-scale computing environment such as IBM Z, it’s a leverageable asset on the internet. And if the system meets the conditions that define DevOps best practices, it can be managed with the degree of control and predictability required to participate in the breakneck release cycles of modern software development. It really doesn’t matter whether the system is new or old, as long as it can adapt to present demands. Adaptability is key to longevity, no matter the age of the dog or the trick.